In the not-so-distant future, really big disasters, such as wildfires in California, floods in Spain or an earthquake in Japan, will be monitored and perhaps anticipated by a technology so small it is difficult to even imagine.

This new technology, called quantum computing, is enabled by nanotechnology — a way of designing technology by manipulating atoms and molecules. Paradoxically, this ultra small technology enables the processing of massively large data sets needed for complex artificial intelligence algorithms.

There is a growing consensus that AI will quickly change almost everything in the world.

The AIU cluster, a collection of computer resources used to develop, test and deploy AI models at the IBM Research Center in upstate New York. (Credit: Enrique Shore)

The AI many people already use, such as ChatGPT, Perplexity and now DeepSeek, is based on traditional computers. Processing the data needed to answer questions put to these AI programs and to handle the tasks assigned to them takes an enormous amount of energy. For example, the energy OpenAI currently uses to handle ChatGPT's prompts in the United States costs some $139.7 million per year.

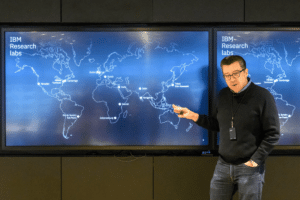

Several large private companies, including Google, Microsoft and IBM, are leading the way in this development. The International Business Machines Corp., known as IBM, currently manages the largest industrial research organization, with specialized labs located all over the world.

Glimpsing the world’s most powerful computers

The global headquarters of IBM Research is in the company's Thomas J. Watson Research Center. About one hour north of New York City, it is an impressive building designed in 1961 by Eero Saarinen, an iconic Finnish-American architect who also designed Dulles International Airport near Washington, D.C., the Swedish Theater in Helsinki and the U.S. Embassy in Oslo.

At the entrance of the IBM Research headquarters, a simple statement sums up what research scientists are trying to achieve at IBM: “Inventing what’s next.”

At the heart of the IBM Research Center is a “Think Lab” where researchers test AI hardware advancements using the latest and most powerful quantum computers. News Decoder recently toured these facilities.

There, Shawn Holiday, a product manager at the lab’s Artificial Intelligence Unit (AIU), said the challenge is scaling the size of semiconductors to not only increase performance but also improve power efficiency.

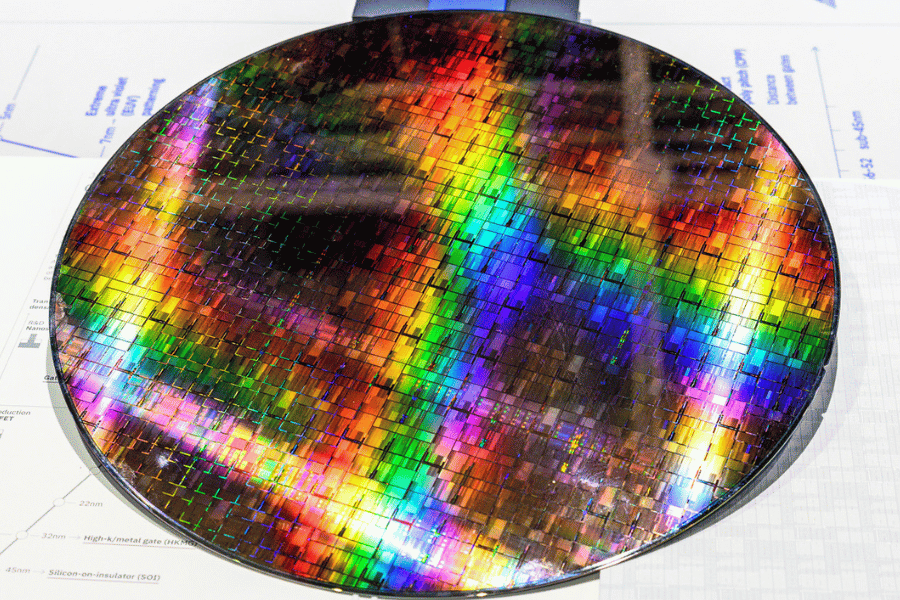

IBM was the first to develop a new transistor geometry called the gate. Basically, each transistor has multiple channels that are parallel to the surface. Each of those channels is about two nanometers thick. To grasp how small this is, consider that one nanometer is one billionth of a meter.

This new technology is not just a faster or better version of traditional computers but a totally new way of processing information. It is based not on the traditional bits that underlie modern binary computers (each bit is in either the state zero or the state one) but on qubits, short for quantum bits, a different and more complex concept.

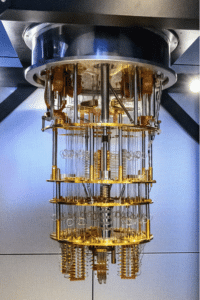

The IBM Quantum System Two, a powerful quantum computer, operating in the IBM Research Center in Yorktown Heights in upstate New York. (Credit: Enrique Shore)

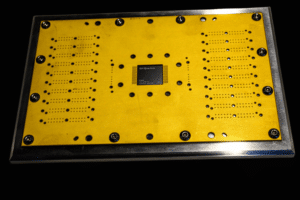

A quantum processor with more gates can handle more complex quantum algorithms by allowing for a greater variety of operations to be applied to the qubits within a single computation.

A new way of processing data

The change is much more than a new stage in the evolution of computers. For the first time, nanotechnology has enabled an entirely new branch in the history of computing, one that promises to be exponentially more capable for certain problems.

A replica of the IBM Quantum System One, IBM's first commercial quantum computer, on display at the IBM Research Center in Yorktown Heights, New York. (Credit: Enrique Shore)

The quantum bit is a basic unit of quantum information that can have many more possibilities, including being in all states simultaneously — a state called superposition — and combining with others, called entanglement, where the state of one qubit is intimately connected with another. This is, of course, a simplified description of a complex process that could hold massively more processing power than traditional computers.
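For readers who want to see superposition and entanglement in concrete terms, the following is a minimal sketch in plain Python, not IBM's software. It tracks the four amplitudes of a two-qubit system by hand. The function names are illustrative assumptions, and the two operations used (commonly called a Hadamard gate and a controlled-NOT gate) are standard textbook examples rather than anything specific to the machines described here.

```python
import math

# Amplitudes for the basis states |00>, |01>, |10>, |11>.
# The first digit is qubit q0, the second is qubit q1.

def apply_hadamard_q0(state):
    """Put q0 into an equal superposition of 0 and 1."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11),
            s * (a00 - a10), s * (a01 - a11)]

def apply_cnot_q0_q1(state):
    """Flip q1 whenever q0 is 1, which ties the two qubits together."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]

def probabilities(state):
    """Chance of observing each basis state when the qubits are measured."""
    return [abs(a) ** 2 for a in state]

start = [1.0, 0.0, 0.0, 0.0]          # both qubits begin at 0
bell = apply_cnot_q0_q1(apply_hadamard_q0(start))
probs = probabilities(bell)

for label, p in zip(["00", "01", "10", "11"], probs):
    print(f"P({label}) = {p:.2f}")
```

In this sketch the only outcomes with non-zero probability are 00 and 11, each about half the time: measuring one qubit tells you what the other will be, which is the sense in which the pair is entangled.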

The current architecture of existing quantum computers requires costly, large and complex devices that are refrigerated to extremely low temperatures, close to absolute zero (about -459°F, or -273°C), in order to function correctly. That extremely low temperature is required to change the state of certain materials so that they conduct electricity with practically zero resistance and no noise.

Even though there are some prototypes of desktop quantum computers with limited capabilities that could eventually operate at room temperature, they are unlikely to replace traditional computers in the foreseeable future. Rather, they will operate jointly with them.

IBM Research is a growing global network of interconnected laboratories.

While IBM is focused on having what it calls a hybrid, open and flexible cloud, meaning open-source platforms that can interact with many different systems and vendors, it is also pushing its own technological developments in semiconductor research, an area where its goal is to reach the absolute limits of transistor scaling.

Shrinking down to the quantum realm

At the lowest level of a chip, you have transistors. You can think of them as switches. Almost like a light switch, they can be off or they can be on. But instead of a mechanical switch, you use a voltage to turn them on and off — when they’re off, they’re at zero and when they’re on, they’re at one.

IBM Heron, a 133-qubit tunable-coupler quantum processor. (Credit: Enrique Shore)

This is the basis of all digital computation. What’s driven this industry for the last 60 years is a constant shrinking of the size of transistors to fit more of them on a chip, thereby increasing the processing power of the chip.
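To illustrate how simple on/off switches add up to computation, here is a small sketch in Python. It assumes an idealized NAND gate as the only building block (in real chips a NAND gate is built from a handful of transistors) and wires copies of it together to add two one-bit numbers. The function names are illustrative and are not taken from any IBM design.

```python
def nand(a: int, b: int) -> int:
    """Idealized NAND gate: output is 0 only when both inputs are 1."""
    return 0 if (a and b) else 1

def xor(a: int, b: int) -> int:
    """Exclusive-or built from four NAND gates."""
    n = nand(a, b)
    return nand(nand(a, n), nand(b, n))

def and_(a: int, b: int) -> int:
    """AND built from two NAND gates."""
    n = nand(a, b)
    return nand(n, n)

def half_adder(a: int, b: int) -> tuple[int, int]:
    """Add two bits; returns (sum bit, carry bit)."""
    return xor(a, b), and_(a, b)

for a in (0, 1):
    for b in (0, 1):
        s, c = half_adder(a, b)
        print(f"{a} + {b} -> sum={s}, carry={c}")
```

Chaining adders like this one is how a chip handles larger numbers, which is why fitting more switches into the same area translates into more processing power.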

IBM produces wafers together with foundry partners like Samsung and a Japanese startup called Rapidus. Consider that the two-nanometer semiconductor chips that Rapidus is aiming to produce are expected to have up to 45% better performance and use 75% less energy compared to seven-nanometer chips on the market in 2022.

Dr. George Tulevski, IBM Research scientist and manager of the IBM Think Lab, stands next to a world map showing the organization's different labs at the IBM Research Center in Yorktown Heights, New York. (Credit: Enrique Shore)

IBM predicts that there will be about a trillion transistors on a single die by the early 2030s. To put that in perspective, consider that Apple’s M4 chip for its latest iPad Pro has 28 billion transistors. (A die is the square of silicon containing an integrated circuit that has been cut out of the wafer.)
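The size of that jump is easier to appreciate with a little arithmetic. The sketch below, in Python, uses only the two figures quoted above; the variable names are illustrative, and the count of doublings is a back-of-the-envelope estimate rather than an IBM forecast.

```python
import math

M4_TRANSISTORS = 28e9           # Apple M4, as cited in the article
PROJECTED_TRANSISTORS = 1e12    # IBM's projection for the early 2030s

# How many times more transistors the projected die would hold.
ratio = PROJECTED_TRANSISTORS / M4_TRANSISTORS

# How many successive doublings of transistor count that represents.
doublings = math.log2(ratio)

print(f"About {ratio:.0f} times as many transistors")
print(f"Roughly {doublings:.1f} doublings")
```

In other words, a trillion-transistor die would hold roughly 36 times as many transistors as the M4, a little more than five doublings.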

There may be a physical limit to the shrinking of transistors, but if they can no longer be made smaller, they could be stacked in a way that the density per area goes up.

With each doubling, there is always a tradeoff between power and performance. Depending on whether you tune for power or for performance, each of these technology nodes delivers either roughly a 50% increase in efficiency or a 50% increase in performance.

A roadmap for advanced technology

The bottom line is that doubling the transistor count means being able to do more computations with the same area and the same power.

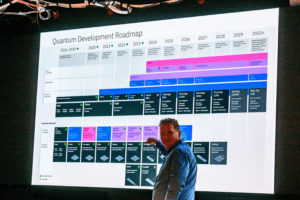

Dr. Jay M. Gambetta, IBM’s vice president in charge of the company’s overall quantum initiative, explains the expected quantum development roadmap. (Credit: Enrique Shore)

The roadmap of this acceleration is impressive. Dr. Jay Gambetta, IBM’s vice president in charge of IBM’s overall quantum initiative, showed us a table that forecasts the processing capabilities increasing from the current 5,000 gates to an estimated 100 million gates by 2029, reaching possibly one billion gates by 2033.

A quantum gate is a basic quantum circuit operating on a small number of qubits. Quantum logic gates are the building blocks of quantum circuits, like classical logic gates are for conventional digital circuits.

But the energy that computing demands is expected to diminish radically with the new, more efficient quantum computers, so the old assumption that more capacity requires more power is being revised. Efficiency is expected to improve greatly in the near future; otherwise this development would not be sustainable.

A practical example of a current project made possible thanks to quantum computing and AI is Prithvi, a groundbreaking geospatial AI foundation model designed for satellite data by IBM and NASA.

The model supports tracking changes in land use, monitoring disasters and predicting crop yields worldwide. At 600 million parameters, its current version 2.0, introduced in December 2024, is already six times bigger than its predecessor, first released in August 2023.

It has practical uses like analyzing the recent fires in California, the floods in Spain and the crops in Africa — just a few examples of how Prithvi can help understand complex current issues at a rate that was simply impossible before.

The impossible isn’t just possible. It is happening now.

Three questions to consider:

1. How is quantum computing different from traditional computing?

2. What is the benefit of shrinking the size of a transistor?

3. If you had access to a supercomputer, what big problem would you want it to solve?