After the latest marathon with the city, which ended without a deal, Philadelphia’s largest blue-collar union, AFSCME Local 33, is moving toward going on strike at 12:01 a.m. Tuesday.


-

A Multiday In-Class Essay for the ChatGPT Era (opinion)

A successful humanities course helps students cultivate critical, personally enriching and widely applicable skills, and it immerses them in the exploration of perspectives, ideas and modes of thought that can illuminate, challenge and inform their own outlooks.

Historically, the out-of-class essay assignment has been among the best assessments for getting students in humanities courses to most fully exercise and develop the relevant critical thinking skills. Through the writing process, students can come to better understand a problem. Things that seem obvious or obviously false before spending multiple days thinking and writing suddenly become no longer obvious or obviously false. Students make up their minds on complex problems by grappling with those problems in a rigorous way through writing and editing over a sustained period (i.e., not just writing in a blue book in one class session).

Unfortunately, since ChatGPT became widely available, out-of-class writing assignments have become steadily harder to justify as major assessments in introductory-level humanities courses. The intense personal engagement with perspectives and cultural artifacts central to the value of the humanities is more or less bypassed when a student heavily outsources to AI the generation and expression of ideas and analysis. As ChatGPT’s ability to write convincing papers goes up, so does the student temptation to rely on it (and so too does the difficulty for professors of reliably detecting AI).

Having experimented very extensively with ChatGPT, I have found that, at least when it comes to introductory-level philosophy courses, the material that ChatGPT can produce with 10 minutes of uninformed prompting rivals much of what we can reasonably expect students to produce on their own, especially given that one can upload readings/course materials and ask ChatGPT to adjust its voice (the reader should try this).

And students are relying on it a lot. Based on my time-consuming-and-quickly-becoming-obsolete detection techniques, about one in six of my students last fall were relying on ChatGPT in ways that were obvious. Given that it should take a student no more than 10 extra minutes on ChatGPT to make the case no longer obvious, I have to conclude that the real number of essays relying on ChatGPT in ways that conflict with academic integrity must be at least around 30 percent.

It is unclear whether AI-detection software is sufficiently reliable to justify its use (I haven’t used it), and—at any rate—many universities prohibit reliance on it. Some instructors believe that making students submit their work as a Google Doc with track-changes history is an adequate deterrent and detection tool for AI. It is not. Students are aware of their track-changes history—they know they simply have to type ChatGPT content instead of copying and pasting it. Actually, students don’t even have to type the AI-generated content: There are readily available Google Chrome extensions that take text and “type” it at manipulable speeds (with pauses, etc.). Students can copy/paste a ChatGPT essay and have the extension “type” it into a Google Doc at a humanlike pace.

Against this backdrop, I spent lots of time over the last winter break familiarizing myself with Lockdown Browser (a tool integrated with learning management software like Canvas that prevents access to and copying/pasting from programs outside of the LMS) and devising a new assignment model that I happily used this past semester.

It is a multiday in-class writing assignment, where students have access through Lockdown Browser to (and only to): PDFs of the readings, a personal quotation bank they previously uploaded, an outlining document and the essay instructions (which students were given at least a week before so they had time to begin thinking through their topic).

On Day 1 in class, students enter a Canvas essay-question quiz through Lockdown Browser with links to the resources mentioned above (each of which opens in a new tab that students can access while writing). They spend the class period outlining/writing and hit “submit” at the end of the session.

Between the Day 1 and Day 2 writing sessions, students can read their writing on Canvas (so they can continue thinking about the topic) but are prevented from being able to edit it. If you’re worried about students relying on ChatGPT for ideas to try to memorize/regurgitate (I don’t know how worried we should be about students inevitably trying this), consider introducing small wrinkles to the essay instructions during the in-class sessions (e.g. “your essay must somewhere critically discuss this example”).

On Day 2, students come to class and can pick back up right from where they left off.

A Day 2 session looks like this:

One can potentially repeat the process for a third session. I had my 75-minute classes take two days and my 50-minute classes take three days for a roughly 700-word essay.
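The two schedules give students the same total writing time, assuming each full class period goes to writing (in practice, some minutes may go to setup, such as getting every device into Lockdown Browser):

```latex
\[
2 \times 75\ \text{min} = 150\ \text{min} = 3 \times 50\ \text{min},
\qquad
\frac{700\ \text{words}}{150\ \text{min}} \approx 4.7\ \text{words per minute}.
\]
```

That pace suggests most of each session is available for rereading, outlining and revising rather than typing alone.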

This format gives students access to everything we want them to have access to while working on their essays and nothing else. While it took lots of troubleshooting to develop the setup (links behave quite differently across operating systems!), this new assignment model offers an important direction worthy of serious exploration.

I have found that this setup preserves much of what we care about most with out-of-class writing assignments: Students can think hard about the topic over an extended period of time, they can make up their minds on some topic through the process of sustained critical reflection and they experience the benefits and rewards of working on a project, stepping away from it and returning to it (while thinking hard about the topic in the background all the while).

Indeed, I have talked with several students who noted that they ended up changing their minds on their topic between Day 1 and Day 2—they (for instance) set out to object to some view, and then they realized (after working hard through the objection on Day 1 and reflecting on it) that what they now wanted to do was defend the original view against the objection that they had developed. Perfect: This is exactly the kind of experience I have always wanted students to have when writing essays (and it’s an experience that students don’t get with a one-day blue-book essay exam).

Because the setup documents each day’s work, it invites wonderful opportunities for students to reflect on their writing process (what are they seeing themselves prioritizing each session, and how/why might they change their approach?). The opportunities for peer review at different stages are also robust.

For those interested, I have made a long (but time-stamped) video that illustrates and explains step by step how to build the assignment in Canvas (it also discusses troubleshooting steps for when a device isn’t getting into Lockdown Browser). The video assumes very minimal knowledge of Canvas and Lockdown Browser, and it describes the very specific ways to hyperlink everything so that students aren’t bumped out of the assignment or given access to external resources (in Canvas—I cannot currently speak to other LMS platforms). The basic technical setup for the assignment is this:

- Create a Canvas quiz for Day 1, create an essay question, link to resources in the question (PDFs must be uploaded with the “Preview Inline” display option to work across devices), require a Lockdown Browser with a password to access it, then publish the quiz.

- Post arbitrary, weightless grades for Day 1 after the first writing session so that students can read (but not edit) what they wrote before Day 2 (students cannot read their submitted work until you post some grade for it).

- Create a Canvas quiz for Day 2 just like Day 1, but this time, in the essay question, link to the Day 1 Canvas quiz (select “external link” rather than “course link,” and copy/paste the Day 1 Canvas quiz link).
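For instructors who prefer to script course setup, the first and third steps above can be roughly approximated with Canvas’s Classic Quizzes REST API. This is a sketch, not the author’s method (the video walks through the Canvas web interface), and it only builds the form fields without sending anything. The host name, course and quiz IDs, and helper names are hypothetical. Requiring Lockdown Browser and its password is configured through the Respondus dashboard inside Canvas, so the sketch leaves that step, the “Preview Inline” PDF option and grade posting to the web interface.

```python
"""Build (but do not send) Canvas Classic Quizzes API form fields for a
multiday in-class essay. All hosts, IDs and helper names are hypothetical."""

from html import escape

CANVAS_HOST = "https://canvas.example.edu"  # hypothetical institution URL


def quiz_fields(title: str, instructions_html: str) -> dict[str, str]:
    """Fields for POST /api/v1/courses/:course_id/quizzes.

    Requiring Lockdown Browser (and its password) is configured separately in
    the Respondus LockDown Browser dashboard, so it is not set here.
    """
    return {
        "quiz[title]": title,
        "quiz[description]": instructions_html,
        "quiz[quiz_type]": "assignment",  # a graded quiz
        "quiz[published]": "false",  # publish after checking every link by hand
    }


def essay_question_fields(day: int, links: dict[str, str]) -> dict[str, str]:
    """Fields for POST /api/v1/courses/:course_id/quizzes/:quiz_id/questions.

    Each resource becomes a plain HTML link that opens in a new tab.
    """
    items = "".join(
        f'<li><a href="{escape(url)}" target="_blank">{escape(label)}</a></li>'
        for label, url in links.items()
    )
    return {
        "question[question_name]": f"Essay, Day {day}",
        "question[question_type]": "essay_question",
        "question[question_text]": f"<p>Resources for today:</p><ul>{items}</ul>",
    }


def quiz_url(course_id: int, quiz_id: int) -> str:
    """Full address of a Classic Quiz, pasted into Day 2 as an external link."""
    return f"{CANVAS_HOST}/courses/{course_id}/quizzes/{quiz_id}"


# Example with made-up IDs: course 101, Day 1 quiz 7.
resources = {
    "Readings (PDF)": f"{CANVAS_HOST}/courses/101/files/555",
    "Essay instructions": f"{CANVAS_HOST}/courses/101/pages/essay-instructions",
}
day1_quiz = quiz_fields("Essay, Day 1", "<p>Outline and begin drafting.</p>")
day1_question = essay_question_fields(1, resources)

# Day 2 repeats Day 1 but adds the full Day 1 quiz URL as an "external" link.
day2_quiz = quiz_fields("Essay, Day 2", "<p>Pick up where you left off.</p>")
day2_question = essay_question_fields(
    2, {**resources, "Your Day 1 writing": quiz_url(101, 7)}
)
```

Using the full quiz URL rather than a course-internal reference mirrors the author’s advice to choose “external link” for the Day 2 link; whether a scripted link behaves identically inside Lockdown Browser is untested here and worth checking on real devices.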

As I mentioned at the beginning of this piece, a successful humanities course helps students cultivate critical, personally enriching and widely applicable skills, and it immerses them in the exploration of perspectives, ideas and modes of thought that can illuminate, challenge and inform their own outlooks. The research I have done over the past three years tells me I can no longer be confident that an intro-level course that nontrivially relies on out-of-class writing assignments can be a fully successful humanities course so understood. Yet a humanities course that fully abandons sustained essay assignments deprives students of the experience that best positions them to fully exercise and develop the skills most central to our disciplines. Something in the direction of this multiday in-class Lockdown Browser essay assignment is worthy of serious consideration.

-

3 Questions for Karina Kogan of Education Dynamics

In the small world of higher education, Karina Kogan and I know many of the same people. An introduction from one of these colleagues got Karina and me talking, and out of those conversations came this Q&A. I asked if Karina would be willing to share some thoughts about her company, EducationDynamics; her role (VP of partnership development); and her career advice for other aspiring leaders in her industry.

Q: How does EducationDynamics work with colleges and universities for online and other academic programs? Where does EducationDynamics fit into the ecosystem of companies that partner with universities?

A: That’s a great question and one I love answering, because this space is full of players, but not all of them are moving higher education forward. At EducationDynamics, we focus on the institutions that are ready to take bold steps forward. Not just in marketing or enrollment, but in how they grow strategically, strengthen their brand and generate revenue in a way that’s built to last.

We want to help institutions stop doing what they’ve always done. We need to serve modern learners, which takes a fundamentally different approach. These students are focused on cost, convenience and career outcomes. They’re not influenced by tradition alone and they don’t respond to disconnected efforts that don’t reflect who they are or where they’re headed.

That’s why we start with research. We don’t push a standard playbook or a prebuilt product. We look at the market, the student behavior, the school’s position, and we use those insights to build strategy that aligns with their enrollment priorities while also reinforcing the institution’s brand and reputation. Because those things are not separate anymore. A school’s ability to grow its revenue is directly connected to how it is perceived in the market. If your brand lacks clarity or credibility, students move on.

Over the past few years, we’ve seen how much student expectations have changed. They’re more in control of their academic path than ever and they defy outdated categories like traditional or nontraditional. At the same time, reputation has become a major factor in their decision-making. That’s why, in 2024, we brought the RW Jones Agency into the EDDY family. They’ve built a national reputation for helping institutions shape perception, elevate their voice and lead through complexity. Bringing that expertise into our ecosystem has allowed us to connect performance with purpose in a way that’s truly differentiated.

And we don’t just build the plan. We execute. We run the campaigns, manage enrollment outreach and support student engagement. It’s a full life cycle approach from awareness to enrollment to retention with everything working together and accountable to outcomes. That’s what it takes to grow in today’s market. It’s not about lead volume alone. It’s about attracting the right students, setting the right expectations and making sure the institution delivers on its promise.

As for where we fit in the ecosystem? Well … honestly, we don’t fit the mold, and we’re not trying to. That’s intentional. Most institutions are still working with a handful of disconnected partners, each focused on one piece of the puzzle. That model no longer works. We bring brand, communications, marketing and enrollment strategy together, because when those areas are aligned, the institution grows in a way that’s both measurable and meaningful.

I’ve been in higher ed for more than 20 years, and this moment feels different. There’s real urgency, but also real openness to change. Our new CEO, Brent Ramdin, has brought a clear and future-facing vision that’s aligned our team and elevated our work. He understands where this sector is headed and what it will take to succeed there.

Q: Tell us about your role at EducationDynamics. What are your primary responsibilities and accountabilities? What career path brought you to your current leadership position within the company?

A: I serve as vice president of partnerships at EducationDynamics, and in many ways, it’s the role I’ve spent my entire career building toward. My focus is on developing strong, strategic relationships with colleges and universities across the country. I work closely with institutional leaders and our internal teams to craft unique solutions that help schools not only meet but exceed their enrollment goals.

It’s a highly collaborative role and one that demands both strategic insight and real operational follow-through. Every institution is different, so the work is never one-size-fits-all. I spend my time listening deeply, understanding the nuances of each partner’s challenges and helping shape the path forward, always with the modern learner in mind.

My career in higher ed started more than 20 years ago at the University of Phoenix, where I held several leadership roles over the course of more than a decade while simultaneously expanding my leadership development through a structured curriculum. That experience gave me a strong foundation in enrollment strategy, team leadership and cross-functional execution. From there, I served as chief partnerships officer at a division of Excelsior University, where I helped institutions launch and scale their first online programs.

That’s what ultimately led me to EducationDynamics, and the transition from the institutional side to the partner side has been both natural and energizing. I understand what it feels like to be inside an institution navigating change, and that perspective helps me show up as a true partner to the schools we work with today.

Q: What advice do you have for early and midcareer professionals interested in eventually moving into a leadership role in a for-profit company in the higher education and digital marketing spaces?

A: I always tell people to be deliberately curious. So, one of the biggest pieces of advice I can offer is this: Develop a deep understanding of both the mission of higher ed and the mechanics of the business side of institutions. The sweet spot in this space, especially in leadership, is being able to translate institutional goals into scalable, market-responsive strategies. That takes more than just technical skill. It takes empathy, agility and a strong sense of purpose.

If you’re coming from the university side, spend time learning how businesses that serve higher ed operate—how they measure success, how they use data, how decisions get made. And if you’re coming from the corporate side, take the time to understand the culture, values and pace of higher education. The people who lead effectively in this space are the ones who can bridge those two worlds with credibility and clarity.

Also, be proactive about expanding your perspective. Step outside your job function. Learn the language of marketing, enrollment, analytics and finance, because in a leadership role, you’ll need to connect all those dots. What’s key for me is that I don’t focus on being the expert in the room; I focus on being useful.

I’ve also found that relationships are everything. Build your network early, nurture it often and don’t be afraid to show up for others before you need anything in return. Platforms like LinkedIn have made that easier than ever, but the real value is in the follow-up, the conversations and the genuine connections.

And finally: Get close to the work. The best leaders I know are the ones who stay connected to the impact their work has on students, on institutions and on outcomes that actually matter. That connection is what makes the hard work worth it, and it’s what keeps your leadership grounded in purpose.

So, if I had to sum it up: Keep learning, look for ways to be of service and stay connected. That mindset opens doors, builds trust and prepares you for leadership in any space, especially in one as dynamic as this one.

-

Beyond Rankings: Redefining University Success in the AI Era

- By Somayeh Aghnia, Co-Founder and Chair of the Board of Governors at the London School of Innovation.

University rankings have long been a trusted, if controversial, proxy for quality. Students use them to decide where to study. Policymakers use them to shape funding. Universities use them to benchmark themselves against competitors. But in an AI-powered world, are these rankings still measuring what matters?

If we’ve learned anything from the world of business over the last decade, it’s this: measuring the wrong things can lead even the most successful organisations astray. The tech industry, in particular, has seen numerous examples of companies optimising for vanity metrics (likes, downloads, growth at all costs) only to realise too late that these metrics didn’t align with real value creation.

The metrics we choose to measure today will shape the universities we get tomorrow.

The Problem with Today’s Rankings

Current university ranking systems, whether national or global, tend to rely on a familiar set of indicators:

- Research volume and citations

- Academic and employer reputation surveys

- Faculty-student ratios

- International staff and student presence

- Graduate salary data

- Student satisfaction and completion rates

While these factors offer a snapshot of institutional performance, they often fail to reflect the complex reality of the world. A university may rise in the rankings even as it fails to respond to student needs, workforce realities, or societal challenges.

For example, graduate salary data may tell us something about economic outcomes, but very little about the long-term adaptability or purpose-driven success of those graduates or their impact on improving society. Research citations measure academic influence, but not whether the research is solving real-world problems. Reputation surveys tend to reward legacy and visibility, not innovation or inclusivity.

In short, they anchor universities to a model optimised for the industrial era, not the intelligence era.

Ready for the AI paradigm?

Artificial intelligence is a paradigm shift. It is changing what we value in every aspect of life, including education and especially higher education: how we define learning, what outcomes we want, and how we measure success.

In a world where knowledge is increasingly accessible, and where intelligent systems can generate information, summarise research, and tutor students, the role of a university shifts from delivering knowledge or developing skills to curating learning experiences that develop human adaptability and prepare students, and society, for uncertainty.

This means the university of the future must focus less on scale, tradition, and prestige, and more on relevance, adaptability, and ethical leadership. These are harder to measure, but far more important.

This demands a new value system. And with that, a new approach to how we assess institutional success.

What Should We Be Measuring?

As we rethink what universities are for, we must also rethink how we assess their impact. Inspired by the “measure what matters” philosophy from business strategy, we need new metrics that reflect AI-era priorities. These could include:

1. Adaptability: How quickly and responsibly does an institution respond to societal, technological, and labour market shifts? This could be measured by:

- Curriculum renewal cycle: Time between major curriculum updates in response to new tech or societal trends.

- New programme launches: Number and relevance of AI-, climate-, or digital economy-related courses introduced in the last 3 years.

- Agility audits: Internal audits of response times to regulatory or industry change (e.g., how quickly AI ethics is integrated into professional courses).

- Employer co-designed modules: % of programmes co-developed with industry or public sector partners.

2. Student agency: Are students empowered to shape their own learning paths, engage with interdisciplinary challenges, and co-create knowledge? This could be measured by:

- Interdisciplinary enrolment: % of students engaged in flexible, cross-departmental study pathways.

- Student-designed modules/projects: Number of modules that allow student-led curriculum or research projects.

- Participation in governance: % of students involved in academic boards, curriculum design panels, or innovation hubs.

- Satisfaction with personalisation: Student survey responses (e.g., NSS, internal pulse surveys) on flexibility and autonomy in learning.

3. AI and digital literacy: To what extent are institutions preparing their staff and their graduates for a world where AI is embedded in every profession? This could be measured by:

- Curriculum integration: % of degree programmes with AI/digital fluency embedded as a learning outcome.

- Staff development: Hours or participation rates in AI-focused CPD for academic and support staff.

- AI usage in teaching and assessment: Extent of AI-enabled platforms, feedback systems, or tutors in active use.

- Graduate outcomes: Employer feedback or destination data reflecting readiness for digital-first/AI-ready roles.

4. Contribution to local and global challenges: Are research efforts aligned with pressing societal needs that advances in AI are amplifying, such as social justice or the AI divide? This could be measured by:

- UN SDG alignment: % of research/publications mapped to UN Sustainable Development Goals.

- AI-for-good projects: Number of AI projects tackling societal or environmental issues.

- Community partnerships: Active partnerships with local authorities, civic groups, or NGOs on social challenges.

- Policy influence: Instances where university research or expertise shapes public policy (e.g. citations in white papers or select committees).

5. Wellbeing and belonging: How well are staff and students supported to thrive, not just perform, within the institution? This could be measured by:

- Staff/student wellbeing index: Use of validated tools like the WEMWBS (Warwick-Edinburgh Mental Wellbeing Scale) in internal surveys.

- Use of support services: Uptake and satisfaction rates for mental health, EDI, and financial support services.

- Sense of belonging scores: Survey data on inclusion, psychological safety, and campus climate.

- Staff retention and engagement: Turnover data, satisfaction from staff pulse surveys, or exit interviews.
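The Warwick-Edinburgh Mental Wellbeing Scale named in the first wellbeing measure has 14 positively worded items, each answered on a 1 to 5 scale, and is scored by summing them, so totals run from 14 to 70. The sketch below scores responses and summarises them as a staff or student wellbeing index; averaging totals across respondents is an assumption made here for illustration, not a method the author prescribes, and the function names are hypothetical.

```python
from statistics import mean

WEMWBS_ITEMS = 14        # number of items on the full scale
LOWEST, HIGHEST = 1, 5   # "None of the time" ... "All of the time"


def wemwbs_total(responses: list[int]) -> int:
    """Sum one respondent's 14 item scores (valid totals run 14 to 70)."""
    if len(responses) != WEMWBS_ITEMS:
        raise ValueError(f"expected {WEMWBS_ITEMS} responses, got {len(responses)}")
    if any(not LOWEST <= r <= HIGHEST for r in responses):
        raise ValueError("each response must be between 1 and 5")
    return sum(responses)


def wellbeing_index(survey: list[list[int]]) -> float:
    """Hypothetical index: the mean WEMWBS total across all respondents."""
    return float(mean(wemwbs_total(r) for r in survey))


# Two made-up respondents: one answers 3 throughout, one answers 5 throughout.
example_index = wellbeing_index([[3] * 14, [5] * 14])
```

Validating every response before summing means a half-completed survey raises an error instead of silently lowering the index.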

These are not soft metrics. They are foundational indicators of whether a university is truly fit for purpose in a volatile and AI-transformed world. You could call this a “University Fitness for Future Index”, a system that doesn’t rank but reveals how well an institution is evolving and, as a result, how well its academics, staff and students are adapting to a rapidly changing world.
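One way to keep a “Fitness for Future Index” from turning back into a league table is to report a profile, one score per dimension, rather than a single number. The sketch below assumes each indicator is min-max scaled to 0 to 1 between a “worst” and a “best” reference value chosen by the institution (lower-is-better indicators, such as the curriculum renewal cycle, simply set best below worst) and that indicators within a dimension carry equal weight. The class, function and example figures are hypothetical, not part of the author’s proposal.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Indicator:
    dimension: str   # e.g. "Adaptability"
    name: str        # e.g. "Curriculum renewal cycle (months)"
    value: float
    worst: float     # reference value scaled to 0.0
    best: float      # reference value scaled to 1.0 (may be below `worst`)

    def __post_init__(self) -> None:
        if self.worst == self.best:
            raise ValueError(f"{self.name}: worst and best must differ")

    def scaled(self) -> float:
        """Min-max scale to 0-1, clipping values outside the reference range."""
        score = (self.value - self.worst) / (self.best - self.worst)
        return min(1.0, max(0.0, score))


def fitness_profile(indicators: list[Indicator]) -> dict[str, float]:
    """Average scaled indicators per dimension; deliberately no overall rank."""
    by_dimension: dict[str, list[float]] = {}
    for ind in indicators:
        by_dimension.setdefault(ind.dimension, []).append(ind.scaled())
    return {dim: mean(scores) for dim, scores in by_dimension.items()}


# Made-up figures for one institution.
profile = fitness_profile([
    Indicator("Adaptability", "Curriculum renewal cycle (months)", 24, worst=60, best=12),
    Indicator("Adaptability", "Employer co-designed programmes (%)", 25, worst=0, best=100),
    Indicator("Wellbeing and belonging", "Mean WEMWBS total", 56, worst=14, best=70),
])
print(profile)  # {'Adaptability': 0.5, 'Wellbeing and belonging': 0.75}
```

Keeping the dimensions separate means a strong result on one (say, wellbeing) cannot mask a weak result on another, which sits closer to the aim of revealing rather than ranking.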

From Status to Substance

Universities must now face the uncomfortable but necessary task of redefining their identity and purpose. Those who focus solely on preserving status will struggle. Those who embrace the opportunity to lead with substance – authenticity, impact, innovation – have a chance to thrive.

AI will not wait for the sector to catch up. Students, staff, employers, and communities are already asking deeper questions: Does this university prepare me for an unpredictable future? Does it care about the society I will enter after graduation? Is it equipping us to lead with courage and ethics in an AI-powered world?

These are the questions that matter. And increasingly, they should be the ones that shape how institutions are evaluated, regardless of their position in the league tables.

It’s time we evolve our frameworks to reflect what really counts, which will increasingly be defined by usefulness, purpose, and trust.

A Call for Courage

We are not simply in an era of change. We are in a change of era.

If we are serious about preparing our learners, and our society, for a world defined by intelligent systems, we must also be serious about redesigning the system that educates them.

That means shifting from prestige to purpose. From competition to contribution. From reputation to relevance.

Because the institutions that will lead the future are not necessarily those that top today’s rankings.

They are the ones willing to ask: what truly matters now, and are we brave enough to measure it?

-

For some, the heat is an access issue

When you think about the accessibility and inclusivity of our learning and working environments, does temperature come to mind?

Discussions about temperature can be complicated because they are quickly confused with preference, meaning that by raising the issue someone risks being viewed as selfish or fussy.

But let’s think of this another way for a moment. I love nuts – others might not like them. But still others are allergic to nuts and could be made seriously ill by them.

The same principle is true about temperature – you might have a preference for warmer or cooler temperatures, but only at extreme levels would this preference become a health issue.

But for colleagues and students with a wide range of health conditions, even small temperature changes are a health issue.

Temperature as an EDI issue

I am surprised how hard it is to find information that openly discusses temperature as an EDI issue. There is plenty of information about employers’ legal responsibility to provide a safe working temperature, along with articles about the harmful effects of extreme temperatures on health and the likelihood that these will increase due to climate change.

However, discussions of how smaller workplace temperature changes can have a disabling effect are generally hidden on pages relevant to specific groups or health conditions.

By smaller temperature changes, I am referring to changes caused by apparently inconsequential things like walking, moving between spaces (e.g. from outside to inside, or between rooms) or being in crowded rooms.

Many people would adapt to these situations automatically, e.g. by taking off a jumper. For others, however, these small everyday increases or decreases in temperature require planning and can cause anxiety, significant discomfort or other health impacts.

Menopause awareness discussions are leading the way in voicing the impact of workplace temperature and employer responsibility. Research highlights the prevalence of heat-related issues linked to menopause and the importance of the ability to control local temperature to help manage symptoms in the work environment.

Significantly, however, studies also voice the shame individuals encounter in living through this normal and widespread experience in the workplace:

I spent most of my time when I used to work with my head in a fan and colleagues laughing at my hot flushes. It was too hot in the office for me and I felt hot sweaty and embarrassed all the time.

However, menopause is far from the only reason a small temperature change might have a significant impact on health and wellbeing. Many health conditions are also affected.

The correlation between temperature and exacerbation of symptoms is perhaps particularly unsurprising with multiple sclerosis – before MRI scanners, observing a patient’s functioning in a hot bath was a key part of the diagnostic process.

Indeed, the MS Trust states that 60-80 per cent of people with MS find their symptoms worsen with even small changes in temperature. As Jennifer Powell succinctly puts it:

Heat is kryptonite to anyone with multiple sclerosis.

What exactly does ‘worsening symptoms’ mean for someone with MS? It might include a deterioration in mobility, balance, vision, and brain functioning:

“Heat makes my nervous system act a bit like a computer with a broken cooling fan. First it acts a bit strange, then programs start crashing and then you get the dreaded ‘blue screen of death’ when all you can do is switch off for a while then start all over again when things have cooled down.”

It may also trigger or exacerbate nerve pain ranging from itching or numbness to stabbing or electric shock sensations. This is a far cry from preference.

But again, MS is not the only health condition affected by small changes in heat. When you start to scratch the surface, the range of conditions that may be affected is startling. They include circulatory, rheumatological (e.g. lupus, rheumatoid arthritis), mental health, neurological (e.g. spinal damage) and neurodevelopmental (e.g. autism) conditions.

Sometimes it is the treatment, rather than the condition itself, that causes difficulties with temperature regulation.

Rebekah Hughes describes how chemotherapy, as well as causing difficulties with temperature regulation, triggered early menopause. She also raises the important point that hot flushes affect individuals differently – for some they might be barely noticeable, for others they severely impact daily life. We need to allow space for differences in individual experience.

What is the cost of ignoring this issue?

From the discussion so far, we can clearly see that temperature affects some staff and students’ experience of normal day-to-day work and study, and impacts their health, wellbeing and sense of belonging. It may also impact performance in high-stakes events.

Typical academic high-stakes events include assessed presentations, interviews, conferences and exams. They often cause temporary stress, which may cause small increases in body temperature.

Individuals usually have reduced personal control to make their own adaptations in these contexts. This raises important questions about the inclusivity of our assessment, recruitment and professional development opportunities. These activities are gatekeeping moments in an individual’s academic and professional journey. However, there is a strong case that the activities and the environments in which they take place may have an unrecognised, yet substantial and possibly disabling, impact on some due to hidden temperature factors.

Next steps?

We might be left thinking that this is an impossible situation – some people need warmer working conditions, others cooler. We might be afraid to start a conversation about temperature for fear of opening a can of worms. However, we do well to remember that some individuals are affected by multiple health conditions – some that make them susceptible to heat, others that make them susceptible to cold. They have to find a way to manage this complexity, and if we are committed to EDI, we must too.

Moreover, the first step is not to jump to a quick-fix solution. Instead, we simply need to be aware that this hidden issue might be affecting a surprising number of our students and colleagues.

We need to continue to develop a compassionate campus culture where colleagues and students feel safe to share the challenges they face and the strategies that help, and a space where they will be heard.

-

Defunding level 7 apprenticeships in health and care may backfire on lower levels

Well, it finally happened. Level 7 apprenticeship funding will disappear for all but a very limited number of younger people from January 2026.

The shift in focus from level 7 to funding more training for those aged 21 and under seems laudable – and of course we all want opportunities for young people – but will it solve or create more problems for the health and social care workforce?

The introduction of foundation apprenticeships, aimed at bringing 16- to 21-year-olds into the workforce, includes health and social care. Offering employer incentives should be a good thing, right?

Care is not merely a job

Of course we need to widen opportunities for careers in health and social care, one of the guaranteed growth industries for the foreseeable future regardless of the current funding challenges. But the association of foundation apprenticeships with those not in education, employment or training (NEETs) gives the wrong impression of the importance of high-quality care for the most vulnerable sectors of our society.

Delivering personal care, being an effective advocate, or dealing with challenging behaviours in high-pressure environments requires a level of skill, professionalism and confidence that should not be incentivised simply as a route out of unemployment.

Employers and education providers invest significant time and energy in crafting a workforce that can deliver values-based care, regardless of the care setting. Care is not merely a job: it’s a vocation that needs to be held in high esteem; otherwise we risk demeaning those who need our care and protection.

There is already a successful suite of apprenticeships leading to careers in health and social care, which the NHS in particular makes good use of. Social care providers (generally smaller employers) report challenges in funding or managing apprenticeships, but there are excellent examples of where this is working well.

So, do we need something at foundation level? How does that align with T level or level 2 apprenticeship experiences? If these pathways already exist and numbers are disappointing, why bring another product onto the market? And are we sending the correct message to the wider public about the value of careers in health and social care?

Career moves

The removal of funding for level 7 apprenticeships threatens the existing career development framework, and it may yet backfire on foundation or level 2 apprenticeships. The opportunity to develop practitioners into enhanced or advanced roles in the NHS is not only critical to the future delivery of health services; it also offers a career development and skills escalator.

Removing this natural progression will lead to role stagnation in the NHS, which threatens workforce retention. We know that the opportunity to develop new skills or move into advanced roles is a significant motivator for employees.

If senior practitioners are not able to move up, out or across into new roles, how will those entering at lower levels advance? Where are the career prospects that the NHS has spent years developing and honing? Although we are still awaiting the outcome of the consultation around the 10-year plan – due for publication this week with revisions to the long-term workforce plan to follow – I feel confident in predicting that we will need new roles or skill sets to successfully deliver care.

So, if no development is happening through level 7 apprenticeships, where is the money going to come from? The NHS has been suggesting that there will be alternative funding streams for some level 7 qualifications, but this is unlikely to offer employers the flexibility or choice they had through the levy.

Could level 6 be next?

Degree apprenticeships at level 6 have also come in for some criticism about the demographics of those securing apprenticeship opportunities and how this has impacted opportunities for younger learners – an extrapolation of the arguments that were made against level 7 courses.

Recent changes to the apprenticeship funding rules and off-the-job training requirements, along with anticipated changes to end-point assessment, could lead to pre-registration apprenticeships in nursing and allied health being deemed out of line with the policy intent because of the regulatory requirements attached to them.

The 2023 workforce plan outlined the need for significant growth in the health and social care workforce, an ambition that probably still holds, although how and when it is achieved may change. Research conducted by the University of Derby and University Alliance demonstrated some of the significant successes associated with apprenticeship schemes in the NHS, but also highlighted some of the challenges. Even with changes to apprenticeship policy, these challenges will not disappear.

Our research also highlighted challenges associated with the bureaucracy of apprenticeships, the need for stronger relationships between employers and providers, the need for flexibility in how the levy is used to build capacity, and the need to promote awareness of the apprenticeship “brand”.

A core feature of workforce development

The security of our future health and social care workforce lies in careers being built from the ground up, regardless of whether career development is funded by individuals themselves or via apprenticeships. However, the transformative nature of apprenticeships, the associated social mobility, the organisational benefits and the drive to deliver high-quality care in multiple settings mean that we should not be quick to walk further away from the apprenticeship model.

Offering apprenticeships at higher (and all) academic levels is critical to delivering high-quality care and encouraging people to remain engaged in the sector.

So, as Skills England start to roll out change, it is crucial that both the NHS and higher education remain close to policymakers, supporting and challenging decisions being made. While there are challenges, these can be overcome or worked through. The solutions arrived at may not always be easy, but they have to be evidence-based and fully focused on the need to deliver a health and social care workforce of which the UK can be proud.

-

Harvard “Indifference” to Jewish Students Violates Law

The Health and Human Services Department announced Monday that Harvard University’s “deliberate indifference” regarding discrimination against Jewish and Israeli students violates federal law.

The HHS Office for Civil Rights said Harvard is violating Title VI of the Civil Rights Act of 1964, which prohibits discrimination based on shared ancestry, including antisemitism.

The finding, similar to one HHS announced against Columbia University in May, adds to the Trump administration’s pressure on both Ivy League institutions to comply with its demands. The administration has already cut off billions in federal funding.

HHS’s Notice of Violation says that a report from Harvard’s own Presidential Task Force on Combating Antisemitism and Anti-Israeli Bias, combined with other sources, “present a grim reality of on-campus discrimination that is pervasive, persistent, and effectively unpunished.”

“Reports of Jewish and Israeli students being spit on in the face for wearing a yarmulke, stalked on campus, and jeered by peers with calls of ‘Heil Hitler’ while waiting for campus transportation went unheeded by Harvard administration,” the Notice of Violation says.

In a statement, Harvard said it is “far from indifferent on this issue and strongly disagrees with the government’s findings.”

“In responding to the government’s investigation, Harvard not only shared its comprehensive and retrospective Antisemitism and Anti-Israeli Bias Report but also outlined the ways that it has strengthened policies, disciplined those who violate them, encouraged civil discourse, and promoted open, respectful dialogue,” the statement said.

In April, the federal government ordered Harvard to audit academic “programs and departments that most fuel antisemitic harassment or reflect ideological capture” and report faculty “who discriminated against Jewish or Israeli students or incited students to violate Harvard’s rules” after the Oct. 7, 2023, start of the ongoing Israel-Hamas war. The government also ordered Harvard to, among other things, stop admitting international students “hostile to the American values and institutions inscribed in the U.S. Constitution and Declaration of Independence, including students supportive of terrorism or anti-Semitism.”

“HHS stands ready to reengage in productive discussions with Harvard to reach resolution on the corrective action that Harvard can take,” HHS Office for Civil Rights director Paula M. Stannard said in a news release.

-

What Legacy Vendors Won’t Tell You About Accreditation Readiness

30% of Institutions Are Not Accreditation Ready — Is Yours Falling Behind?

Nearly 1 in 3 higher education institutions struggle to meet accreditation standards — not because of academic shortcomings, but because they lack true accreditation readiness.

The pressure on QA Directors and Accreditation Heads has never been higher. Regulatory expectations are rising. Documentation demands are expanding. And legacy systems? They’re making it worse — with scattered data, manual tracking, and zero real-time visibility.

Readiness is no longer optional. It’s a year-round necessity. In this blog, we expose what legacy vendors won’t tell you — and what forward-thinking institutions are doing to stay compliant, connected, and confidently audit-ready.

Key Takeaways

- Accreditation readiness means real-time, year-round preparedness — not last-minute chaos.

- Legacy tools create silos, delays, and compliance risks.

- Modern systems support:
  - Workflow automation
  - Curriculum mapping
  - Faculty credential tracking

- Institutions that modernize stay audit-ready and aligned with accreditors.

Why Accreditation Readiness Matters More Than Ever

What is accreditation readiness, and why is it important?

Accreditation readiness refers to an institution’s ability to maintain full, ongoing compliance with accreditor standards — not just during evaluation windows, but all year round. It means that your documentation, outcomes, faculty credentials, and curriculum alignment are always audit-ready, accessible, and defensible.

Why this matters now:

- Accreditors expect more frequent reporting, stronger evidence, and clearer learning outcomes.

- Regulatory pressure is rising. Each accrediting body, from CHED and PAASCU to ABET and AUN-QA, has its own evolving set of standards.

- Faculty are stretched thin. Tracking credentials, exams, and program goals by hand only adds to their fatigue.

- Legacy systems can’t keep up. Siloed tools and spreadsheets lack visibility, automation, and built-in compliance support.

Institutions that treat accreditation as an “every few years” project are exposed to delays, rejections, and lost funding. But those with modern systems in place for accreditation workflow automation, curriculum mapping, and faculty credential tracking are equipped to respond instantly, with confidence.

Looking to align outcomes with accreditation standards? Explore our Outcome-Based Education Software.

The Hidden Flaws in Legacy Accreditation Systems

What are the limitations of legacy accreditation systems?

Most traditional accreditation systems were built for a different era — one with fewer programs, simpler standards, and slower timelines. Today, those same systems are liabilities. Here’s what QA Directors and Accreditation Heads face when relying on outdated tech:

What legacy systems don’t tell you:

- No real-time visibility. You chase files instead of monitoring readiness on a dashboard.

- Faculty data, outcomes, and evidence are scattered across error-prone spreadsheets.

- No automation. Workflows, reminders, and audit logs are all handled manually.

- Evidence is buried in inboxes, shared folders, and obsolete systems.

- Compliance guesswork. Without standards mapping or role-based controls, compliance depends on memory rather than process.

Legacy tools make you work harder just to keep up; they don’t keep you ready.

Modern institutions need more than storage — they need a college accreditation system that actively supports continuous readiness, not just static compliance.

How Accreditation Gets Delayed — And Why

Even well-run institutions miss deadlines, not for lack of quality but because their systems fail them.

What causes delays or failures in accreditation audits?

Most accreditation delays don’t happen at the last mile — they happen months before, in the day-to-day workflows that no one’s watching. Here’s how it breaks down:

Key reasons accreditation gets delayed:

- Evidence is incomplete or outdated. Faculty credential tracking, course assessments, and program reviews aren’t updated regularly — so you scramble when auditors request proof.

- Stakeholders aren’t aligned. QA teams, deans, and faculty operate in silos. Without a unified accreditation management system, responsibilities fall through the cracks.

- Curriculum data doesn’t align with outcomes. When you don’t have built-in curriculum mapping for accreditation, proving outcome achievement becomes a manual and inconsistent task.

- No audit trail. Legacy systems don’t offer version control, timestamped approvals, or centralized workflows — which leads to missing context during audits.

- Everything is reactive. Institutions focus on audit prep only when the review date is near — not realizing that accreditation readiness requires year-round activity and automation.

Delays aren’t just inconvenient; they damage institutional credibility and burden your QA teams with avoidable stress. Intelligent, automated accreditation software helps you stay one step ahead instead of one step behind.

What Modern Vendors Offer That Legacy Vendors Don’t

Most legacy systems weren’t built for today’s accreditation requirements.

They can’t keep up with changing standards, growing documentation demands, and the pressure QA teams face when answering to multiple campuses and accrediting bodies.

Today’s accreditation management systems are different. They are designed for continuous improvement, not one-off compliance, and they don’t just store data; they let you track it in real time.

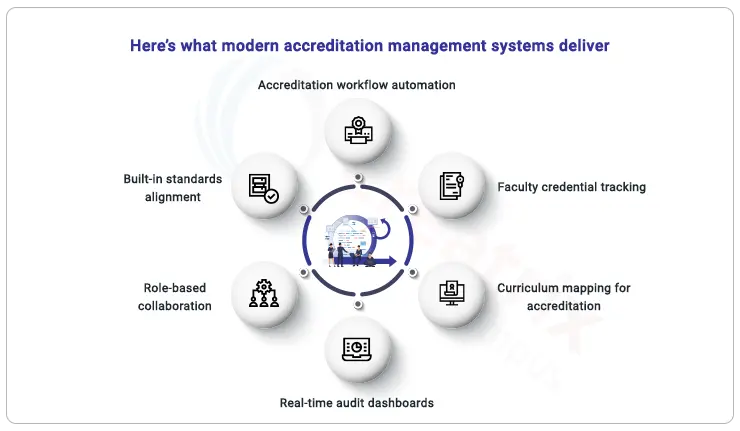

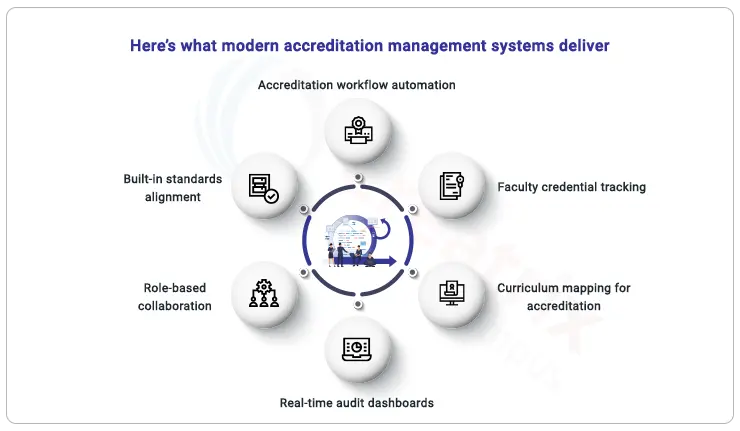

Here’s what modern accreditation management systems deliver:

- Workflow automation that triggers tasks, approvals, and deadline alerts to prevent mistakes.

- Centralized records of qualifications, certifications, and teaching assignments, ensuring faculty meet program standards across cycles.

- Curriculum mapping for accreditation that demonstrates compliance by linking learning outcomes to courses, assessments, and standards.

- Live audit dashboards that show accreditation readiness by program, department, and standard, with quick access to evidence.

- Managed access and verifiable updates that help QA teams, deans, and faculty collaborate.

- Built-in standards alignment, with CHED, PAASCU, ABET, and AUN-QA requirements mapped and monitored.

Modern platforms are not just upgrades; they are strategic tools for compliance, quality assurance, and institutional growth.

Want consistent curriculum, outcomes, and standards? Visit our Curriculum Mapping Tools.

Need to simplify faculty evaluations and credential tracking? Explore our Faculty Management System.

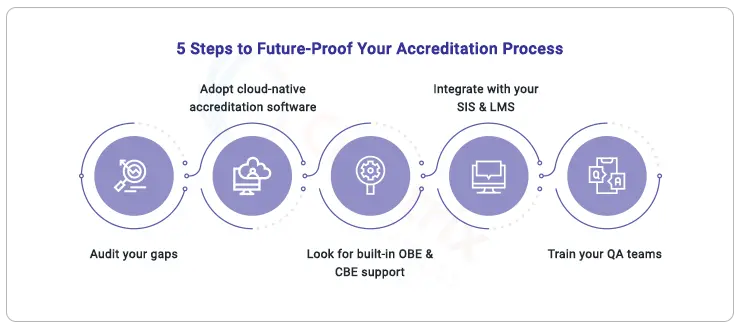

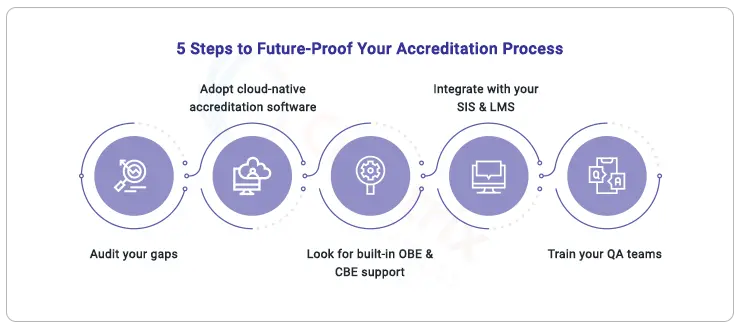

How to Future-Proof Your Accreditation Process

How can institutions upgrade from legacy to modern accreditation systems?

- Audit your gaps: identify legacy systems that slow down your accreditation process or force manual work.

- Adopt cloud-native accreditation software: choose a secure, scalable accreditation solution built for higher education.

- Require built-in OBE/CBE support: align with CHED, ABET, and PAASCU outcomes and standards.

- Integrate your SIS/LMS: make accreditation automation seamless and avoid redundant data entry.

- Train QA teams: give staff tools for faculty credential tracking, task management, and real-time insights.

Final Thoughts — Don’t Let Legacy Hold You Back

What’s the risk of continuing with legacy systems for accreditation?

Relying on old tools is not just a waste of time; it is risky. Missed deadlines, failed audits, compliance lapses, and overstretched QA teams are only the beginning.

Legacy systems were built for static reporting. Accreditation readiness today requires systems that stay flexible, visible, and in sync across every program, campus, and accreditor.

Modern universities are adopting integrated, automated, and standards-aligned accreditation management systems out of necessity, not preference.

Don’t wait until the next audit to find the gaps. Get Creatrix Campus’s AI-rich accreditation system before your old one slows you down.
