It was my pleasure last week to deliver a mini-workshop at the Independent Schools of New Zealand Annual Conference in Auckland. I intended it to be more dialogue than monologue, and I’m not sure it landed quite where I had hoped. It is an exciting time to be thinking about educational governance, and my key message was ‘don’t get caught up in the hype’.

Understanding media representations of “Artificial Intelligence”.

We need to be wary of the hype around the term AI, Artificial Intelligence. I do not believe there is such a thing. Certainly not in the sense in which the popular press purports it to exist, or in which it is deemed to have sprouted into existence with the advent of ChatGPT. What there is, is a clear exponential increase in the capabilities being demonstrated by computational algorithms. These computational capabilities do not represent intelligence in the sense of sapience or sentience. They are not informed by the senses derived from an organic nervous system. Even as we perceive these systems to mimic human behaviour, it is important to remember that they are machines.

This does not negate the criticisms of those researchers who argue that there is an existential risk to humanity if A.I. is allowed to continue to grow unchecked in its capabilities. The language in this debate presents a challenge too. We need to acknowledge that intelligence means something different to the neuroscientist and the philosopher, and to the psychologist and the social anthropologist. These semiotic discrepancies become unbridgeable when we start to talk about consciousness.

In my view, there are no current Theory of Mind applications… yet. Sophia (Hanson Robotics) is designed to emulate human responses, but it does not display either sapience or sentience.

What we are seeing, in 2023, is the extension of the ‘memory’, or scope of data inputs, into larger and larger multi-modal language models, which are programmed to see everything as language. The emergence of these polyglot super-savants is remarkable, and we are witnessing the unplanned and (in my view) cavalier mass deployment of these tools.

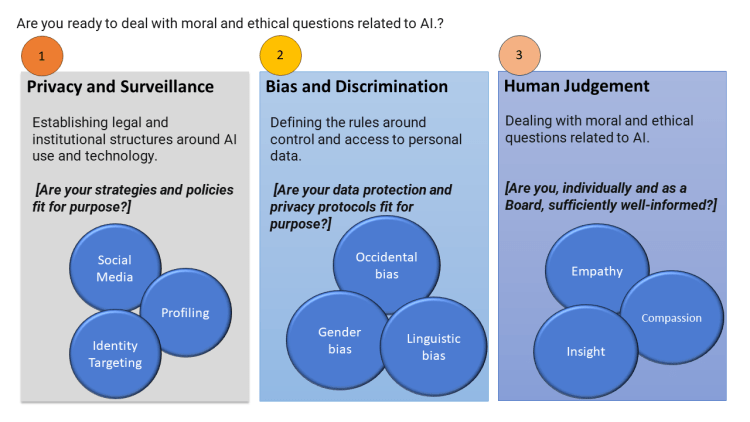

Ethical spheres for Governing Boards to reflect on in 2023

Ethical and Moral Implications

Educational governing bodies need to stay abreast of the societal impacts of Artificial Intelligence systems as they become more pervasive. This is more important than having a detailed understanding of the underlying technologies or the way each school’s management decides to establish policies. Boards are required to ensure such policies are in place, are realistic, can be monitored, and are reported on.

Policies should already exist around the use of technology in supporting learning and teaching, and these can, and should, be reviewed to ensure they stay current. There are also policy implications for admissions, recruitment and selection processes (of both staff and students). Where A.I. is being used, Boards need to ensure that, wherever possible, no systemic bias is evident. I believe Boards would benefit from devising their own scenarios and discussing them periodically.