I’ll admit that I use AI. I’ve asked it to help me figure out challenging Excel formulas that otherwise would have taken me 45 minutes and a few tutorials to troubleshoot. I’ve used it to help me analyze or organize massive amounts of information. I’ve even asked it to help me devise a running training program that aligns with my goals and fits within my schedule. AI is a fantastic tool, and that’s the point. It’s a tool, not a replacement for thinking.

As AI tools become more capable, more intuitive, and more integrated into our daily lives, I’ve found myself wondering: Are we growing too dependent on AI to do our thinking for us?

This question isn’t just philosophical. It has real consequences, especially for students and young learners. A recent study published in the journal Societies reports that people who used AI tools more frequently showed weaker critical thinking performance. In fact, “whether someone used AI tools was a bigger predictor of a person’s thinking skills than any other factor, including educational attainment.” That’s a staggering finding, because it suggests that using AI might not be just a shortcut. It could be a cognitive detour.

The atrophy of the mind

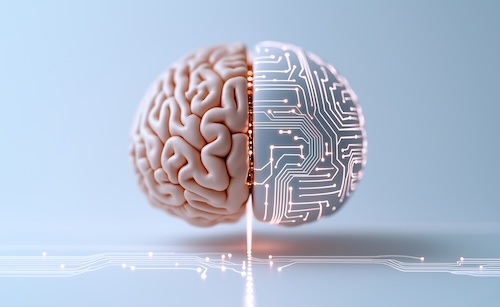

The term “digital dementia” has been used to describe the deterioration of cognitive abilities as a result of over-reliance on digital devices. The phrase was originally associated with excessive screen time and memory decline, but it has found new relevance in the era of generative AI. When we depend on a machine to generate our thoughts, answer our questions, or write our essays, what happens to the neural pathways that govern our own critical thinking? And will the coming era of agentic AI accelerate this decline?

Cognitive function, like physical fitness, follows the rule of “use it or lose it.” Just as muscles weaken without regular use, the brain’s ability to evaluate, synthesize, and critique information can atrophy when not exercised. This is especially concerning in the context of education, where young learners are still building those critical neural pathways.

In short: Students need to learn how to think before they delegate that thinking to a machine.

Can you still think critically with AI?

Yes, but only if you’re intentional about it.

AI doesn’t relieve you of the responsibility to think; in many cases, it demands even more critical thinking. AI tools hallucinate, make false claims with confidence, and can be misleading. If you blindly accept AI’s output, you’re not saving time; you’re surrendering clarity.

Using AI effectively requires discernment. You need to know what you’re asking, evaluate what you’re given, and verify the accuracy of the result. In other words, you need to think before, during, and after using AI.

The “source, please” problem

One of the simplest ways to teach critical thinking is also the most annoying (just ask my teenage daughter). When she presents a fact or claim that she saw online, I respond with some version of: “What’s your source?” It drives her crazy, but it forces her to dig deeper, check assumptions, and distinguish between fact and fiction. It’s an essential habit of mind.

But here’s the thing: AI doesn’t always give you the source. And when it does, the citation is sometimes wrong, or the source isn’t reputable. Getting a reliable answer, especially on complicated topics, can require a deeper dive (and a few more prompts). AI often provides quick, confident answers that fall apart under scrutiny.

So why do we keep relying on it? Why are AI responses allowed to settle arguments, or serve as “truth” for students when the answers may be anything but?

The lure of speed and simplicity

It’s easier. It’s faster. And let’s face it: It feels like thinking. But there’s a difference between getting an answer and understanding it. AI gives us answers. It doesn’t teach us how to ask better questions or how to judge when an answer is incomplete or misleading.

This process of cognitive offloading (where we shift mental effort to a device) can be incredibly efficient. But if we offload too much, too early, we risk weakening the mental muscles needed for sustained critical thinking.

Implications for educators

So, what does this mean for the classroom?

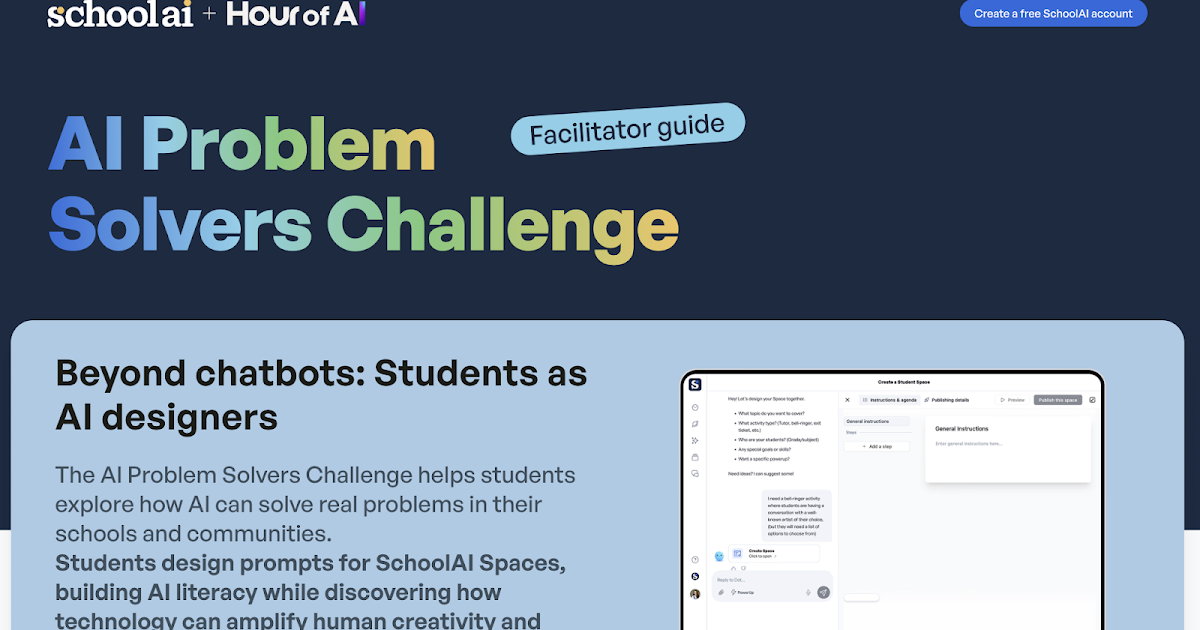

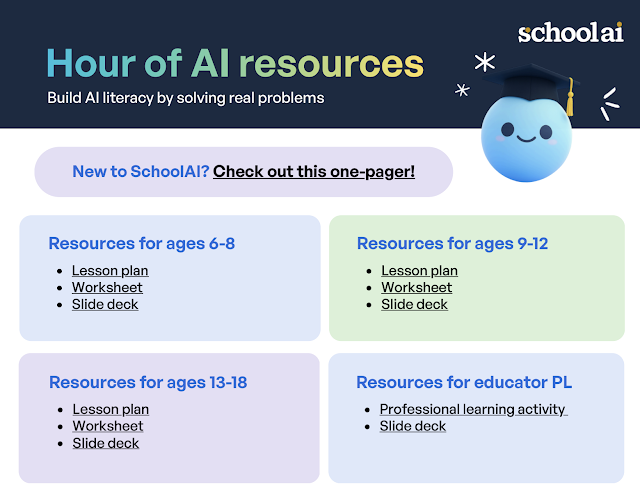

First, educators must be discerning about how they use AI tools. These technologies aren’t going away, and banning them outright is neither realistic nor wise. But they must be introduced with guardrails. Students need explicit instruction on how to think alongside AI, not instead of it.

Second, teachers should emphasize the importance of original thought, iterative questioning, and evidence-based reasoning. Instead of asking students to simply generate answers, ask them to critique AI-generated ones. Challenge them to fact-check, source, revise, and reflect. In doing so, we keep their cognitive skills active and growing.

And finally, for young learners, we may need to draw a harder line. Students who haven’t yet formed the foundational skills of analysis, synthesis, and evaluation shouldn’t be skipping those steps. Just as you wouldn’t hand a calculator to a child who hasn’t yet learned to add, we shouldn’t hand generative AI tools to students who haven’t yet learned how to write, question, or reason.

A tool, not a crutch

AI is here to stay. It’s powerful, transformative, and, when used well, can enhance our work and learning. But we must remember that it’s a tool, not a replacement for human thought. The moment we let it think for us is the moment we start to lose the capacity to think for ourselves.

If we want the next generation to be capable, curious, and critically minded, we must protect and nurture those skills. And that means using AI thoughtfully, sparingly, and always with a healthy dose of skepticism. AI has clearly proven its staying power, so it’s in all our best interests to learn to adapt. But let’s adapt with intentionality, without sacrificing our critical thinking skills or succumbing to any form of digital dementia.

Laura Hakala, Magic EdTech

Laura Hakala is the Director of Online Program Design and Efficacy for Magic EdTech. With nearly two decades of leadership and strategic innovation experience, Laura is a go-to resource for content, problem-solving, and strategic planning. Laura is passionate about DE&I and is a fierce advocate, dedicated to making meaningful changes. When it comes to content management, digital solutions, and forging strategic partnerships, Laura’s expertise shines through. She’s not just shaping the future; she’s paving the way for a more inclusive and impactful tomorrow.