According to data highlighted by Immigration New Zealand (INZ), the government agency responsible for managing the country’s immigration system, the first 10 months of 2025 saw 55,251 study visa applications, down from 58,361 in the same period last year.

However, approval rates have risen sharply. In 2024, INZ received 58,361 applications, approving 42,724 (81.5% of those decided) and declining 9,161 (17.5%). In 2025, despite fewer applications at 55,251, approvals rose to 43,203 (88.2%), with 5,317 declined (10.9%).

NZ sets itself apart from other key study destinations

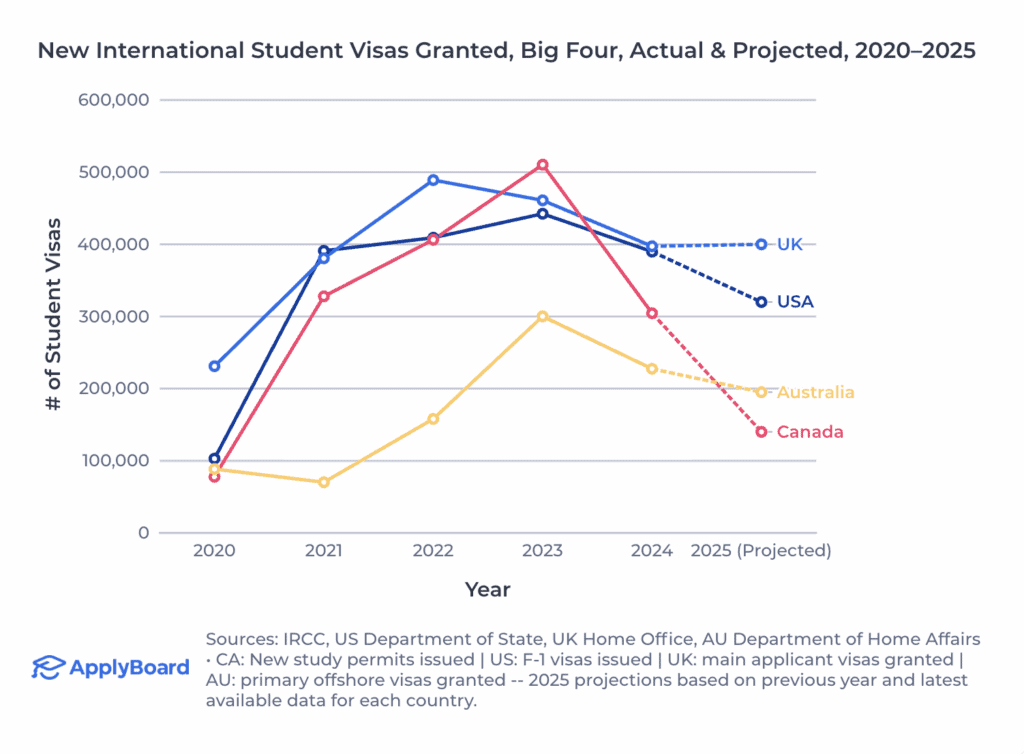

Even as major anglophone study destinations take a cautious approach to international education policy, New Zealand is aiming to be an outlier in the market.

The country is looking to boost international student enrolments from 83,700 to 119,000 by 2034 and double the sector’s value to NZ$7.2 billion (£3.2bn) under the recently launched International Education Going for Growth Plan.

This month, new rules came into effect allowing eligible international tertiary and secondary students whose visas are granted from November 3 onwards to work up to 25 hours a week, up from 20. A new short-term work visa for some vocational graduates is also expected to be introduced soon.

“As part of the International Education Going for Growth Plan, changes were announced to immigration settings to support sustainable growth and enhance New Zealand’s appeal as a study destination. These changes aim to maintain education quality while managing immigration risk,” Celia Coombes, director of visas for INZ, told The PIE.

“Immigration New Zealand (INZ) and Education New Zealand (ENZ) work in close partnership to achieve these goals.”

Why the drop in study visa applications?

While study visa approval rates have climbed over the past year, a stark contrast to the Covid period, when universities across New Zealand faced heavy revenue losses as international student numbers fell, stakeholders point to a mix of factors behind the drop in new applications.

“There has been an increase in approvals, but overall, a slight decrease in the number of students applying for a visa. However, interest in New Zealand continues to grow,” stated Coombes, who added that the number of individuals holding a valid study visa rose to 58,192 in August 2025, up from 45,512 a year earlier.

“We have more students applying for Pathway Visas year on year, which means more visas granted for longer periods, and less ‘year by year’ applications.”

While multi-year pathway visas can cover a full planned study path, reducing the need for repeated applications, Richard Kensington, an NZ-based international education consultant, says refinements could make the route more effective in attracting international students.

“The Pathway Visa, introduced nearly a decade ago as a trial, has never been fully expanded. Although reviews are complete and the scheme is set to become permanent, no additional providers have been given access,” stated Kensington.

“Simple refinements — such as allowing pathways to a broad university degree rather than a specific named programme — would encourage more students to utilise this route.”

Kensington also links the drop to New Zealand’s underdeveloped school sector and the slower recovery of its vocational education sector.

“The school sector remains one of New Zealand’s most untapped international education markets. Demand is growing, especially from families where a parent wishes to accompany the student. The Guardian Parent Visa makes that a viable option,” stated Kensington.

“Vocational education hasn’t rebounded in the same way. The loss of work rights for sub-degree diplomas has significantly reduced demand from traditional migration markets.”

New Zealand’s vocational education woes

Just this year, the New Zealand government announced the disestablishment of Te Pūkenga, the country’s largest vocational education provider, formed through the merger of 16 Institutes of Technology and Polytechnics.

It is being replaced by 10 standalone polytechnics, following concerns that the model had become too costly and centralised.

“Te Pūkenga’s rise and fall created real confusion offshore. With standalone polytechnics returning, we should see greater stability from 2026 onwards,” Kensington added.

“Many polytechnics are now relying on degree and master’s programmes, putting them in more direct competition with universities.”

Applications fall in China, climb in India

According to INZ data on applications decided in 2024 and 2025, including those submitted in earlier years, countries such as India (+2.7%), Nepal (+26.8%), Germany (+5.2%), and the Philippines (+7.8%) have seen growth in the number of study visas approved.

Meanwhile, many East and Southeast Asian markets have recorded year-on-year declines, most notably the largest sending market, China, which dropped by 9.9%.

The data shows that 16,568 study visas were approved for China in January–October 2024, falling to 14,929 in the same period of 2025, though it remains the largest source country.

Other markets such as Japan (-9.7%), South Korea (-24.8%), and Thailand (-33.7%) also saw significant declines.

According to Frank Xing, director of marketing and operations at Novo Education Consulting, the slowdown from China is clear, with weaker student interest reflected in both the consultancy’s own enquiries and feedback from partners, and echoed by some New Zealand institutions.

“It’s a mixed picture — a few schools, particularly in the secondary sector, are still doing well, but many providers are starting to feel the impact,” stated Xing, who believes several factors are driving the slowdown.

“The first is the weaker Chinese economy — many families have been affected by job losses or lower business income. In the past, property assets often helped families fund overseas study, but the real estate downturn has reduced that flexibility,” he added, also noting New Zealand’s own unemployment challenges and competition from lower-cost destinations.

“We’ve actually seen some students abandon their New Zealand study plans or switch to more affordable destinations such as Malaysia or parts of Europe.”

According to Xing, while China remains one of New Zealand schools’ strongest markets, this could change as Chinese families place greater emphasis on career outcomes — an area where New Zealand’s slower job market remains a challenge.

He added that New Zealand’s role as the 2025 Country of Honour at China’s premier education expo could help raise awareness among prospective students.

False applications remain a major concern

For Education New Zealand and INZ, the more immediate challenge now lies in addressing fraudulent applications, according to Coombes.

“New Zealand sees a lot of false financial documents. To address this and help ensure students have the money they need to live and study in New Zealand, we are improving processes to maintain integrity and streamline processing,” stated Coombes.

“This includes expanding the Funds Transfer Scheme, where students deposit their living costs in New Zealand, and they are released monthly.”

According to Kensington, some agencies across South Asia and likely parts of Africa, where New Zealand has limited representation, may not meet required standards, creating challenges. However, he believes improved processing is reducing the impact.

“INZ only accepts financial evidence from specific banks in some jurisdictions. Student loans must be secured; unsecured loans aren’t accepted even from major banks,” stated Kensington.

“It’s hard to say whether fraud is increasing, but the rise in high-quality applications means INZ can process many files quickly and devote more time to forensic checks where needed.”