Get stories like this delivered straight to your inbox. Sign up for The 74 Newsletter

This spring, the National Institutes of Health quietly began terminating programs at scores of colleges that prepared promising undergraduate and graduate students for doctoral degrees in the sciences.

At least 24 University of California and California State University campuses lost training grants that provided their students with annual stipends of approximately $12,000 or more, as well as partial tuition waivers and travel funds to present research at science conferences. The number of affected programs is likely higher, as the NIH would not provide CalMatters a list of all the cancelled grants.

Cal State San Marcos, a campus in north San Diego County with a high number of low-income learners, is losing four training grants worth about $1.8 million per year. One of the grants, now called U-RISE, had been awarded to San Marcos annually since 2001. San Marcos students with U-RISE stipends were often able to forgo part-time jobs, which allowed them to concentrate on research and building the skills needed for a doctoral degree.

The cuts add to the hundreds of millions of dollars of grants the agency has cancelled since President Donald Trump took office for a second term.

To find California campuses that lost training grants, CalMatters looked up known training grants in the NIH search tool to see whether those grants were still active. According to a notice on another NIH webpage, if a grant’s award number leads to a broken link, that grant has been terminated.

The NIH web pages for the grants CalMatters looked up, including U-RISE, are no longer accessible. Some campuses, including San Marcos, Cal State Long Beach, Cal State Los Angeles and UC Davis, have updated their own websites to state that the NIH has ended doctoral pathway grants.

“We’re losing an entire generation of scholars who wouldn’t have otherwise gone down these pathways without these types of programs,” said Richard Armenta, a professor of kinesiology at San Marcos and the associate director of the campus’s Center for Training, Research, and Educational Excellence, which operates the training grants.

At San Marcos, 60 students who were admitted into the center lost grants with stipends, partial tuition waivers and money to travel to scientific conferences to present their findings.

From loving biology to wanting a doctoral degree

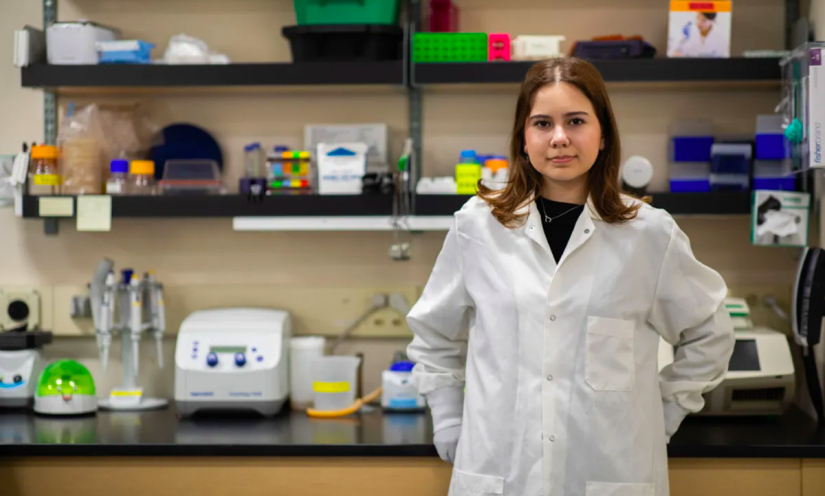

Before the NIH terminations, Marisa Mendoza, a San Marcos undergraduate, received two training grants. As far back as middle school, Mendoza’s favorite subjects were biology and chemistry.

To save money, she attended Palomar College, a nearby community college where she began to train as a nurse. She chose that major because it would allow her to focus on the science subjects she loved. But soon Mendoza realized she wanted to do research rather than treat patients.

At Palomar, an anatomy professor introduced her to the NIH-funded Bridges to the Baccalaureate, a training grant for community college students to earn a bachelor’s and pursue advanced degrees in science and medicine.

“I didn’t even know what grad school was at the time,” she said. Neither of her parents finished college.

The Bridges program connected her to Cal State San Marcos, where she toured different labs to find the right fit. At the time she was in a microbiology course and found a lab focused on bacteria populations in the nearby coastal enclaves. The lab was putting into practice what she was learning in the abstract. She was hooked.

“It just clicked, like me being able to do this, it came very easily to me, and it was just something that I came to be very passionate about as I was getting more responsibility in the lab,” Mendoza said.

From Palomar she was admitted as a transfer student to San Marcos and more selective campuses, including UCLA and UC San Diego. She chose San Marcos, partly to live at home but also because she loved her lab and wanted to continue her research.

She enrolled at San Marcos last fall and furthered her doctoral journey by receiving the U-RISE grant. It was supposed to fund her for two years. The NIH terminated the grant March 31, stripping funds from 20 students.

For a school like San Marcos, where more than 40% of students are low-income enough to receive federal financial aid called Pell grants, the loss of the NIH training awards is a particular blow to the aspiring scientists.

The current climate of doctoral admissions is “definitely at a point where one needs prior research experience to be able to be competitive for Ph.D. programs,” said Elinne Becket, a professor of biological sciences at Cal State San Marcos who runs the microbial ecology lab where Mendoza and other students hone their research for about 15 hours a week.

San Marcos doesn’t have much money to replace its lost grants, which means current and future San Marcos students will “100%” have a harder time entering a doctoral program, Becket added. “It keeps me up at night.”

Research is ‘a missing piece’

In a typical week in Becket’s lab, Mendoza will drive to a nearby wetland or cove to retrieve water samples — part of an ongoing experiment to investigate how microbial changes in the ecosystem are indications of increased pollution in sea life and plants. Sometimes she’ll wear a wetsuit and wade into waters a meter deep.

The next day she’ll extract the DNA from bacteria in her samples and load those into a sequencing machine. The sequencer, which resembles a small dishwasher, packs millions or billions of pieces of DNA onto a single chip that’s then run through a supercomputer a former graduate student built.

“Once I found research, it was like a missing piece,” Mendoza, a Pell grant recipient, said through tears during an interview at Cal State San Marcos. Research brought her joy and consumed her life “in the best way,” she added. “It’s really unfortunate that people who are so deserving of these opportunities don’t get to have these opportunities.”

The origins of the San Marcos training center date back to 2002. Through it, more than 160 students have either earned or are currently pursuing doctoral degrees at U.S. universities.

The grant terminations have been emotionally wrenching. “There had been so many tears in my household that my husband got me a puppy,” said Denise Garcia, the director of the center and a professor of biological sciences.

Garcia recalls that in March she was checking a digital chat group on Slack with many other directors of U-RISE grants when suddenly the message board lit up with updates that their grants were gone. At least 63 schools across the country lost their grants, NIH data show.

In the past four years of its U-RISE grant, the center has reported to the NIH that 83% of its students entered a doctoral program. That exceeds the goal set in the campus’s grant, which called for 65% of students to enter doctoral programs.

Mendoza is grateful: She was one of two students to win a campus scholarship that’ll defray much, but not all, of the costs of attending school after losing her NIH award. That, plus a job at a pharmacy on weekends, may provide enough money to complete her bachelor’s next year.

Others are unsure how they’ll afford college while maintaining a focus on research in the next school year.

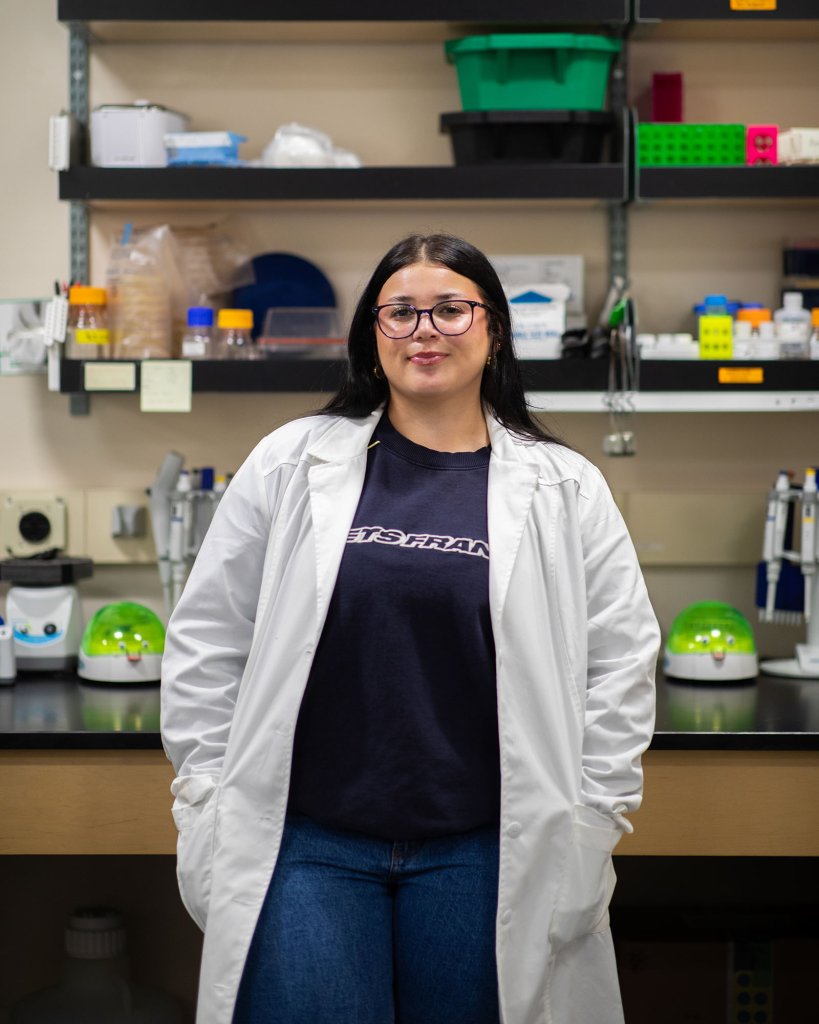

“You work so hard to put yourself in a position where you don’t have to worry, and then that’s taken away from you,” said Camila Valderrama-Martínez, a first-year graduate student at San Marcos who also earned her bachelor’s there and works in the same lab as Mendoza. She was in her first year of receiving the Bridges to the Doctorate grant, which is meant for students in master’s programs who want to pursue a biomedical-focused doctoral degree. The grant came with a stipend of $26,000 annually for two years, plus a 60% tuition waiver and money to attend conferences.

She can get a job, but that “takes away time from my research and my time in lab and focusing on my studies and my thesis.” She relies solely on federal financial aid to pay for school and a place to live. Getting loans, often anathema for students, seems like her only recourse. “It’s either that or not finish my degree,” she said.

Terminated NIH grants in detail

These grant cancellations are separate from other cuts at the NIH since Trump took office in January, including multi-million-dollar grants for vaccine and disease research. They’re also on top of an NIH plan to dramatically reduce how much universities receive from the agency to pay for maintaining labs, other infrastructure and labor costs that are essential for campus research. California’s attorney general has joined other states led by Democrats in suing the Trump administration to halt and reverse those cuts.

In San Marcos’ case, the latest U-RISE grant ran its full five-year term, but it wasn’t renewed, even though the renewal application received a high score from an NIH grant committee.

Armenta, the associate director at the Cal State San Marcos training center, recalled that his NIH program officer said that though nothing is certain, he and his team should be “cautiously optimistic that you would be funded again given your score.” That was in January. Weeks later, NIH discontinued the program.

He and Garcia shared the cancellation letters they received from NIH. Most made vague references to changes in NIH’s priorities. However, one letter for a specific grant program cited a common reason why the agency has been cancelling funding: “It is the policy of NIH not to prioritize research programs related to Diversity (sic), equity, and inclusion.”

That’s a departure from the agency’s emphasis on developing a diverse national cadre of scientists. As recently as February, the application page for that grant said “there are many benefits that flow from a diverse scientific workforce.”

Future of doctoral programs unclear

Josue Navarrete graduated this spring from Cal State San Marcos with a degree in computer science. Unlike the other students interviewed for this story, Navarrete, who uses they/them pronouns, was able to complete both years of their NIH training grant and worked in Becket’s lab.

But Vanderbilt University, which placed Navarrete on a waitlist for a doctoral program, ultimately denied them admission because the program had to shrink its incoming class amid uncertainty over the Trump administration’s efforts to slash funding, they said. Later, Navarrete met a professor from Vanderbilt at a conference who agreed to review their application. The professor said that in any other year, Navarrete would have been admitted.

The setback was heartbreaking.

“I’m gripping so hard to stay in research,” Navarrete said. With doctoral plans delayed, they received a job offer from Epic, a large medical software company, but turned it down. “They wanted me to be handling website design and mobile applications, and that’s cool. It’s not for me.”

Valderrama-Martínez cited Navarrete’s story as she wondered whether doctoral programs at universities will have space for her next year. “I doubt in a year things are going to be better,” she said.

She still looks forward to submitting her applications.

So does Mendoza. She wants to study microbiology — the research bug that bit her initially and brought her to San Marcos. Eventually she hopes to land at a private biotech firm and work in drug development.

“Of course I’m gonna get a Ph.D., because that just means I get to do research,” she said.

This article was originally published on CalMatters and was republished under the Creative Commons Attribution-NonCommercial-NoDerivatives license.