Every week, my 7-year-old brings home worksheets with math problems and writing assignments. But what captivates me is what he creates on the back once the assigned work is done: power-ups for imaginary games, superheroes with elaborate backstories, landscapes that evolve weekly. He exists in a beautiful state of discovery and joy, in the chrysalis before transformation.

My son shows me it’s possible to discover something remarkable when we expand what we consider possible. Yet in education, a system with 73% public dissatisfaction and just 35% satisfied with K-12 quality, we hit walls repeatedly.

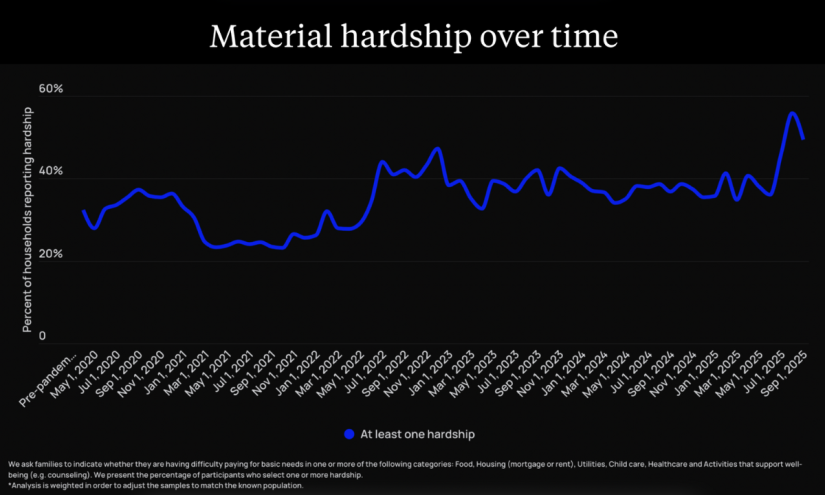

This inertia contributes to our current moment: steep declines in reading and math proficiency since 2019, one in eight teaching positions unfilled or filled by uncertified teachers, and growing numbers abandoning public education.

Contrast this with artificial intelligence’s current trajectory.

AI faces massive uncertainty. Nobody knows where it leads or which approaches will prove most valuable. Ethical questions around bias, privacy and accountability remain unresolved.

Yet despite uncertainty — or because of it — nearly every industry is doubling down. Four major tech firms planned $315 billion in AI spending for 2025 alone. AI adoption surged from 55% to 78% of organizations in one year, with 86% of employers expecting AI to transform their businesses by 2030.

This is a gold rush. Entire ecosystems are seeing transformational potential and refusing to be left behind. Organizations invest not despite uncertainty, but because standing still carries greater risk.

There’s much we can learn from the AI-fueled momentum.

To be clear, this isn’t an argument about AI’s merits. This is a conversation about what becomes possible when people come together around shared aspirations to restore hope, agency and possibility to education. AI’s approach reveals five guiding principles that education leaders should follow:

1. Set a Bold Vision: AI leaders speak in radical terms. Education needs such bold aspirations, not five percent improvements. Talk about 100% access, 100% thriving, 100% success. Young people are leading by demanding approaches that honor their agency, desire for belonging, and broad aspirations. We need to follow their lead.

2. Play the Long Game: Companies make massive investments for transformation they may not see for years. Education must embrace the same long-term thinking: investing in teacher development programs that mature over years, reimagining curricula for students’ distant futures, building systems that support sustainable excellence over immediate political wins.

3. Don’t Fear Mistakes: AI adoption is rife with failure and course corrections. Despite widespread belief and rapid investment, over 80% of AI projects fail. Yet companies continue experimenting, learning, adjusting and trying again because they understand that innovation requires iteration. Education must take bold swings, have honest debriefs when things fall flat, adjust and move forward.

4. Democratize Access: AI reached 1.7 to 1.8 billion users globally in 2025. While quality varies and significant disparities exist, fundamental access has been opened up in ways that seemed impossible just years ago. When it comes to transformative change in education, every child deserves high-quality teachers, engaging curriculum and flourishing environments.

5. Own the Story, and Pass the Mic: Every day, AI gains new ambassadors among everyday people, inspiring others to jump in. The most powerful education stories come from young people discovering breakthroughs during light bulb moments, from parents seeing children thrive, from teachers witnessing walls coming down and possibilities surpassing imagination. We need to pass the mic, creating platforms for students to share what meaningful learning looks like, which will unlock aspirational stories that shift the system.

None of this is possible without student engagement. When students have voice and agency, believe in learning’s relevance and feel supported, transformative outcomes follow. As CEO of Our Turn, I was privileged to be part of efforts that inspired leaders and institutions across the country to invest in student engagement as a core strategy. We’re now seeing progress: all eight measures of school engagement tracked by Gallup reached their highest levels in 2025. This is an opportunity to build positive momentum; research consistently demonstrates engagement relates to academic achievement, post-secondary readiness, critical thinking, persistence and enhanced mental health.

Student engagement is the foundation from which all other educational outcomes flow. When we center student voice, we go from improving schools to galvanizing the next generation of engaged citizens and leaders our democracy desperately needs.

High-quality teachers are also essential. Over 365,000 positions are filled by uncertified teachers, with 45,500 unfilled. Teachers earn 26.4% less than similarly educated professionals. About 90% of vacancies result from teachers leaving due to low salaries, difficult conditions or inadequate support.

Programs like Philadelphia’s City Teaching Alliance prove what’s possible: over 90% of new teachers returned after 2023-24, versus just under 80% citywide. We must create conditions where teaching is sustainable and honored through higher salaries, better working conditions, meaningful professional development and cultures that value educators as professionals.

Investing in teacher quality is fundamental to workforce development, economic competitiveness and ensuring every child has access to excellent instruction. When we frame this as both a moral imperative and an economic necessity, we create the coalition necessary for lasting change.

Finally, transformation must focus on skill development. The workforce young people are entering demands more than technical knowledge; it requires integrated capabilities for navigating complexity, building authentic relationships and creating meaningful change.

At Harmonious Leadership, we’ve worked with foundations and organizations to develop leadership skills that result in greater innovation and impact. Our goals: young people more engaged in school and communities, and companies reporting greater levels of innovation, impact and financial sustainability.

The appeal here is undeniable. Workforce development consistently ranks among the top priorities across political divides. Given the rapid rate of change in our culture and economy, we need to develop skills for careers that don’t yet exist, for challenges we can’t yet imagine, for a world that demands creativity, adaptability and resilience.

The AI gold rush shows what’s possible when we set bold visions, invest for the long term, embrace learning from failure, democratize access and amplify voices closest to transformation. Our children, like my son drawing superheroes on worksheet backs, are in chrysalis moments. The choice is ours: remain paralyzed by complexity or channel the same urgency, investment and unity of purpose driving the AI revolution. We know what works: student engagement, quality teachers and future-ready skills. The question isn’t whether we have solutions. It’s whether we have courage to pursue them.
