(Image: Mass General is a teaching hospital of Harvard Medical School.)

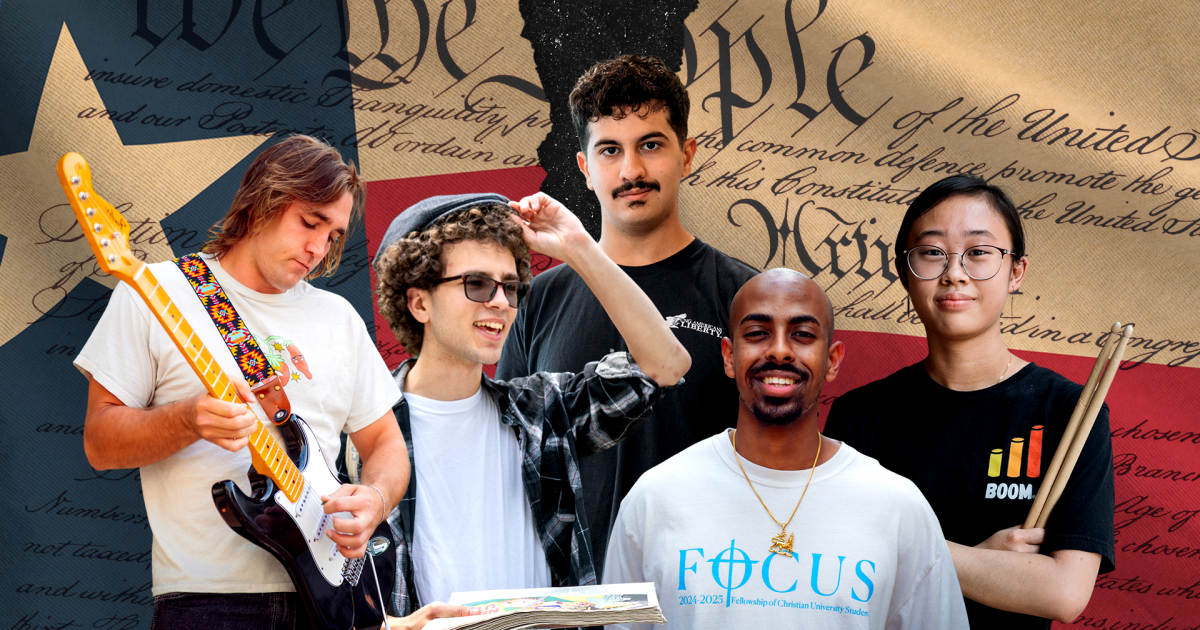

For decades, America’s elite university medical centers have been the epitome of healthcare research and innovation, providing world-class treatment, education, and cutting-edge medical advancements. Yet, beneath this polished surface lies a troubling legacy of medical exploitation, systemic inequality, and profound injustice—one that disproportionately impacts marginalized communities. While the focus has often been on racial disparities, this issue is not solely about race; it is also deeply entangled with class. In recent years, books like Medical Apartheid by Harriet Washington have illuminated the history of medical abuse, but they also serve as a reminder that inequality in healthcare goes far beyond race and touches upon the economic and social circumstances of individuals.

The term Medical Apartheid, as coined by Harriet Washington, refers to the systemic and institutionalized exploitation of Black Americans in medical research and healthcare. Washington’s work examines the history of Black Americans as both victims of medical experimentation and subjects of discriminatory practices that have left deep scars within the healthcare system. Yet, the complex interplay between race and class means that many poor or economically disadvantaged individuals, regardless of race, have also faced neglect and exploitation within these prestigious medical institutions. The legacy of inequality within elite university medical centers, therefore, is not limited to race but is also an issue of class disparity, where wealthier individuals are more likely to receive proper care and access to cutting-edge treatments while the poor are relegated to substandard care.

Historical examples of exploitation and abuse in medical centers are well documented in Washington’s work, and contemporary lawsuits and investigations reveal that these systemic problems persist. Poor patients, especially those from marginalized racial backgrounds, are often viewed as expendable research subjects. Such lawsuits underscore the intersection of race and class, arguing that patients’ socio-economic status exacerbates their vulnerability to medical exploitation and makes it easier for institutions to treat them as less than human, especially when they lack the resources or power to contest medical practices.

One of the most critical components of this issue is the stark contrast in healthcare access between the wealthy and the poor. While elite university medical centers boast state-of-the-art facilities, cutting-edge treatments, and renowned researchers, these resources are often not equally accessible to all. Wealthier patients are more likely to have the financial means to receive the best care, not just because of their ability to pay but because they are more likely to be referred to these prestigious centers. Conversely, low-income patients, especially those without insurance or with inadequate insurance, are often forced into overcrowded public hospitals or community clinics that are underfunded, understaffed, and unable to provide the level of care available at elite institutions.

The issue of class inequality within medical care is evident in several key areas. For instance, studies have shown that low-income patients, regardless of race, are less likely to receive timely and appropriate medical care. A 2019 report from the National Academy of Medicine found that low-income patients are often dismissed by healthcare professionals who underestimate the severity of their symptoms or assume they are less knowledgeable about their own health. In addition, patients from lower socio-economic backgrounds are more likely to experience medical debt, which can lead to long-term financial struggles and prevent them from seeking care in the future.

Moreover, class plays a significant role in the underrepresentation of poor individuals in medical research, which is often conducted at elite university medical centers. Historically, clinical trials have excluded low-income participants, leaving them without access to potentially life-saving treatments or advancements. Wealthier individuals, on the other hand, are more likely to be invited to participate in research studies, ensuring they benefit from the very innovations and breakthroughs that these institutions claim to provide.

Class-based disparities are also reflected in the inequities in medical professions. The road to becoming a physician or researcher in these elite institutions is often paved with significant economic barriers. Medical students from low-income backgrounds face steep financial challenges, which can hinder their ability to gain acceptance into prestigious medical schools or pursue advanced research opportunities. Even when low-income students do manage to enter these programs, they often face biases and discrimination in clinical settings, where their abilities are unfairly questioned, and their economic status may prevent them from fully participating in research or other educational opportunities.

Yet the inequities within these institutions don’t stop at the patients. Behind the scenes, workers at elite university medical centers, particularly those from working-class and marginalized backgrounds, face their own form of exploitation. These medical centers are not only spaces of high medical achievement but also sites of labor stratification, where workers in lower-paying roles are largely people of color and often immigrants. Support staff, such as custodians, food service workers, and administrative assistants, are often invisible but essential to the functioning of these hospitals and research institutions. These workers face long hours, poor working conditions, and low wages, all while contributing to the daily operations of elite medical centers. Many of them, employed through third-party contractors, lack benefits, job security, or protections, leaving them vulnerable to exploitation.

Custodial workers, who are often exposed to hazardous chemicals and physically demanding work, may struggle to make ends meet despite playing a crucial role in maintaining the hospital environment. Food service workers, many of whom are Black, Latinx, or immigrants, likewise work in demanding conditions for low wages. These workers frequently face job insecurity and do not receive the same recognition or compensation as the high-ranking physicians, researchers, or administrators in these centers.

At the same time, the stratification in these institutions extends beyond support staff. Medical researchers, residents, and postdoctoral fellows—often young, early-career individuals, many from working-class backgrounds or communities of color—are similarly subjected to precarious working conditions. These individuals perform much of the vital research that drives innovation at these centers, yet they often face exploitative working hours, low pay, and job insecurity. They are the backbone of the institution’s research output but frequently face barriers to advancement and recognition.

The higher ranks of these institutions—senior doctors, professors, and researchers—enjoy financial rewards, job security, and prestige, while those at the lower rungs continue to experience instability and exploitation. This division, which mirrors the economic and racial hierarchies of broader society, reinforces the very class-based inequalities these medical centers are meant to address.

In recent years, some progress has been made in addressing these inequalities. Many elite universities have implemented diversity and inclusion programs aimed at increasing access for underrepresented minority and low-income students in medical schools. Some institutions have also begun to emphasize the importance of cultural competence in training medical professionals, acknowledging the need to recognize and understand both racial and economic disparities in healthcare.

However, critics argue that these efforts, while important, are often superficial and fail to address the root causes of inequality. The institutional focus on “diversity” and “inclusion” often overlooks the more significant structural issues, such as the affordability of education, the class-based access to healthcare, and the economic barriers that continue to undermine the ability of disadvantaged individuals to receive quality care.

In addition to acknowledging racial inequality, it is crucial to tackle the broader issue of class within the healthcare system. The disproportionate number of Black and low-income individuals suffering from poor healthcare outcomes is a direct result of a system that privileges wealth and status over human dignity. To begin addressing these issues, we need to move beyond token diversity initiatives and work toward policy reforms that focus on economic access, insurance coverage, and the equitable distribution of medical resources.

Scholars like Harriet Washington, whose work documents the intersection of race, class, and healthcare inequality, continue to play a pivotal role in bringing attention to these systemic injustices. Washington’s book Medical Apartheid serves not only as a historical record but also as a call to action for creating a healthcare system that genuinely serves all people, regardless of race or socio-economic status. The fight for healthcare equity must therefore be a dual one, waged against both the racial and the class-based disparities that have long plagued our medical institutions.

The story of Henrietta Lacks, as told in The Immortal Life of Henrietta Lacks by Rebecca Skloot, exemplifies the longstanding exploitation of marginalized individuals in elite university medical centers. The case of Lacks, whose cells were taken without consent by researchers at Johns Hopkins University, brings to light both the historical abuse of Black bodies and the profit-driven nature of academic medical research. Johns Hopkins, one of the most prestigious medical centers in the world, has been complicit in the kind of exploitation and neglect that these institutions are often criticized for—issues that disproportionately affect not only Black Americans but also economically disadvantaged individuals.

The Black Panther Party’s healthcare activism, as chronicled by Alondra Nelson in Body and Soul, also directly challenges elite medical institutions’ failure to provide adequate care for Black and low-income communities. Nelson’s work reflects how, even today, these institutions are often slow to address the systemic issues of health disparities that activists like the Panthers fought against.

Recent lawsuits against elite medical centers further underscore the importance of holding these institutions accountable for their role in perpetuating medical exploitation and inequality. In An American Sickness, Elisabeth Rosenthal explores the commercialization of healthcare, showing how university hospitals and medical centers often prioritize profits over patient care, leaving low-income and marginalized groups with limited access to treatment. Her work highlights the role these institutions play in a larger system that disproportionately benefits wealthier patients while neglecting the most vulnerable.

A Global Comparison: Countries with Better Health Outcomes

While the United States struggles with systemic healthcare disparities, other nations have shown that equitable healthcare outcomes are possible when class and race are not barriers to care. Nations with universal healthcare systems, such as those in Canada, the United Kingdom, and many Scandinavian countries, consistently rank higher in overall health outcomes compared to the U.S.

For instance, Canada’s single-payer system ensures that all citizens and permanent residents have access to healthcare, regardless of their income. This system reduces the financial burdens that often lead patients to delay care or avoid treatment because of cost. According to the World Health Organization, Canada has better health outcomes than the U.S. on a variety of metrics, including life expectancy and infant mortality. In the U.S., by contrast, medical costs often lead to unequal access to care.

Similarly, the United Kingdom’s National Health Service (NHS) provides healthcare free at the point of use for all residents. Despite challenges such as funding constraints and wait times, the NHS has been successful in ensuring that healthcare is treated as a right, not a privilege. The U.K. consistently ranks higher than the U.S. in terms of access to care, health outcomes, and overall public health.

Nordic countries, such as Norway and Sweden, also exemplify how universal healthcare can lead to better outcomes. These countries invest heavily in public health and preventative care, ensuring that even their most marginalized citizens receive the necessary medical services. The result is a population with some of the highest life expectancies and lowest rates of chronic diseases in the world.

These nations show that, while access to healthcare is a critical issue in the U.S., the challenge is not a lack of innovation or capability. Instead, it is the systemic barriers—both racial and economic—that persist in elite medical centers, undermining the potential for universal health equity. The U.S. could learn from these nations by adopting policies that reduce economic inequality in healthcare access and focusing on preventative care and public health strategies that serve all people equally.

Ultimately, the dark legacy of elite university medical centers is not something that can be erased, but it is something that must be acknowledged. Only by confronting this painful history, alongside addressing class-based disparities, can we begin to build a more just and equitable healthcare system—one that serves everyone, regardless of race, background, or socio-economic status. Until this happens, the distrust and skepticism that many marginalized communities feel toward these institutions will continue to shape the landscape of American healthcare. The path forward requires a concerted effort to address both racial and class-based inequities that have defined these institutions for far too long. The U.S. can, and must, strive for healthcare outcomes akin to those seen in nations that have built systems prioritizing equity and fairness—systems that put human dignity over profit.