The standout quote for me from new Office for Students (OfS) commissioned research on student consumer rights comes from a 21-year-old undergrad in a focus group:

If you were unhappy with your course, I don’t know how you’d actually say to them, ‘I want my money back, this was rubbish,’ basically. I don’t think that they would actually do that. It would just be a long, drawn-out process and they could just probably just argue for their own sake that your experience was your experience, other students didn’t agree, for example, on your course.

There’s a lot going on in there. It captures the power imbalance between students and institutions, predicts institutional defensiveness, anticipates bureaucratic obstacles, and reveals a kind of learned helplessness – this student hasn’t even tried to complain, and has already concluded it’s futile.

It’s partly about dissatisfaction with what’s being delivered, and a lack of clarity about their rights. But it’s also about students who don’t believe that raising concerns will achieve anything meaningful.

Earlier this year, the regulator asked Public First to examine students’ perceptions of their consumer rights, and here we have the results of a nationally representative poll of 2,001 students at providers in England, alongside two focus groups.

On the surface, things look pretty healthy – 83 per cent of students believe the information they received before enrolment was upfront, clear, timely, accurate, accessible and comprehensive, and the same proportion say their learning experience aligns with what they were promised.

But scratch a bit and we find a student body that struggles to distinguish between promises and expectations, that has limited awareness of their rights, that doesn’t trust complaints processes to achieve anything meaningful, and that is largely unaware of the external bodies that exist to protect them.

Whether you see this as a problem of comms, regulatory effectiveness, or student engagement probably depends on where you sit – but it’s hard to argue it represents a protection regime that’s working as intended.

Learning to be helpless

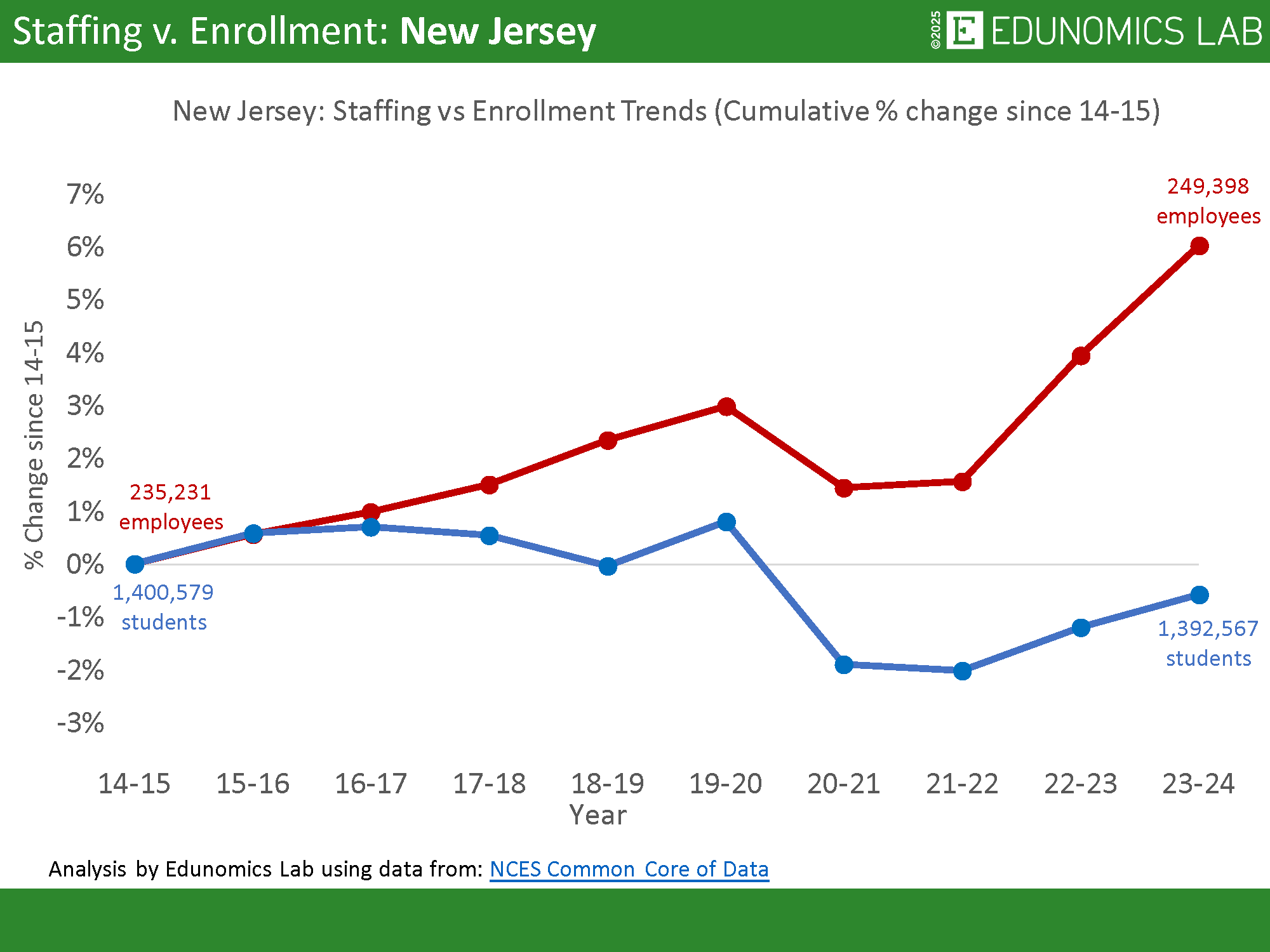

Research on complaints tends towards five interlocking barriers that prevent people from holding institutions and service providers to account – and each of them can be found in this data.

There are opportunity costs (complaining takes time and energy), conflict aversion (people fear confrontation), confidence and capital (people doubt they have standing to complain), ignorance (people don’t know their rights), and fear of retribution (people worry about consequences). In this research, they combine to create an environment in which students who experience problems just put up with them.

When they were asked about the biggest barrier to making a complaint, the top answer was doubt that it would make a difference – cited by 36 per cent of respondents. The polling also found that 26 per cent of students said they have “no faith” that something would change if they raised a complaint, and around one in six students (17 per cent) disagreed with the statement “at my university, students have a meaningful say in decisions that affect their education.”

One postgrad described the experience of repeatedly raising concerns about poor organisation:

People also just don’t think anything’s going to happen if they make a complaint, like I don’t think it would. With my masters’, it was so badly organised at the start, like we kept turning up for lectures and people just wouldn’t turn up and things like that […] We had this group chat and we were all like, ‘What’s going on? We’re paying so much money for this,’ and […] it just seemed like no one knew what was going on, but we raised it to the rep to raise it to like one of the lecturers and then […] it would just still happen. So it’s like they’re not going to change it.

That’s someone who tried to work the system, followed the proper channels, raised concerns through the designated representative – and concluded it was futile.

The second most common barrier captures the opportunity costs thing – lack of time or energy to go through the process, cited by 35 per cent. Combined with doubting it would make a difference, we end up with a decent proportion of students who have cost-benefit analysed complaining and decided it’s not worth the effort. Domestic students were particularly likely to cite futility as a barrier – 41 per cent versus 25 per cent of international students.

They’ve learned helplessness – and only change their ways when failures impact their marks, only to find that “you should have complained earlier” is the key response they’ll get when the academic appeal goes in.

Fear of retribution is also in there. About a quarter of students cited concern that complaining might affect their grades or relationships with staff (25-26 per cent) or said they felt intimidated or worried about possible consequences (23-26 per cent). A postgraduate put it bluntly:

I think people are scared of getting struck off their course.

Another student imagined what would happen if they tried to escalate to an external body:

I think [going to the OIA] would have to be a pretty serious thing to do, and I think that because it’s external to the university, I’d feel a little bit like a snitch. I would have to have a lot of evidence to back up what I’m saying, and I think that it would be a really long, drawn-out process, that I ultimately wouldn’t really trust would get resolved. And so I just wouldn’t really see it as worth it to make that complaint.

That’s the way it is

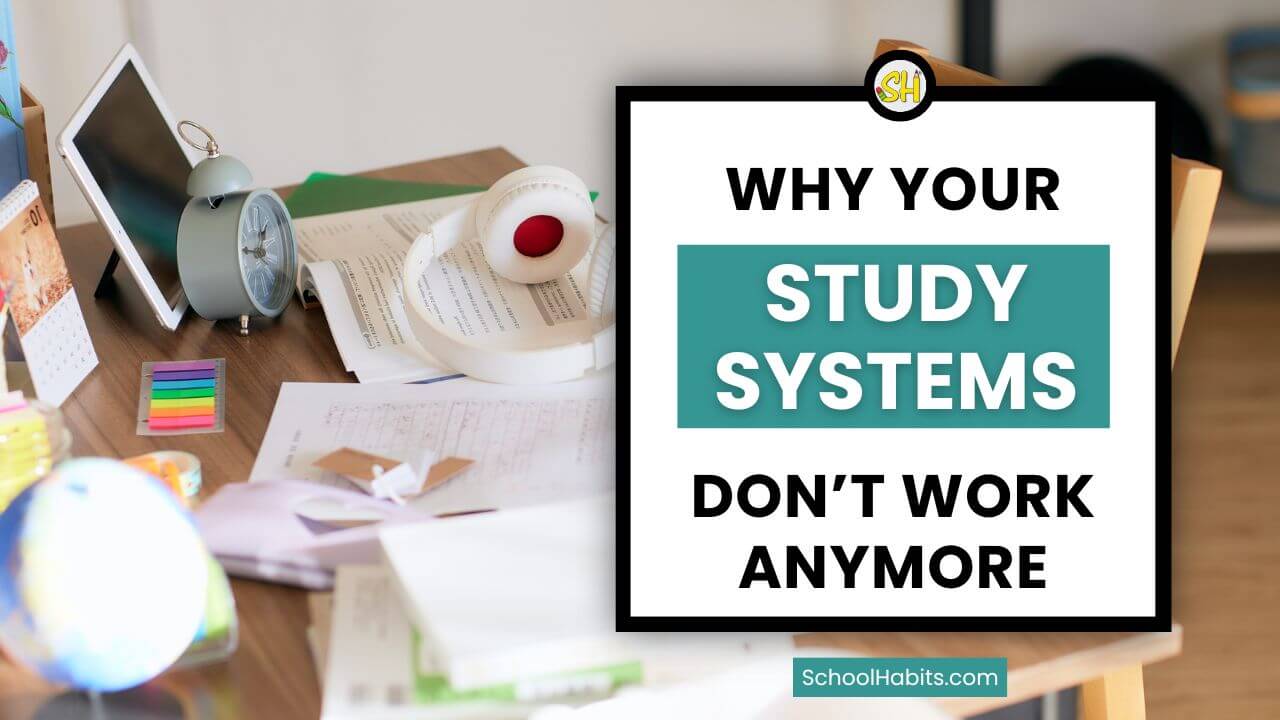

What are students accepting as just how things are? The two things students were most likely to identify as promises from their university were a well-equipped campus, facilities and accommodation (79 per cent) and high quality teaching and resources (78 per cent).

Nearly three-quarters of students (74 per cent) said the promises made by their university had not been fully met – 59 per cent said they had been mostly met, 14 per cent partially met and 1 per cent not met at all, leaving just 24 per cent who thought promises had been fully met.

Yet fewer than half of respondents said these were “clear and consistent parts of their university experiences” – 42 per cent for physical resources and just 37 per cent for teaching and resources. In other words, the things students most clearly remember being promised are precisely the things that, for a large minority, show up as patchy, unreliable features of day-to-day university life rather than dependable fixtures.

There’s also a 41 percentage point gap between what students believe they were promised on teaching quality and what they report actually experiencing – 78 per cent say high quality teaching and resources were promised, but only 37 per cent say that kind of provision is a clear and consistent part of their experience. Public First note that “high quality” wasn’t explicitly defined in the polling, so these are students’ own judgements rather than a technical standard – but the size of the mismatch is still striking.

About a quarter of students (23 per cent) reported receiving lower quality teaching than expected, rising to 26 per cent among undergraduates. Twenty-two per cent experienced fewer contact hours and more online or hybrid teaching than expected, and twenty-one per cent reported limited access to academic staff.

One undergraduate described being taught by someone who made clear he didn’t want to be there:

One of our lecturers, he wasn’t actually a sports journalism lecturer, he’s just off the normal journalism course, and he made it pretty clear that he didn’t like any of us and he didn’t want to be there when he was teaching us. And we basically got told that we had to go and get on with it, pretty much. So there wasn’t any sort of solution of, ‘We’ll change lecturers,’ or anything, it’s just, ‘You’ll get in more trouble if you don’t go, so just get on with it and finish it.’

When presented with a list of possible disruptions and asked which they’d experienced, 70 per cent identified at least one type. The most common was cancellation or postponement of in-person teaching, reported by 35 per cent of undergraduates. Industrial action affecting teaching or marking hit 20 per cent of students overall, and 16 per cent said it had significantly impacted their academic experience.

Limited support from academic staff affected 20 per cent overall, rising to one in four postgraduate students – and this was the disruption that students were most likely to say had significantly impacted their experience (23 per cent overall, climbing to 32 per cent among international students).

Telling is how dissatisfied students were with institutional responses to disruptions. Forty-two per cent said they were not that satisfied or not at all satisfied with their institution’s response to cancelled or postponed teaching – 45 per cent said the same about the response to strikes or industrial action. In other words, students experienced disruption, they weren’t happy with how it was handled, and yet most didn’t complain, because (again) they didn’t think it would achieve anything.

Informal v informant

Unsurprisingly, most students (65 per cent) had never lodged a formal complaint against their institution. On its face, that could look like satisfaction – if students aren’t complaining, perhaps things are generally fine. But when you dig into the reasons students give for not complaining, about one in four students (24 per cent) who hadn’t complained said they weren’t confident they’d know how to go about it – that’s the ignorance barrier.

And the bigger obstacles weren’t procedural – they were about believing it was pointless or fearing consequences.

When students did complain, they were at least twice as likely to have done so through informal channels (such as course representatives or conversations, 23 per cent) as through formal procedures (11 per cent). That’s your conflict aversion in action – you try the informal route first, see if you can get something fixed quietly without escalating to a formal process that might create confrontation.

But it also means the formal complaints processes that are supposed to provide accountability and redress (and documented institutional learning) are being bypassed by students who’ve concluded they’re not worth engaging with.

Among those who did complain formally, around half (54 per cent) felt satisfied with their institution’s handling of it – which means nearly half didn’t. So if you’re a student considering whether to raise a complaint, you believe there’s roughly a 50-50 chance it won’t be handled satisfactorily, and you’ve already concluded there’s a strong likelihood it won’t change anything anyway, why would you bother?

Especially when you add in the other barriers – concern it might affect grades or relationships with staff, feeling intimidated or worried about consequences, lack of trust in the university to handle it fairly.

The focus groups reinforce the picture of systematic dismissal. One undergraduate explained the calculation:

If you were unhappy with your course, I don’t know how you’d actually say to them, ‘I want my money back, this was rubbish,’ basically. I don’t think that they would actually do that. It would just be a long, drawn-out process and they could just probably just argue for their own sake that your experience was your experience, other students didn’t agree, for example, on your course.

That’s someone who has already mapped out in their head exactly how the institution would respond – they’d argue it’s subjective, other students were happy, your experience doesn’t represent a breach of contract. And, of course, they’re probably right.

An entitled generation

If students don’t believe complaining will achieve anything, part of the reason is that they don’t really understand what they’re entitled to expect in the first place. The research found that only 50 per cent of students said they understood and could describe their rights and entitlements as a student – which very much undermines the whole premise of students as empowered consumers able to hold institutions to account.

When asked how well informed they felt about various rights, the results were even worse. Only 32 per cent of students felt well informed about their right to fair and transparent assessment – the highest figure for any right listed. More than half (52 per cent) said they felt not that well informed or not at all informed about their right to receive compensation. You can’t assert rights you don’t know you have.

The focus groups then show just how fuzzy students’ understanding of “promises” really is. Participants found it difficult to identify what had been explicitly promised to them, with received ideas about higher education playing a significant role in shaping student expectations.

They could articulate areas where their experiences fell short – reduced contact hours, poor teaching quality, limited access to careers support – but struggled to identify where these amounted to broken promises.

One undergraduate captured this confusion as follows:

I personally think I do get what I was promised when I applied to university. Not like I’m an easy-going person or anything, but I do get what I need in the university, yes.

Notice the subtle shift from “promised” to “need” – the student can’t quite articulate what was promised, so they fall back on whether they’re getting what they need, which is a much vaguer and more subjective standard.

This matters a lot, because if you don’t know what you were promised, you can’t confidently assert that a promise has been broken. You might feel disappointed, you might think things should be better, but you can’t point to a specific commitment and say “you told me X and you’ve given me Y.”

Which means that even when students want to complain, they’re starting from a position of uncertainty about whether they have grounds to do so. It’s the perfect recipe for learned helplessness – you’re dissatisfied, but you’re not sure if you’re entitled to be dissatisfied, so you conclude it’s safer to just accept it.

The one clear exception? Doctoral students, who were confident they’d been promised the support of a supervisor:

When I was applying for a PhD, I applied to several universities, so I was selected and accepted in [Institution A] and [Institution B], but I decided to come to [Institution A] for the supervisor – he interviewed me, he sent me the acceptance letter.

Getting on the escalator

If the picture so far suggests a system where students lack confidence in internal complaints processes, the findings on external avenues for redress make sense. Only 8 per cent of all students had heard of the Office of the Independent Adjudicator for Higher Education (OIA), and the focus groups confirm there was “little to no awareness of external organisations or avenues of redress for students”.

More broadly, more than a third (35 per cent) of students said they were unaware of any of the external organisations or routes listed through which students in England can raise complaints about their university – rising to 41 per cent among undergraduates and 38 per cent among domestic students. The list they were shown included the OIA, the OfS, Citizens Advice, solicitors, local MPs, the QAA, and trade unions or SUs like NUS. More than a third couldn’t identify a single one of these as somewhere you might go with a concern about your university.

As for OfS itself, just 18 per cent of students overall had heard of it, falling to 14 per cent among undergraduates. Let’s go ahead and assume that they’ve not read Condition B2.

When asked where they would go for information about their rights, the most common answer was the university website (53 per cent) or just searching online (51 per cent). About 42 per cent said they’d look to their SU for information about rights. That’s positive – SUs are meant to provide independent advice and advocacy for students. But the fact that only 42 per cent think to go there, versus 53 per cent who’d go to the university website, suggests SUs aren’t being seen as the first port of call.

Among postgraduates in the focus groups, there was “limited interest in the use of these avenues for redress”, with the implicit sense that if intra-institutional channels of redress seemed drawn-out, daunting and potentially fruitless, it was unlikely that “resorting to extra-institutional channels would make the situation better”. If students have concluded that internal processes are bureaucratic and ineffective, they’re not going to invest additional time and energy in external ones – especially when they don’t know those external routes exist in the first place.

Explorations

It’s an odd little bit of research in many ways. It’s hard to tell if recommendations have been deleted, or just weren’t asked for – either way, they’re missing. It’s also frustratingly divorced from OfS’ wider work on “treating students fairly” – I know from my own work over the decades that students tend initially to be overconfident about their rights knowledge, only to realise they’ve over- or undercooked it when you give them crunchier statements like these “prohibited behaviours” (which of course only seem to be “prohibited”, for the time being, in providers that will join the register in the future).

More curious is the extent to which OfS knows all of this already. Six years ago this board paper made clear that consumer protection arrangements were failing students on multiple fronts. It knew that information available to support student choice was inadequate – insufficiently detailed about matters that actually concern students and poorly structured for meaningful comparisons between providers and courses, with disadvantaged students and mature learners particularly affected by lack of accessible support and guidance.

It knew that the contractual relationship between students and providers remains fundamentally unequal, with ongoing cases of unclear or unfair terms that leave students uncertain about what they’re actually purchasing in terms of quality, contact time, support and costs, while terms systematically favoured providers.

It also knew that its existing tools weren’t allowing intervention even when it saw evidence that regulatory objectives were not being delivered, and questioned whether a model requiring individual students to challenge providers for breaches was realistic or desirable.

So many things would help. Recognition of the role of student advocacy, closer adjudication, better coordination between OfS and the OIA, and banning NDAs for more than sexual misconduct are four that spring to mind – all of which should be underpinned by a proper theory of change that assumes that not all power over English HE is held in Westward House in Bristol.

If students have concluded that complaining is futile, there are really three possible responses. One would be to figure that the promises being made raise expectations too high. But there are so many actors specifically dedicated to not talking down a particular university or the sector in general as to render “tell them reality” fairly futile.

Another is to try to convince them they’re wrong – better communications about rights, clearer signposting of redress routes, more prominent information about successful complaints. You obviously can’t give that job to universities.

The third would be to ask what would need to change for complaining to actually be worthwhile. That would require processes that are genuinely quick and accessible, institutional cultures where raising concerns is welcomed rather than seen as troublemaking, meaningful remedies when things go wrong, and external oversight bodies that can intervene quickly and effectively.

But there’s no sign of any of that. A cynic might conclude that a regulator under pressure to help providers manage their finances might need to keep busy and look the other way while modules are slashed and facilities cut.

Why this matters more than it might seem

Over the years, people have asserted to me that students-as-consumers, or even the whole idea of student rights, is antithetical to the partnership between students and educators required to create learning and its outcomes.

“It’s like going to the gym”, they’ll say. “You don’t get fit just by joining”. Sure. But if the toilets are out of order or the equipment is broken, you’re not a partner then. The odd student will try it on. But most students are perfectly capable of keeping two analogies in their head at the same time.

In reality, it’s not rights but resignation, when it becomes systematic, that corrodes the basis on which the student-university relationship is supposed to work. If students don’t believe they can hold institutions to account, then all the partnership talk in the world becomes hollow.

National bodies can write ever more detailed conditions about complaint processes, information provision, and student engagement. Universities can publish ever more comprehensive policies about policies and redress mechanisms. None of it matters if students have concluded that actually using those mechanisms is futile.

There’s something profoundly upsetting about a system where three-quarters of students believe promises haven’t been kept, but most conclude there’s no point complaining because nothing will change. It speaks to a deeper breakdown than just poor communications or inadequate complaints processes.

It’s precisely because students aren’t just consumers purchasing a service that we should worry. They’re participants in an institution that’s supposed to be about more than transactions. Universities ask students to trust them with years of their lives, substantial amounts of money (whether paid upfront by international students or through future loan repayments by domestic students), and significant life decisions about career paths and personal development.

In return, students are supposed to be able to trust that universities will deliver what they promise, listen when things go wrong, and be held accountable when they fail to meet their end of the deal.

The parallels with broader social contract failures are hard to miss. Just as students don’t believe complaining will change anything at their university, many young people don’t believe political engagement will change anything in society more broadly. Just as students have concluded that formal institutional processes are unlikely to deliver meaningful redress, many citizens have concluded that formal democratic processes are unlikely to deliver meaningful change.

The learned helplessness this research documents in higher education mirrors learned helplessness – which later turns to extremism – in civic life.

I don’t think I’ve ever heard of any uni willing to reimburse or cover if they’ve done a poor job of teaching. That’s never come to me.

They’re right.