A YouTube video about Spotify popped into my feed this weekend, and it’s been rattling around my head ever since.

Partly because it’s about music streaming, but mostly because it says so much about what’s wrong with how we think about student choice in higher education.

The premise runs like this. A guy decides to do “No Stream November” – a month without Spotify, using only physical media instead.

His argument, backed by Barry Schwartz’s paradox of choice research and a raft of behavioural economics, is that unlimited access to millions of songs has made us less satisfied, not more.

We skip tracks every 20 to 30 seconds. We never reach the guitar solo. We’re treating music like a discount buffet – trying a bit of everything but never really savouring anything. And then we go back to the playlists we made earlier.

The video’s conclusion is that scarcity creates satisfaction. Ritual and effort (opening the album, dropping the needle, sitting down to actually listen) make music meaningful.

Six carefully chosen options produce more satisfaction than 24, let alone millions. It’s the IKEA effect applied to music – we value what we labour over.

I’m interested in choice. Notwithstanding the debate over what a “course” is, Unistats data shows that there were 36,421 of them on offer in 2015/16. This year that figure is 30,801.

That still feels like a lot, given that the University of Helsinki only offers 34 bachelor’s degree programmes.

Of course, many of the entries on Discover Uni are separate “with a foundation year” listings, and there are plenty of subject combinations.

But nevertheless, the UK’s bewildering range of programmes must be quite a nightmare for applicants to pick through – it’s just that once students are on them, job cuts and switches to block teaching mean programmes offer less and less choice in elective pathways.

We appear to have a system that combines overwhelming choice at the point of least knowledge (age 17, alongside A-levels, with imperfect information) with rigid narrowness at the point of most knowledge (once enrolled, when students actually understand what they want to study and why). It’s the worst of both worlds.

What the white paper promises

The government’s vision for improving student choice runs to a couple of paragraphs in the Skills White Paper, and it’s worth quoting in full:

We will work with UCAS, the Office for Students and the sector to improve the quality of information for individuals, informed by the best evidence on the factors that influence the choices people make as they consider their higher education options. Providing applicants with high-quality, impartial, personalised and timely information is essential to ensuring they can make informed decisions when choosing what to study. Recent UCAS reforms aimed at increasing transparency and improving student choice include historic entry grades data, allowing students, along with their teachers and advisers, to see both offer rates and the historic grades of previous successful applicants admitted to a particular course, in addition to the entry requirements published by universities and colleges.

As we see more students motivated by career prospects, we will work with UCAS and Universities UK to ensure that graduate outcomes information spanning employment rates, earnings and the design and nature of work (currently available on Discover Uni) are available on the UCAS website. We will also work with the Office for Students to ensure their new approach to assessing quality produces clear ratings which will help prospective students understand the quality of the courses on offer, including clear information on how many students successfully complete their courses.

The implicit theory of change is straightforward – if we just give students more data about each of the courses, they’ll make better choices, and everyone wins. It’s the same logic that says if Spotify added more metadata to every track (BPM, lyrical themes, engineer credits), you’d finally find the perfect song. I doubt it.

Pump up the Jam

If the Department for Education (DfE) was serious about deploying the best evidence on the factors that influence the choices people make, it would know about the research showing that more information doesn’t solve choice overload, because choice overload is a cognitive capacity problem, not an information quality problem.

Sheena Iyengar and Mark Lepper’s foundational 2000 study in the Journal of Personality and Social Psychology found that when students faced 30 essay topic options versus six options, completion rates dropped from 74 per cent to 60 per cent, and essay quality declined significantly on both content and form measures. That’s a 14 percentage point completion drop from excessive choice alone, and objectively worse work from those who did complete.

The same paper’s better-known jam study found that shoppers were ten times more likely to buy when presented with six flavours rather than 24, even though 60 per cent of passers-by stopped at the extensive display compared with 40 per cent at the limited one. More choice is simultaneously more appealing and more demotivating. That’s the paradox.

CFE Research’s 2018 study for the Office for Students (back when providing useful research for the sector was something it did) laid this all out explicitly for higher education contexts.

Decision making about HE is challenging because the system is complex and there are lots of alternatives and attributes to consider. Those considering HE are making decisions in conditions of uncertainty, and in these circumstances, individuals tend to rely on convenient but flawed mental shortcuts rather than solely rational criteria. There’s no “one size fits all” information solution, nor is there a shortlist of criteria that those considering HE use.

The study found that students rely heavily on family, friends, and university visits, and many choices ultimately come down to whether a decision “feels right” rather than rational analysis of data. When asked to explain their decisions retrospectively, students’ explanations differ from their actual decision-making processes – we’re not reliable informants about why we made certain choices.

A 2015 meta-analysis by Chernev, Böckenholt, and Goodman in the Journal of Consumer Psychology identified the conditions under which choice overload occurs – it’s moderated by choice set complexity, decision task difficulty, and individual differences in decision-making style. Working memory capacity limits humans to processing approximately seven items simultaneously. When options exceed this cognitive threshold, students experience decision paralysis.

Maximiser students (those seeking the absolute best option) make objectively better decisions but feel significantly worse about them. In Iyengar, Wells and Schwartz’s 2006 study of graduating college students, maximisers landed jobs with 20 per cent higher starting salaries yet felt less satisfied, more stressed, frustrated, anxious, and regretful than satisficers (those accepting “good enough”). For UK applicants facing tens of thousands of courses, maximisers face a nearly impossible optimisation problem, leading to chronic second-guessing and regret.

The equality dimension is especially stark. Bailey, Jaggars, and Jenkins’s research found that students in “cafeteria college” systems with abundant disconnected choices “often have difficulty navigating these choices and end up making poor decisions about what programme to enter, what courses to take, and when to seek help.” Only 30 per cent completed three-year degrees within three years.

First-generation students, students from lower socioeconomic backgrounds, and students of colour are systematically disadvantaged by overwhelming choice because they lack the cultural capital and family knowledge to navigate it effectively.

The problem once in

But if unlimited choice at entry is a cognitive overload problem, what happens once students enrol should balance that with flexibility and breadth. Students gain expertise, develop clearer goals, and should have more autonomy to explore and specialise as they progress.

Except that’s not what’s happening. Financial pressures across the sector are driving institutions to reduce module offerings – exactly when research suggests students need more flexibility, not less.

The Benefits of Hindsight research on graduate regret says it all. A sizeable share of applicants later wish they’d chosen differently – not usually to avoid higher education, but to pick a different subject or provider. The regret grows once graduates hit the labour market.

Many students who felt mismatched would have liked to change course or university once enrolled – about three in five undergraduates and nearly two in three graduates among those expressing regret – but didn’t, often because they didn’t know how, thought it was too late, or feared the cost and disruption.

The report argues there’s “inherent rigidity” in UK provision – a presumption that the initial choice should stick despite evolving interests, new information, and labour-market realities. Students described courses being less practical or less aligned to work than expected, or modules being withdrawn as finances tightened. That dynamic narrows options precisely when students are learning what they do and don’t want.

Career options become the dominant reason graduates cite for wishing they’d chosen differently. But that’s not because they lacked earnings data at 17. It’s because their interests evolved, they discovered new fields, labour market signals changed, and the rigid structure gave them no way to pivot without starting again.

The Competition and Markets Authority’s guidance now explicitly lists, as an example of a misleading action, cases “where an HE provider gives a misleading impression about the number of optional modules that will be available.” Students have contractual rights to the module catalogue promised during recruitment. Yet redundancy rounds repeatedly reduce the size and scope of optional module catalogues for students who remain.

There’s also an emerging consensus from the research on what actually works for module choice. An LSE analysis found that adding core modules within the home department was associated with higher satisfaction, whereas mandatory modules outside the home department depressed it. Students want depth and coherence in their chosen subject, but they also value being able to choose their own breadth options.

Research repeatedly shows that elective modules are evaluated more positively than required ones (autonomy effects), and interdisciplinary breadth is associated with stronger cross-disciplinary skills and higher post-HE earnings when it’s purposeful and scaffolded.

What would actually work

So what does this all suggest?

As I’ve discussed on the site before, at the University of Helsinki – Finland’s flagship institution with 40,000 students – there are 32 undergraduate programmes. Within each 180-credit bachelor’s degree, students take 90 ECTS credits in their major subject, and 75 ECTS credits must come from other programmes’ modules. That’s 42 per cent of the degree as mandatory breadth, but students choose which modules to take from clear disciplinary categories.

The structure is simple – six five-credit introductory courses in your subject, then 60 credits of intermediate study with substantial module choice, including proseminars, thesis work, and electives. Add 15 credits for general studies (study planning, digital skills, communication), and you’ve got a degree. The two “modules” (what we’d call stages) get a single grade each on a one-to-five scale, producing a simple, legible transcript.

Helsinki runs this on a student-staff ratio of 22.2 to one, significantly worse than the UK average, after Finland faced €500 million in higher education cuts. It’s not lavishly resourced – it’s structurally efficient.

Maynooth University in Ireland reduced its entry routes through the CAO (Ireland’s equivalent of UCAS) from about 50 to roughly 20, specifically to “ease choice and deflate points inflation.” Students can start with up to four subjects in year one, then move to single major, double major, or major with minor. Switching options are kept open through first year. It’s progressive specialisation – broad exploration early when students have least context, increasing focus as they develop expertise.

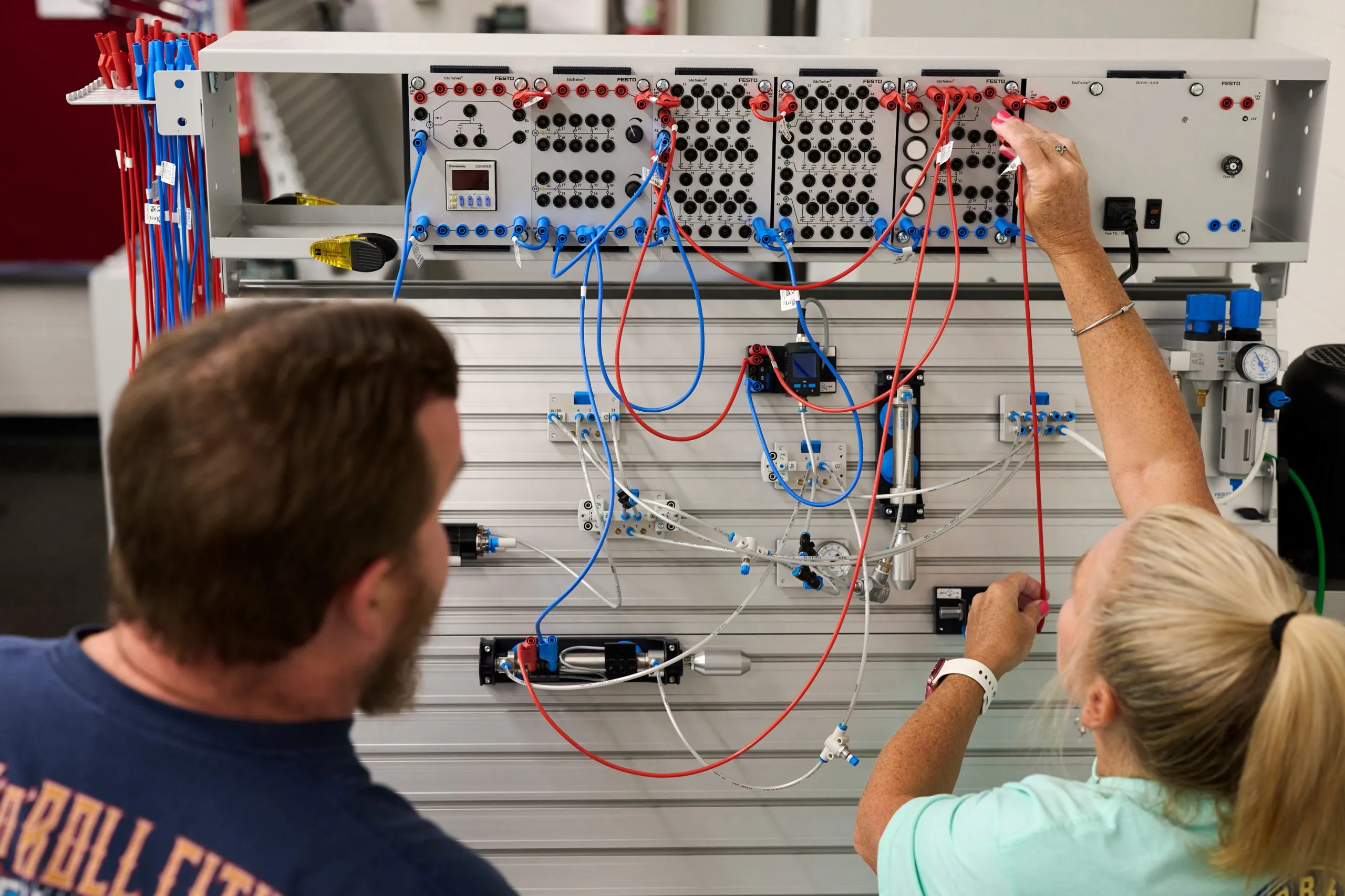

As also covered elsewhere on the site, Técnico in Lisbon – the engineering and technology faculty of the University of Lisbon – rationalised to 18 undergraduate courses following a student-led reform process. Those 18 courses contain hundreds of what the UK system would call “courses” via module combinations, but without the administrative overhead. They require nine ECTS credits (of 180) in social sciences and humanities for all engineering programmes because “engineers need to be equipped not just to build systems, but to understand the societies they shape.”

Crucially, students themselves pushed for this structure. They conducted structured interviews, staged debates, and developed reform positions. They wanted shared first years, fewer concurrent modules to reduce cognitive load, more active learning methods, and more curricular flexibility including free electives and minors.

The University of Vilnius allows up to 25 per cent of the degree to be taken as “individual studies”, but it’s structured into clear categories: minors (30 to 60 credits in a secondary field, potentially leading to a double diploma), languages (20-plus options with specific registration windows), interdisciplinary modules (curated themes) and cross-institution courses (formal cooperation with arts and music academies). Not unlimited chaos, just structured exploration within categorical choices.

What all these models share is a recognition that you can have both depth and breadth, structure and flexibility, coherence and exploration – if you design programmes properly. You need roughly 60 to 70 per cent core pathway in the major for depth and satisfaction, 20 to 30 per cent guided electives organised into three to five clear categories per decision point, and maybe 10 to 15 per cent completely free electives.
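As a rough illustration of how that rule of thumb could be applied to a programme specification, here is a minimal Python sketch. The three bands are the ones given above; the function name `check_split`, the category labels and the 120/40/20 credit split for a 180-credit degree are hypothetical, chosen only to show the check.

```python
# Hypothetical checker for the split suggested above: 60-70% core major,
# 20-30% guided electives, 10-15% free electives. Band values come from
# the text; the function and category names are illustrative only.

BANDS = {
    "core": (0.60, 0.70),
    "guided": (0.20, 0.30),
    "free": (0.10, 0.15),
}


def check_split(credits: dict[str, int]) -> dict[str, tuple[float, bool]]:
    """Return each component's share of total credits and whether it sits in its band."""
    total = sum(credits.values())
    if total <= 0:
        raise ValueError("programme must carry a positive number of credits")
    result = {}
    for part, (low, high) in BANDS.items():
        share = credits.get(part, 0) / total
        result[part] = (share, low <= share <= high)
    return result


# A hypothetical 180-credit bachelor's degree.
example = {"core": 120, "guided": 40, "free": 20}
for part, (share, ok) in check_split(example).items():
    print(f"{part}: {share:.1%} ({'within' if ok else 'outside'} the suggested band)")
# core: 66.7% (within the suggested band)
# guided: 22.2% (within the suggested band)
# free: 11.1% (within the suggested band)
```

A real validation would also need to handle modules that count towards more than one category (a minor that doubles as guided breadth, say), which this sketch ignores.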

The UK’s subject benchmark statements, if properly refreshed (and consolidated down a bit), could provide the regulatory infrastructure for it all. Australia undertook a version of this in 2010 through its Learning and Teaching Academic Standards project, which defined threshold learning outcomes (TLOs) for major discipline groupings through extensive sector consultation (over 420 meetings with more than 6,100 attendees). Those TLOs now underpin the quality regime of the Tertiary Education Quality and Standards Agency (TEQSA) and enable programme-level approval while protecting autonomy.

Bigger programmes, better choice

The white paper’s information provision agenda isn’t wrong – it’s just addressing the wrong problem at the wrong end of the process. Publishing earnings data doesn’t solve cognitive overload from tens of thousands of courses, quality ratings don’t help students whose interests evolve and who need flexibility to pivot, and historic entry grades don’t fix the rigidity that manufactures regret.

What would actually help is structural reform that the international evidence consistently supports – consolidation to roughly 20 to 40 programmes per institution (aligned with subject benchmark statement areas), with substantial protected module choice within those programmes, organised into clear categories like minors, languages, and interdisciplinary options.

Some of those groups of individual modules might struggle to recruit if they were whole courses – think music and languages. But they could well sustain research-active academics if they existed within broader structures, as they do across Europe. Fewer, clearer programmes at entry when students have least context, and more, structured flexibility during the degree when students have expertise to choose wisely.

The efficiency argument is real – maintaining thousands of separate course codes, each with approval processes, quality assurance, marketing materials, and UCAS coordination is absurd overhead for what’s often just different permutations of the same modules. See also hundreds of “programme leaders” each having to be chased to fill a form in.

Fewer programme directors with more module convenors beneath them is far more rational. And crucially, modules serve multiple student populations (what other systems would call majors and minors, and students taking breadth from elsewhere), making specialist provision viable even with smaller cohorts.

The equality case is compelling – guided pathways with structured choice demonstrably improve outcomes for first-in-family students, students of colour, and low-income students, populations that regulators are charged with protecting. If current choice architecture systematically disadvantages exactly these students, that’s not pedagogical preference – it’s a regulatory failure.

And the evidence on what students actually want once enrolled validates it all – they value depth in their chosen subject, they want autonomous choice over breadth options (not forced generic modules), they benefit from interdisciplinary exposure when it’s purposeful, and they need flexibility to correct course when their goals evolve.

The white paper could have engaged with any of this. Instead, we get promises to publish more data on UCAS. It’s more Spotify features when what students need is a curated record collection and the freedom to build their own mixtape once they know what they actually like.

What little reform is coming is informed by the assumption that if students just had better search filters, unlimited streaming would finally work. It won’t.