Looking back on my lifelong history of learning experiences, the ones that I would rank as most effective and memorable were the ones in which the instructor truly saw me, understood my motivations and encouraged me to apply the learning to my own circumstances. This critical aspect of teaching and learning is included in almost every meaningful pedagogical approach. We commonly recognize that the best practices of our field include a sensitivity to and understanding of the learner’s experiences, motivations and goals. Unless we respond to the learner’s needs, we will fall short of the common goal of having learners internalize what they learn.

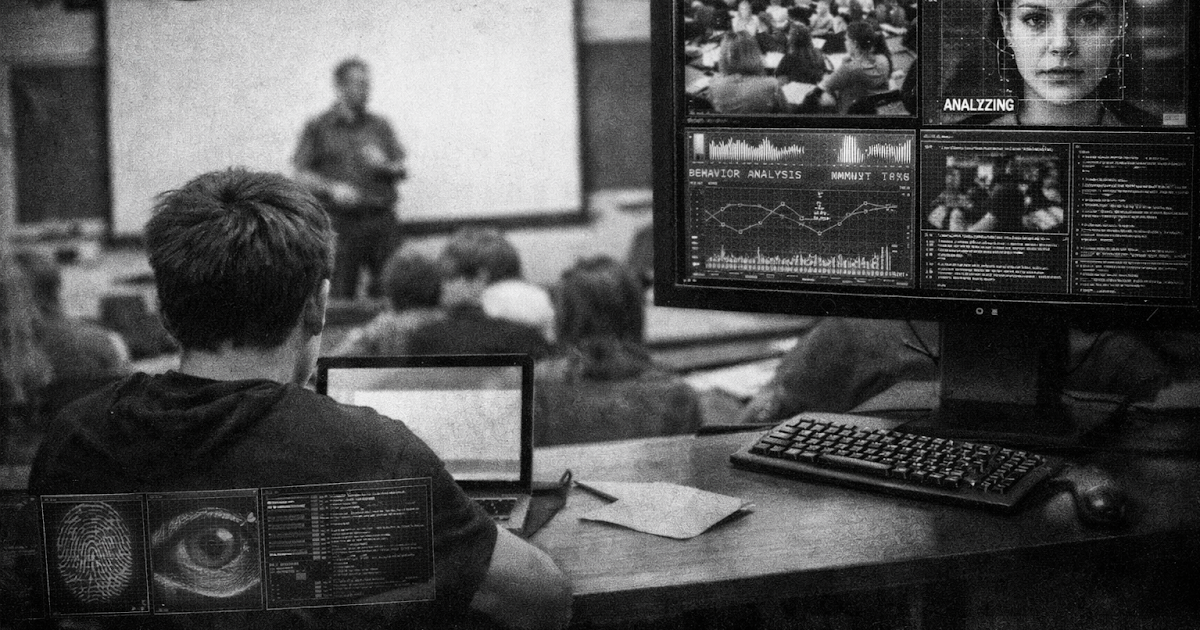

Some might believe that AI, as a computer-based system, merely addresses the facts, formulas and figures of quantitative learning rather than engaging with the learner in an emotionally intelligent way. In its initial development that may have been true; however, AI has since developed the ability to recognize and respond to emotional aspects of the learner’s responses.

In September 2024, the South-East Europe Design Automation, Computer Engineering, Computer Networks and Social Media Conference included research by four professors from the University of West Attica in Egaleo, Greece (Theofanis Tasoulas, Christos Troussas, Phivos Mylonas and Cleo Sgouropoulou), titled “Affective Computing in Intelligent Tutoring Systems: Exploring Insights and Innovations.” The authors described the importance of incorporating affective engagement into the development of learning systems:

“Integrating intelligent tutoring systems (ITS) into education has significantly enriched personalized learning experiences for students and educators alike. However, these systems often neglect the critical role of emotions in the learning process. By integrating affective computing, which empowers computers to recognize and respond to emotions, ITS can foster more engaging and impactful learning environments. This paper explores the utilization of affective computing techniques, such as facial expression analysis and voice modulation, to enhance ITS functionality. Case studies and existing systems have been scrutinized to comprehend design decisions, outcomes, and guidelines for effective integration, thereby enhancing learning outcomes and user engagement. Furthermore, this study underscores the necessity of considering emotional aspects in the development and deployment of educational technology to optimize its influence on student learning and well-being. A major conclusion of this research is that integration of affective computing into ITS empowers educators to customize learning experiences to students’ emotional states, thereby enhancing educational effectiveness.”

In a special edition of the Journal of Education Sciences published in August 2024, Jorge Fernández-Herrero writes in a paper titled “Evaluating Recent Advances in Affective Intelligent Tutoring Systems: A Scoping Review of Educational Impacts and Future Prospects,”

“Affective intelligent tutoring systems (ATSs) are gaining recognition for their role in personalized learning through adaptive automated education based on students’ affective states. This scoping review evaluates recent advancements and the educational impact of ATSs, following PRISMA guidelines for article selection and analysis. A structured search of the Web of Science (WoS) and Scopus databases resulted in 30 studies covering 27 distinct ATSs. These studies assess the effectiveness of ATSs in meeting learners’ emotional and cognitive needs. This review examines the technical and pedagogical aspects of ATSs, focusing on how emotional recognition technologies are used to customize educational content and feedback, enhancing learning experiences. The primary characteristics of the selected studies are described, emphasizing key technical features and their implications for educational outcomes. The discussion highlights the importance of emotional intelligence in educational environments and the potential of ATSs to improve learning processes.”

Notably, agentic AI models have been tasked with monitoring the changing emotions of learners and adapting in response. Tom Mangan wrote last month in an EdTech article titled “AI Agents in Higher Education: Transforming Student Services and Support,”

“Agents will be able to gather data from multiple sources to assess a student’s progress across multiple courses. If the student starts falling behind, processes could kick in to help them catch up. Agents can relieve teachers and administrators from time-consuming chores such as grading multiple-choice tests and monitoring attendance. The idea is catching on. Andrew Ng, co-founder of Coursera, launched a startup called Kira Learning to ease burdens on overworked teachers. ‘Kira’s AI tutor works alongside teachers as an intelligent co-educator, adapting in real-time to each student’s learning style and emotional state,’ Andrea Pasinetti, Kira Learning’s CEO, says in an interview with The Observer.”

We are no longer limited to transactional chatbots that respond to questions from students without regard to their background, whether that be academic, experiential or even emotional. Using the capabilities of advanced AI, our tools can identify, analyze and adapt to a range of learner emotions. These capabilities are often the hallmark of excellent, experienced faculty members who do not teach only to the median of the class but instead offer personalized responses to meet the interests and needs of individual students.

As we look ahead to the last half of this semester, and succeeding semesters, we can expect that enhanced technology will enable us to better serve our learners. We will be able to identify growing frustration where it arises, as well as opportunities to accelerate the pace of the learning experience when learners display comfort with the learning materials and readiness to advance ahead of others in the class.

We all recognize that this field is moving very rapidly. It is important that we have leaders at all levels who are prepared to experiment with emerging technologies, demonstrate their capabilities and lead discussions on their potential for implementation. The results can be most rewarding, with a higher percentage of learners more comfortably reaching their goals. Are you prepared to take the lead in demonstrating these technologies to your colleagues?