Elon Musk’s days with DOGE appear numbered—the unelected billionaire bureaucrat said Tuesday that his time spent leading the agency-gutting U.S. Department of Government Efficiency will “drop significantly” next month. As Tesla’s profits plummet, the world’s richest man faces opposition from both Trump administration officials and voters.

DOGE’s legacy remains unclear. Lawsuits are challenging its attempted cuts, including at the U.S. Education Department. Musk seems to have scaled back his planned overall budget savings from $1 or $2 trillion to $150 billion, and it’s unclear whether DOGE will achieve even that.

But something may outlive Musk’s DOGE: all the state iterations it has inspired, with legislators and governors borrowing or riffing off the name. Iowa’s Republican governor created the Iowa DOGE Task Force. Missouri’s GOP-controlled Legislature launched Government Efficiency Committees, calling them MODOGE on Musk’s X social media platform. Kansas lost the reference to the original doge meme when it went with COGE, for its Senate Committee on Government Efficiency.

But, as with the federal version, the jokey names for these state offshoots may belie the serious impact they could have on governments and public employees—including state higher education institutions and faculty.

To take perhaps the most glaring example, the sweeping requests from the Florida DOGE team, which is led by a former federal Department of Transportation inspector general, have alarmed scholars.

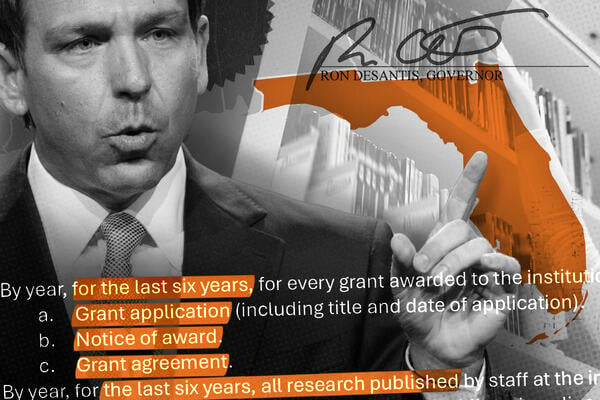

Earlier this month, the Florida DOGE asked public college and university presidents to provide an account—by the end of last week—of “all research published by staff” over the last six years, including “Papers and drafts made available to the public or in online academic repositories for drafts, preprints, or similar materials.”

“If not contained therein, author’s name, title, and position at the institution” must be provided, according to the letters the presidents received. The letters didn’t say what this and other requests were for.

The Florida DOGE also requested information on all grants awarded to institutions over the last six years, asking for each institution’s policy on allocating grants “for purposes of indirect cost recovery, including procedures for calculation.” Further, it requested an account of “all filled and vacant positions held by any employee with a non-instructional role.”

By the end of April, Florida’s public institutions must also provide the “Length of research associated” with each research publication, funding sources associated with the research and any “publications about the research” from the researcher or institution. In addition, the state DOGE is requesting funding sources for each institution’s noninstructional positions and the names of the nonstudent employees administering the grants.

And that may not be the end of the DOGE demands. In a March 26 letter, the state DOGE team told college presidents that it will conduct site visits “to ensure full compliance” with the governor’s executive order that created it, “as well as existing Florida law.” It said it may in the future request various other information, including course descriptions, syllabi, “full detail” on campus centers and the required end of diversity, equity and inclusion activities.

The requests so far from the Florida DOGE are the latest in a string of state actions that faculty say threaten to infringe on, or have already reduced, academic freedom. Dan Saunders, lead negotiator for the United Faculty of Florida union at Florida International University and a tenured associate professor of higher education, expressed concerns about what he called a “continuation of a chilling effect on faculty in terms of what we research and publish.”

“The lack of any meaningful articulation as to why they’re looking for this data and what they’re going to do with it just adds to the suspicions that I think the state has earned from the faculty,” Saunders said. “It’s clear that this is part of a broader and multidimensional attack” on areas of scholarship such as women’s and gender studies—part of a “comprehensive assault” on the “independence of the university,” he said.

“If Florida DOGE is following the patterns of the federal DOGE, then I think we can expect some radical oversimplifications of nuanced data and some cherry picking” of texts that an “unsophisticated AI will highlight,” he said. Noting how much research is published over six years, he questioned “how anyone is supposed to engage meaningfully” with that much information.

David Simmons, president of the University of South Florida’s Faculty Senate and a tenured engineering professor, said many faculty are “reasonably” concerned that this request is part of an effort to target “certain ideas that are disfavored by certain politicians.” Simmons—who stressed that he’s not speaking on behalf of the Senate or his institution—said such targeting would be “fundamentally un-American and inconsistent with the mission of a public university.”

“We hope that’s not happening. We hope this is just an inefficient effort to collect data,” Simmons said. He noted that much of the research information that the Florida DOGE is requesting is already publicly available on Google Scholar, an online database with profiles on faculty across the country.

“Universities are being required to reproduce information that’s already freely available in some cases, and to do that they’re using considerable resources and manpower,” Simmons said. The initial two-week data request was “so large as to be nearly impossible” to fulfill, he added.

A State University System of Florida spokesperson deferred comment to the DOGE team, which didn’t respond to Inside Higher Ed’s requests for an interview or provide answers to written questions Thursday. A spokesperson for the Florida Department of Education, which includes the Florida College System, deferred comment to Republican governor Ron DeSantis’s office, which responded via email but didn’t answer multiple written questions.

“In alignment with previous announcements and correspondence with all 67 counties, 411 municipalities, and 40 academic institutions the Florida DOGE Task Force aims to eliminate wasteful spending and cut government bloat,” a DeSantis spokesperson wrote. “If waste or abuse is identified during our collaborative efforts with partnering agencies and institutions, each case will be handled accordingly.”

‘DOGE Before DOGE Was Cool’

When Donald Trump returned to the White House in January and announced DOGE’s creation, he suggested it was an effort to cut the alleged waste his Democratic predecessor had allowed to fester. But DeSantis—who lost to Trump in the GOP presidential primary—launched his own DOGE in a state that he’s been leading for six years.

“Florida was DOGE before DOGE was cool,” DeSantis posted on X Feb. 24. (His actions in higher education have, in many ways, presaged what Trump is now doing nationally.)

So, perhaps not surprisingly, DeSantis’s executive order creating the Florida DOGE that day began by saying the state already has a “strong record of responsible fiscal management.” A list of rosy financial stats followed before DeSantis finally wrote, “Notwithstanding Florida’s history of prudent fiscal management relative to many states in the country, the State should nevertheless endeavor to explore opportunities for even better stewardship.”

“The State of Florida should leverage cutting edge technology to identify further spending reductions and reforms in state agencies, university bureaucracies, and local governments,” DeSantis wrote, echoing, at least in language, the tech-focused approach of the federal DOGE.

He established the DOGE team within the Executive Office of the Governor, tasking it in part to work with the statewide higher education agencies to “identify and eliminate unnecessary spending, programs, courses, staff, and any other inefficiencies,” including “identifying and returning unnecessary federal grant funding.” The executive order says state agencies must set up their own DOGE teams, which will identify grants “that are inconsistent with the policies of this State and should be returned to the American taxpayer in furtherance of the President’s DOGE efforts.”

This executive order expires about a year from now.

In an emailed statement, Teresa M. Hodge, the statewide United Faculty of Florida union president, said the request for faculty publication records “is not about transparency or accountability; it is about control.”

“Our members should not be forced to defend their scholarship, or their silence, in a political witch hunt,” Hodge said. “We stand united in ensuring that Florida’s faculty are free to teach, conduct research, and to speak without fear of retaliation.”