Financial pressures across the higher education sector have necessitated a closer look at the various incomes and associated costs of the research, teaching and operational streams. For years, larger institutions have relied upon the cross-subsidy of their research, primarily from overseas student fees – a subsidy that is under threat from changes in geopolitics and indeed our own UK policies on immigration and visa controls.

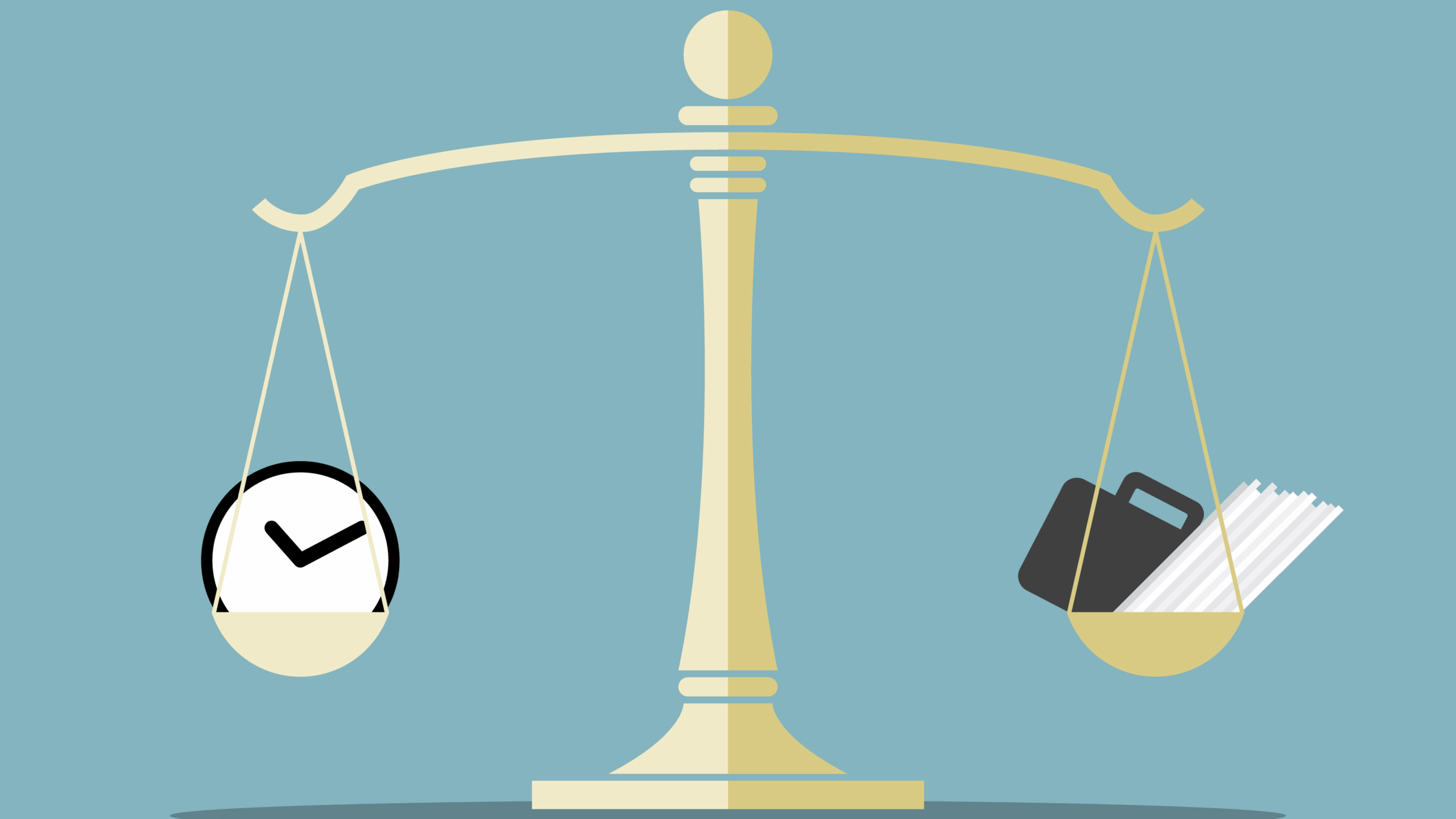

The UK is now between a rock and a hard place: how can it support the volume and focus of research needed to grow the knowledge-based economy of our UK industrial strategy, while also addressing the financial deficits that even the existing levels of research create?

Several research leaders have recently suggested that a more efficient research system is one where higher education institutions focus on their strengths and collaborate more. But while we acknowledge that efficiency savings are required, and that the relentless growth of bureaucracy – partly imposed by government but also self-inflicted within the HEIs – can be addressed, the funding gulf is far wider than these savings could possibly bridge.

Efficiency savings alone will not close the structural deficits in the system. Furthermore, given that grant application success rates are systemically below 20 per cent and frequently below ten or even five per cent, the sector is already funding only its strongest applications. Fundamentally, demand currently far outstrips supply, leading to inefficiency and poor prioritisation decisions.

Since most research costs go on the salaries and student stipends of the researchers themselves, significant cost-cutting necessitates a reduction in the size of the research workforce – a reduction that would fly in the face of our future workforce requirement. We could leave this inevitable reduction to market forces, but the resulting disinvestment will likely impact the resource-intensive subjects upon which much of our future economic growth depends.

We recognise also that solutions cannot solely rely upon the public purse. So, what could we do now to improve both the efficiency of our state research spend and third-party investment into the system?

What gets spent

First of all, the chronic underfunding of the teaching of UK domestic students cannot continue, as it puts even further pressure on institutional resources. The recent index-linking of fees in England was a brave step to address this, but to maintain a viable UK research and innovation system, the other UK nations will also urgently need to address the underfunding of teaching. And in doing so we must remain mindful of the potential unintended consequences that increased fees might have on socio-economic exclusion.

Second, we must pay a fair price for the research we do. Much has been made of the seemingly unrestricted “quality-related” funding (QR, or REG in Scotland) driven by the REF process. The reality is that QR simply makes good a shortfall: TRAC analysis now estimates that research funding covers less than 70 per cent of the true costs of the research.

It ought to be noted that this missing component exists across all the recently announced research buckets: curiosity-driven, government-priority, and scale-up support. The government must recognise that QR is not purely the funding of discovery research, but rather it is the dual funding of research in general – and that the purpose of dual funding is to tension delivery models to ensure HEI efficiency of delivery.

Next, there is a pressing need for UKRI to focus resource on the research most likely to lead to economic or societal benefit. This research spans all disciplines from the hardest of sciences to the most creative of the arts.

Although claims of such benefit are made within every grant proposal, perhaps the best evidence of their validity lies in the co-investment these applications attract. We note that schemes such as EPSRC’s prosperity partnerships and its quantum technology hubs show that, when packaged to encompass a range of technology readiness levels (TRL), industry is willing to support both low and high TRL research.

We would propose that across UKRI more weighting be given to those applications supported by matching funds from industry or, in the case of societal impact, by government departments or charities. The next wave of matched co-funding of local industry-linked innovation should also privilege schemes which elicit genuine new industry investment, as opposed to in-kind funding, as envisaged in Local Innovation Partnership Funds. This avoids increasing a research volume that is already unsustainable.

The research workforce

In recent times, the UKRI budgets and funding schemes for research and training (largely support for doctoral students) have been separated from each other. This can mean that the work of doctoral students is detached from the cutting-edge research for which they were once the engine house. This decoupling means that the research projects themselves now require allocated, and far more expensive, post-doctoral staff to deliver. We see nothing in the recent re-branding of doctoral support to “landscape” and “focal” awards that is set to change this disconnect.

It should be acknowledged that centres for doctoral training were correctly introduced nearly 20 years ago to ensure our students were better trained and better supported – but we would argue that the sector has now moved on, and graduate schools within our leading HEIs address these needs without the need for duplication by doctoral centres.

Our proposal would be that, except for a small number of specific areas and initiatives supported by centres for doctoral training (focal awards) and central to the UK’s skills need, the normal funding of UKRI-supported doctoral students should be associated with projects funded by UKRI or other sources external to higher education institutions. This may require the reassignment of recently pooled training resources back to the individual research councils, rebalanced to meet national needs.

This last point leads to the question of what the right shape of the HEI-based research-focused workforce is. We would suggest that emphasis should be placed on increasing the number of graduate students – many of whom aspire to move on from the higher education sector after their graduation to join the wider workforce – rather than post-doctoral researchers who, regrettably, mistake their appointment for a first step to a permanent role in a sector which is unlikely to grow.

Post-doctoral researchers are of course vital to the delivery of some research projects and comprise the academic researchers of the future. Emerging research leaders should continue to be supported through, for example, future research leader fellowships, empowered to pursue their own research ambitions. This reshaping of the research workforce will go some way towards rebalancing supply and demand.

Organisational change

Higher education institutions are hotbeds of creativity and empowerment. However, typical departments have an imbalanced distribution of research resources where appointment and promotion criteria are linked to individual grant income. While not underestimating the important leadership roles this implies, we feel that research outcomes would be better delivered through internal collaborations of experienced researchers where team science brings complementary skills together in partnership rather than subservience.

This change in emphasis requires institutions to consider their team structures and HR processes. It also requires funders to reflect these changes in their assessment criteria and selection panel working methods. Again, this rebalancing of the research workforce would go some way to addressing supply and demand while improving the delivery of the research we fund.

None of these suggestions represents a quick fix for our financial pressures, which need to be addressed. But taken together we believe they are a supportive step, helping stabilise the financial position of the sector, while ensuring its continuing contribution to the UK economy and society. If we fail to act, the UK risks a disorderly reduction of its research capability at precisely the moment our global competitors are accelerating.