The autonomy of states in setting their own higher education policies creates a series of natural experiments across the United States, offering insights into what approaches work best in particular contexts. Given the importance of local considerations, there are few universal policy prescriptions that can be recommended with confidence. Sadly, this complexity was overlooked in Saul Geiser’s recent Inside Higher Ed essay entitled “Why the SAT Is a Poor Fit for Public Universities.”

My position is not that all, or even any, public universities should require standardized test scores. In fact, I share Geiser’s view that a university’s “mission shapes admission policy.” But it is precisely because of this principle that I contend the SAT cannot be dismissed as a poor fit for public universities without considering how institutions operationalize their missions and define their priorities.

Vertical Stratification Within a Public University System

In my view, Geiser’s argument rests on a fundamentally flawed comparison between elite private institutions and public university systems, which often include an elite flagship campus alongside a broader range of institutions. The comparison is particularly surprising given Geiser’s long-standing association with the University of California system.

The California Master Plan for Higher Education has long been studied and celebrated for establishing a public postsecondary education system consisting of institutions with differentiated missions and admission processes. Under its original design, the community colleges provided open access to all high school graduates and adult learners, offering a stepping-stone to the four-year institutions. The California State University institutions admitted the top third of high school graduates, focusing on undergraduate education and teacher preparation. The University of California institutions were reserved for the top eighth of high school graduates and emphasized research and doctoral education.

By using high school class rank to sort students into the different tiers of the system, the Master Plan established a baseline for admissions to both UC and CSU institutions. This framework enabled the emergence of two elite public flagship campuses, in Berkeley and Los Angeles, that prioritized academic excellence, alongside accessible undergraduate institutions in the CSU system that served as drivers of economic development and social mobility.

Reorienting the analysis to a comparison between elite public and private institutions would have provided a stronger basis for discussing selective admissions, as both of these institutional types receive far more applications than available spaces in their first-year cohorts. In these circumstances, institutions must make choices about how to differentiate among a pool of qualified applicants.

It is common to start by assessing an applicant’s academic achievement. In a competitive pool, this assessment is less about whether the applicant meets the university’s minimum academic standards and more about how the applicant’s achievements compare with those of other applicants to the same program or institution. Academic excellence is often an important distinction in such a pool, but it can be defined in different ways.

Assessing Academic Excellence

Many researchers agree that using both high school GPA and standardized test scores yields a more accurate assessment of academic potential than relying on either measure alone. Geiser’s own research from 2002 shows that combining high school GPA and test scores better predicted UC students’ first-year grades than high school GPA alone. I was therefore surprised that he presented GPAs and test scores as mutually exclusive alternatives in admission policy.

Although somewhat dated, his 2002 analysis produced a compelling finding: the combination of SAT Subject Test scores (discontinued in 2021) and high school GPA accounted for a greater proportion of the variance in UC students’ first-year GPA than the combination of high school GPA and SAT scores. This finding suggests that precollege, discipline-specific achievement matters.

This should come as no surprise, as college curricula for artists, anthropologists and aeronautical engineers differ substantially. It is reasonable to expect that the predictors of success in these programs would also differ. As such, academic programs within universities may be well served by setting admission standards calibrated to the specific competencies of their respective disciplines—a portfolio for the artist, an academic paper for the anthropologist and a math exam for the engineer.

Although Geiser maintains that “academic standards haven’t slipped” at the UCs since they went test-free four years ago, a recent Academic Senate report from the University of California, San Diego, suggests otherwise. According to the report, about one in eight first-year students this fall did not meet high school math standards on placement exams despite having strong high school math grades, a share nearly 30 times higher than in 2020. About one in 12 did not even meet middle school standards. This mismatch between GPAs and placement exam scores underscores critics’ concerns about high school grade inflation and undermines the reliability of GPA as the sole marker of academic achievement. The report’s authors called for an investigation of grading standards across California high schools and recommended that the UC system re-examine its standardized testing requirements.

In light of these findings, it is understandable that faculty in quantitative disciplines, such as engineering and finance, would want to better gauge applicants’ readiness for their programs by considering test scores, even if only the results from the SAT or ACT math sections. However, if one in 12 students is not meeting middle school math standards, the greater concern is that these students, regardless of major, will require remediation, creating longer, more expensive and more difficult paths to graduation.

Variation in Standardized Testing Requirements Across States

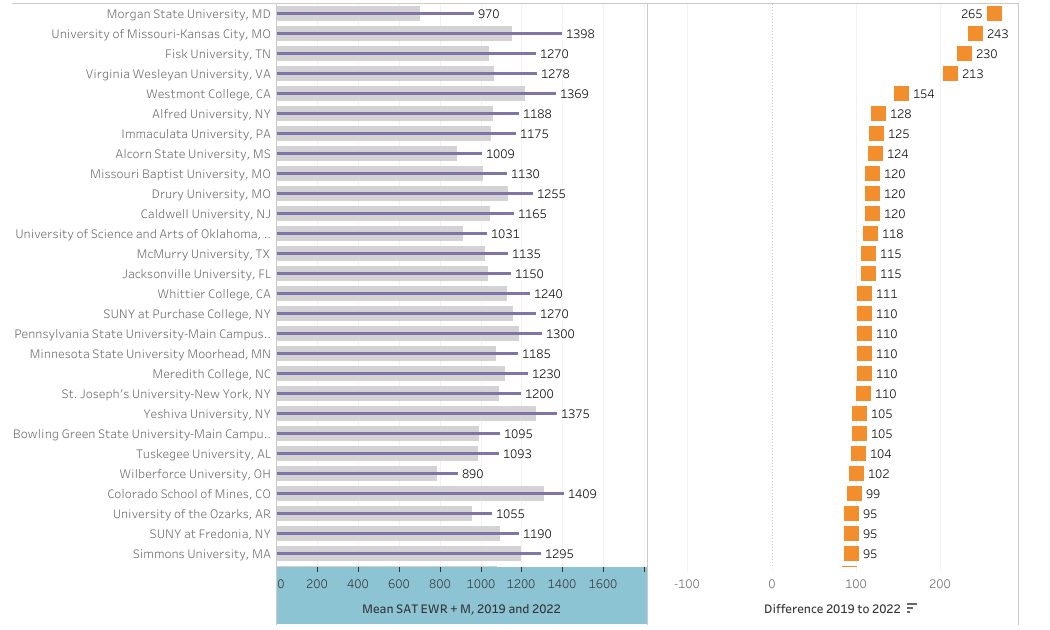

I was surprised that Geiser did not acknowledge this report, instead arguing that the reinstatement of standardized test requirements at Ivy League institutions “provided intellectual cover for the SAT’s possible revival” nationwide. This characterization overlooks the fact that, according to the College Board, some public institutions in at least 11 states (Alabama, Arkansas, Florida, Georgia, Indiana, Louisiana, Mississippi, Ohio, Tennessee, Texas and West Virginia) already require standardized test scores in admission. Notably, Florida’s public universities never suspended their test requirements during the COVID pandemic, even as all of the Ivies did.

In Georgia and Tennessee, public universities waived test requirements during the COVID pandemic but subsequently moved to reinstate the requirements for the University of Tennessee system and for at least seven of the 26 institutions in the University System of Georgia, including the Georgia Institute of Technology and the University of Georgia.

Among public universities in Texas and Ohio, only the states’ flagships, the University of Texas at Austin and the Ohio State University, have reinstated standardized test requirements for all students. While Indiana’s flagship remains test optional, the state’s premier land-grant institution, Purdue University, reinstated its test requirement in 2024. And in Alabama, both the land-grant Auburn University and the flagship University of Alabama in Tuscaloosa have announced plans to once again require test scores from all first-year applicants.

Some public institutions, including Southern Arkansas University, Fairmont State University in West Virginia and Alcorn State University (a historically Black institution in Mississippi), waive test requirements for students with higher GPAs. In practice, this approach prioritizes classroom performance while giving students with lower high school grades a second chance to demonstrate their proficiency and potential.

These examples show how variation in admission practices across institutions enables public systems to pursue their missions and a diverse set of state goals in ways that no single institution within the system could. Such systems can offer broad access to four-year programs while also upholding academic standards and pursuing academic excellence. Whether all, some or none of the institutions in a public system require the SAT or ACT depends on each state’s goals and strategies.

While most public institutions adopted test-optional admissions during the pandemic, California implemented a test-blind policy that prohibited the consideration of test scores. Drawing on my experience as an admission officer, I applaud this decision. Test-optional admission is an easy policy decision, but I have seen how test-optional policies can create two different admission processes, in which test scores are effectively required for some groups of students and not for others. Test-optional policies muddy the waters, offering less transparency in an already complicated process. The UC and CSU systems avoided this mistake by evaluating all applicants on equal footing, but this does not mean that other public institutions need to do the same.

Aligning Admissions With Mission

Public universities are facing numerous enrollment pressures. Shifting state and regional demographics continue to force admission leaders to adjust their recruitment strategies and admission policies. The growing prominence of artificial intelligence seems likely to redefine the academic experience and admission processes, although exactly when and how remains unknown. Meanwhile, states’ expected increase in Medicaid obligations is likely to squeeze state funding for higher education and increase institutions’ reliance on tuition revenue, which will ultimately shape their budgets and enrollments.

A uniform, one-size-fits-all approach to admissions policy, such as test-blind admissions for all public universities, does not respect the autonomy of states and institutions and does not serve the diversity of institutional contexts. Public universities should continue to tailor admissions policies to their specific needs, which may include variation across campuses within a public system or even among programs within the same institution. What matters most is that admission policies remain transparent, are applied consistently to all applicants within a program and closely align with the institution’s mission and public purpose.