Get stories like this delivered straight to your inbox. Sign up for The 74 Newsletter

A new national study shows that Americans’ rates of reading for pleasure have declined radically over the first quarter of this century, and that recreational reading can be linked to school achievement, career compensation and growth, civic engagement, and health. Learning how to enjoy reading, and not merely how to read proficiently, isn’t just for hobbyists; it’s a necessary life skill.

But the conditions under which English teachers work are detrimental to the cause – and while book bans are in the news, the top-down pressure to measure up on test scores is a more pervasive, more longstanding culprit. Last year, we asked high school English teachers to describe their literature curriculum in a national questionnaire we plan to publish soon. From responses representing 48 states, we heard descriptions such as “soul-deadening,” “only that which students will see on the test,” and “too [determined] by test scores.”

These sentiments certainly aren’t new. In a similar questionnaire distributed in 1911, teachers described English class as “deadening,” focused on “memory instead of thinking,” and demanding “cramming for examination.”

Teaching to the test is as old as English itself – as a secondary school subject, that is. Teachers have questioned the premise for just as long because too many have experienced a radical disconnect between how they are asked or required to teach and the pleasure that reading brings them.

High school English was first established as a test-driven subject around the turn of the 20th century. Even at a time when relatively few Americans attended college, English class was oriented around building students’ mastery of now-obscure literary works that they would encounter on the College Entrance Exam.

The development of the Scholastic Aptitude Test in 1926 and the growth of standardized testing since No Child Left Behind have only solidified what was always true: As much as we think of reading as a social, cultural, even “spiritual” experience, English class has been shaped by credential culture.

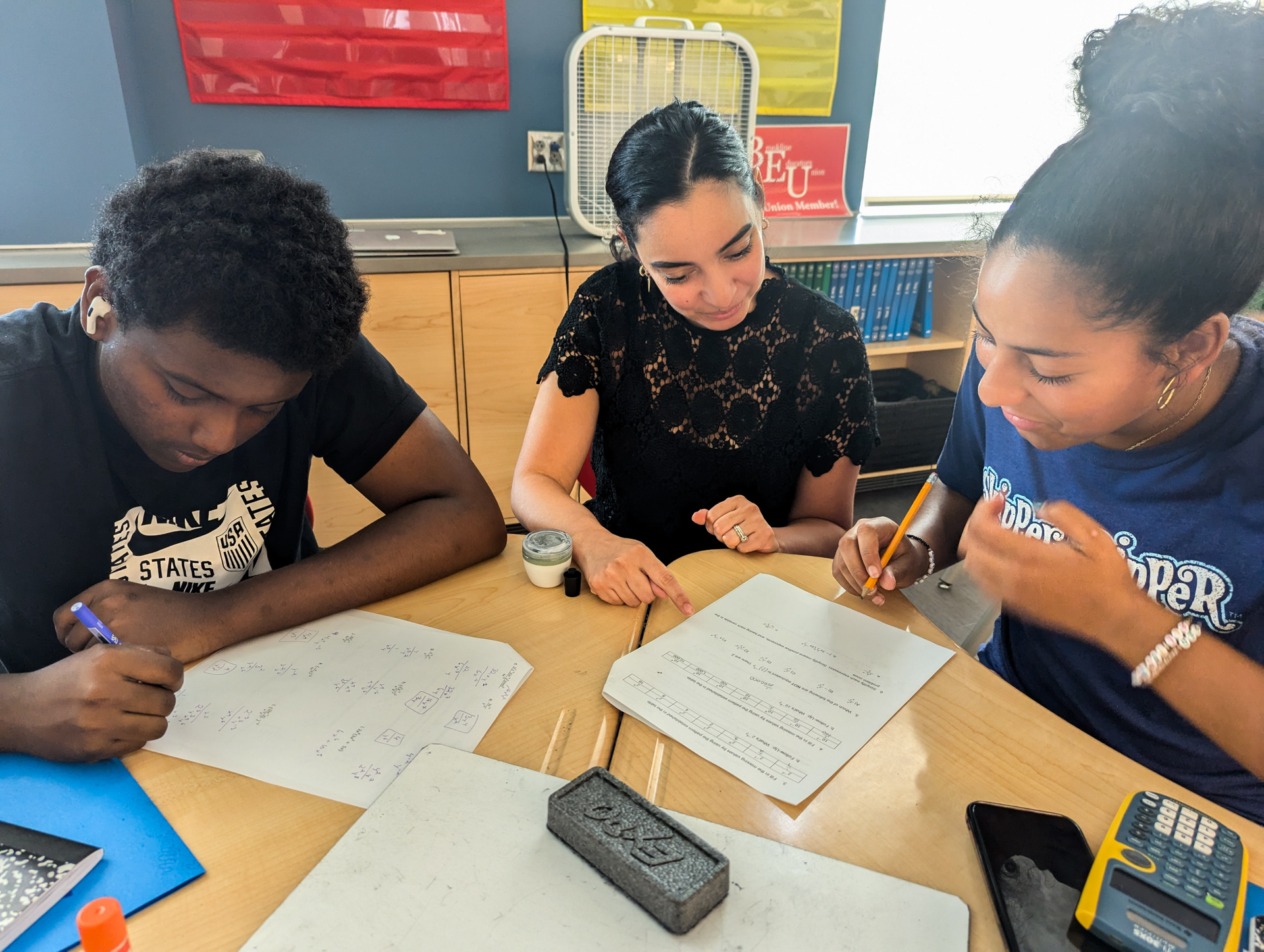

Throughout this history, many teachers felt that preparing students for college was too limited a goal; their mission was to prepare students for life. They believed that studying literature was an invaluable source of social and emotional development, preparing adolescents for adulthood and for citizenship. It provided them with “vicarious experience”: Through reading, young people saw other points of view, worked through challenging problems, and grappled with complex issues.

Indeed, a national study conducted in 1933 asked teachers to rank their “aims” in literature instruction. They listed “vicarious experience” first, “preparation for college” last.

The results might not look that different today. Ask an English teacher what brought her to the profession, and a love of reading is likely to top the list. What is different today is the unmatched pressure to prepare students for a constant cycle of state and national examinations and for college credentialing.

Increasingly, English teachers are compelled to use online curriculum packages that mimic the examinations themselves, composed largely of excerpts from literary and “informational” texts instead of the whole books that were more the norm in previous generations. “Vicarious experience” has less purchase in contemporary academic standards than ever.

Credentialing, however, does not equal preparing. Very few higher education skills map neatly onto standardized exams, especially in the humanities. As English professors, we can tell you that an enjoyment of reading – not just a toleration of it – is a key academic capacity. It produces better writers, more creative thinkers, and students less likely to need AI to express their ideas effectively.

Yet we haven’t given K-12 teachers the structure or freedom to treat reading enjoyment as a skill. The data from our national survey suggests that English teachers and their students find the system deflating.

“Our district adopted a disjointed, excerpt-heavy curriculum two years ago,” a Washington teacher shared, “and it is doing real damage to students’ interest in reading.”

From Tennessee, a teacher added: “I understand there are state guidelines and protocols, but it seems as if we are teaching the children from a script. They are willing to be more engaged and can have a better understanding when we can teach them things that are relatable to them.”

And from Oregon, another tells us that because “state testing is strictly excerpts,” the district initially discouraged “teaching whole novels.” It changed course only after students’ exam scores improved.

Withholding books from students is especially inhumane when we consider that the best tool for improved academic performance is engagement – students learn more when they become engrossed in stories. Yet by the time they graduate from high school, many students have mastered test-taking skills but missed the window for learning to enjoy reading.

Teachers tell us that the problem is not attitudinal but structural. An education technocracy made up of test-making agencies, curriculum providers, and policymakers is squeezing out enjoyment, teacher autonomy, and student agency.

To reverse this trend, we must consider what reading experiences we are providing our students. Instead of the self-defeating cycle of test-preparation and testing, we should take courage, loosen the grip on standardization, and let teachers recreate the sort of experiences with literature that once made us, and them, into readers.
