In a study of 99,542 donors who supported causes between 2020 and 2024 with gifts totaling less than $5,000 a year (nearly 97 percent of all donors), just 5.7 percent supported education institutions. That’s according to “The Generosity Report: Data-Backed Insights for Resilient Fundraising,” published this April by Neon One, a provider of nonprofit operational technology.

While major gifts will always be a crucial part of higher ed advancement success, it’s important to remember that smaller gifts add up. During this uncertain time, higher ed leaders must prioritize capturing the interest of every could-be donor.

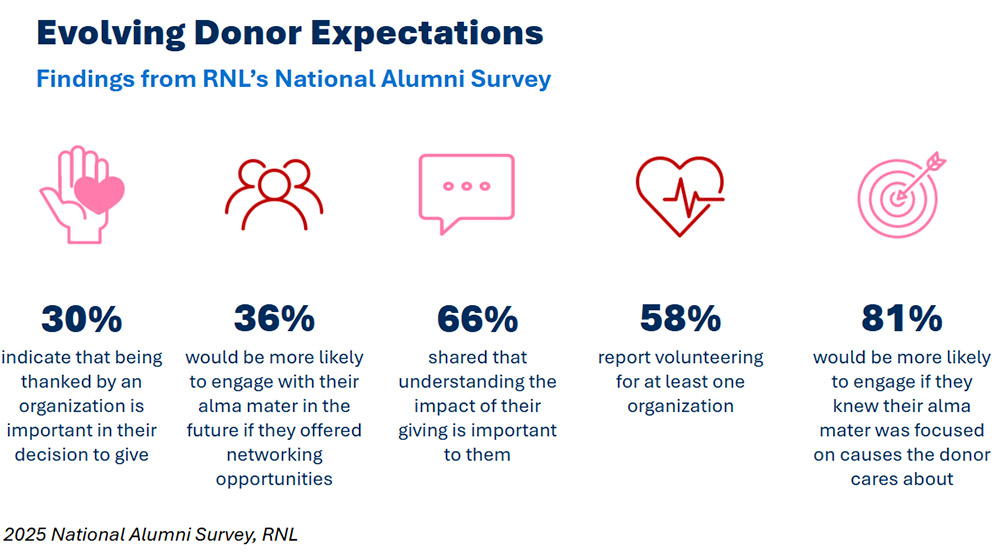

Among the donors studied, nearly 30 percent gave to more than one nonprofit, for a combined average of about $545 in 2024, an increase from $368 in 2020. Many—if they have a good relationship with a nonprofit and are asked—will increase their level of support.

In my experience, too few colleges share donation impact stories on their main giving pages. Typically, the “make a gift” or “give now” link, found prominently on an institution’s homepage, takes a visitor straight to a form. (“Yes, we’ll take that credit card info now.”) While no one wants to distract would-be donors from completing an online gift, some colleges are clearly making an effort to inspire and inform giving by showing how donations help students succeed and support research and other work that benefits the community or beyond.

In uncovering examples of colleges that use engaging narratives on their donation pages, I’ve gained a clear sense of several practices worth considering. For anyone asking how an already resource-strapped marketing and comms team is supposed to make time for additional storytelling, here’s some good news: Most institutions are probably already publishing articles that can be gently repurposed for alumni and other friends thinking about making a gift.

Following are three actions to take when the goal is telling impact stories on a main giving page.

- Find (and tweak) or create the content.

Do some sleuthing to locate any existing articles about programs and supports made possible, at least in part, by donor funding. Giving sites can include articles about major gifts that center on the donor, but stories focused on the individuals or communities that are better off because of an initiative are more compelling.

Donation-related video content, although it will probably need to be built from scratch, is a great way to highlight real student successes, whether it’s a scholarship that opened up access, emergency funds that allowed a student to stay in school or the excitement of commencement. Minnesota State University, Mankato, recorded accepted students finding out they had received scholarships, as well as students who had benefited from emergency funding describing how the gift had “saved the day.” Gratitude-focused videos, especially when they use student voices, need not reveal specific personal circumstances.

To help find individuals to feature, some giving pages invite students, alumni, employees and donors to suggest or contribute their own impact stories.

- Provide a mix of content formats and collections.

Slideshows, blown-up quotes and infographics (individual graphics or numbers-driven stories) are a few visual content tactics spotted on giving pages.

Lewis & Clark College tells succinct stories on its giving page through a slideshow in which three students share how their financial aid offers allowed them to enroll. The Oregon college’s giving page also includes a collection of five featured stats highlighting how gifts from the past year have made an “immediate impact on the areas of greatest need.” Rather than presenting only the most obvious numbers, such as giving totals, these data points note, for example, the number of potential jobs and internships the career center sourced or how many new titles the library purchased.

When strategizing about giving page content, consider a series of similar stories that use a standard title or title style. These can even be short first-person pieces, such as “The next decade of [community, achievement, opportunity, gratitude, etc.] begins with you,” a series created for McGill University in Montreal.

To make an impact story as effective as possible at inspiring a gift, take the extra step of adding a call-to-action message and link within each article. Even institutions that do this tend to be inconsistent about it. Try adding an italicized note at the end, a sentence within the text or a box that explains the related fund, as the University of Colorado at Boulder does.

- Be thoughtful about giving page content organization.

Content-rich giving pages generally don’t open with a gift form or a bunch of stories. Instead, the preferred approach seems to be a single large photo or slideshow featuring students, paired with a short, impactful message.

As a visitor scrolls down, additional content tiers can offer more detail and further encouragement to give, such as students expressing gratitude for support. More comprehensive feature articles and/or collections of impact stories tend to appear toward the bottom of the page, usually as a few teaser stories and often with a link to see more. Larger collections of stories can be broken into categories and made searchable.

Some institutions place links to impact story pages in more than one place and include multiple “make a gift” buttons on the main giving page.

A new focus on giving content should also trigger some tweaks to the gift form itself. Does it include a drop-down menu with specific fund options? Can a donor write in where to direct the gift?

Or consider McGill’s approach: Each of the five big ideas listed on its giving stories page takes visitors to a gift form, followed by a description of what that idea means and then specific stories that bring it to life.

What inspirational success stories could you be sharing with friends who click to donate?