During the Empower Learners for the Age of AI (ELAI) conference earlier in December 2022, it became apparent to me that artificial intelligence (AI) not only has the potential to revolutionize the field of education, but is already doing so. But beyond the hype and enthusiasm there are enormous strategic policy decisions to be made by governments, institutions, faculty and individual students. Some of the ‘end is nigh’ messages circulating on social media in the light of the recent release of ChatGPT are fanciful click-bait; some, however, fire a warning shot across the bow of complacent educators.

It is certainly true to say that if your teaching approach is to deliver content knowledge and assess the retention and regurgitation of that same content knowledge then, yes, AI is another nail in that particular coffin. If you are still delivering learning experiences the same way that you did in the 1990s, despite Google Search (b.1998) and Wikipedia (b.2001), I am amazed you are still functioning. What the emerging fascination with AI is delivering is an accelerated pace to the self-reflective processes that all university leadership should be undertaking continuously.

AI advocates argue that by leveraging the power of AI, educators can personalize learning for each student, provide real-time feedback and support, and automate administrative tasks. Critics argue that AI dehumanises the learning process, is incapable of modelling the very human behaviours we want our students to emulate, and can be used to cheat. Like any technology, AI also has its disadvantages and limitations. I want to unpack these from three different perspectives: the individual student, faculty, and institutions.

Get in touch with me if your institution is looking to develop its strategic approach to AI.

Individual Learner

For learners whose experience is often orientated around learning management systems, or virtual learning environments, existing learning analytics are being augmented with AI capabilities. Where in the past students might be offered branching scenarios that were preset by learning designers, the addition of AI functionality offers the prospect of algorithms that analyze a student’s performance and learning approaches more deeply, and provide customized content and feedback tailored to their individual needs. This is often touted as especially beneficial for students who may have learning disabilities or those who are struggling to keep up with the pace of a traditional classroom, but surely the benefit is universal when realised. We are not quite there yet. Identifying ‘actionable insights’ is possible; the recommended actions are harder to define.

The downside for the individual learner will come from poorly conceived and implemented AI opportunities within institutions. Being told to complete a task by a system, rather than by a tutor, will be received very differently depending on the epistemological framework that you, as a student, operate within. There is a danger that companies presenting solutions that may work for continuing professional development will fail to recognise that a 10-year-old has a different relationship with knowledge. As an assistant to faculty, AI is potentially invaluable; as a replacement for tutor direction, it will not work for the majority of younger learners within formal learning programmes.

Digital equity becomes important too. There will undoubtedly be students today, from K-12 through to university, who will be submitting written work generated by ChatGPT. Currently free, for ‘research’ purposes (them researching us), ChatGPT is being raved about across social media platforms by anyone who needs to author content. But for every student who is digitally literate enough to have found their way to the OpenAI platform and can use the tool, there will be others who do not have access to a machine at home, or the bandwidth to make use of the internet, or any internet connection at all. Merely accessing the tools can be a challenge.

The third aspect of AI implementation for individuals is around personal digital identity. Everyone, regardless of their age or context, needs to recognise that ‘nothing in life is free’. Whenever you use a free web service you are inevitably being mined for data, which in turn allows the provider of that service to sell your presence on their platform to advertisers. Teaching young people about the two fundamental economic models that operate online, subscription services and surveillance capitalism, MUST be part of every curriculum. I would argue this needs to be introduced in primary schools and built on in secondary. We know that AI models require huge datasets to be meaningful, so our data is what fuels these AI processes.

Faculty

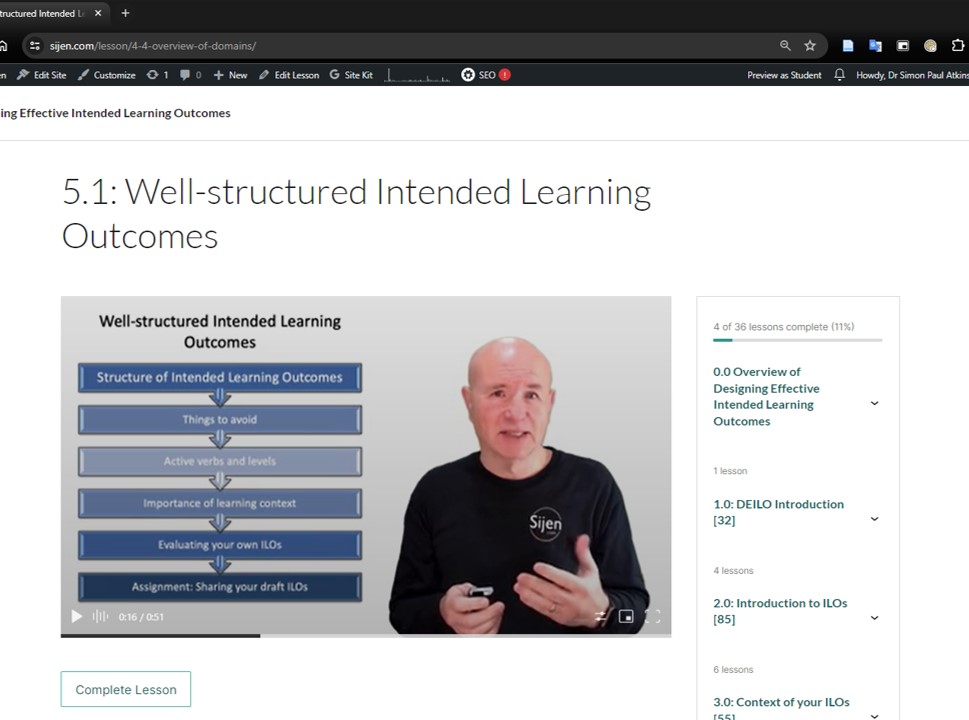

Undoubtedly faculty will gain through AI algorithms’ ability to continuously monitor a student’s progress and provide real-time feedback, support and suggestions for improvement. On a cohort basis this is proving invaluable already, allowing faculty to adjust the pace or focus of content and learning approaches. A skilled faculty member can also, within the time allowed to them, differentiate their instruction, helping students to stay engaged and motivated. Monitoring students’ progress through well-structured learning analytics is already available through online platforms.

What of the in-classroom teaching spaces? One of the sessions at ELAI showcased AI operating in a classroom, interpreting students’ body language and interactions, and even tracking their eye movements. Teachers will tell you that class sizes are a prime determinant of student success. Smaller classes mean that teachers can ‘read the room’ and adjust their approaches accordingly. AI could allow class sizes to grow beyond anything an individual faculty member could claim to manage.

One could imagine a school built with extensive surveillance capability, every classroom fitted with total audio and visual detection, physical behaviour algorithms, eye tracking and audio analysis. In that future, the advocates would suggest that the role of the faculty becomes more that of a stage manager than a subject authority. Critics would argue a classroom without a meaningful human presence is a factory.

Institutions

The attraction of AI for institutions is the promise to automate administrative tasks, such as grading assignments and providing progress reports, that are currently carried out by teaching faculty. This in theory frees up those educators to focus on other important tasks, such as providing personalized instruction and support.

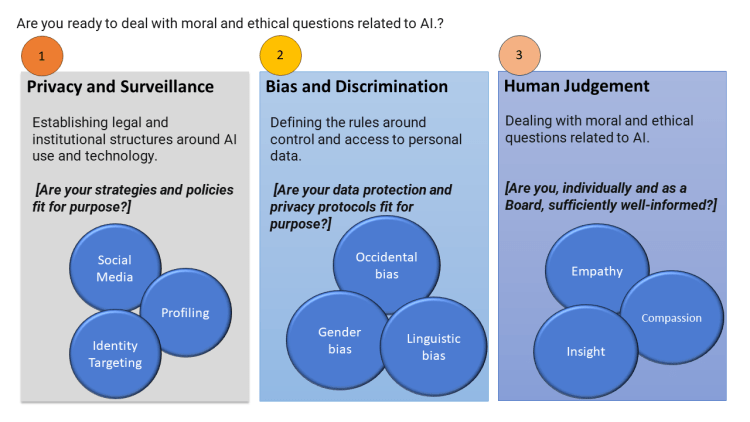

However, one concern touched on at ELAI was the danger of AI reinforcing existing biases and inequalities in education. An AI algorithm is only as good as the data it has been trained on. If that data is biased, its decisions will also be biased. This could lead to unfair treatment of certain students, and could further exacerbate existing disparities in education. AI will work well with homogeneous cohorts where the perpetuation of accepted knowledge and approaches is what is expected, and less well with diverse cohorts in the context of challenging assumptions.

This is a problem. In a world in which we need students to be digitally literate and AI literate, to challenge assumptions but also recognise that some sources are verified and others are not, institutions that implement AI based on existing cohorts are likely to restrict the intellectual growth of those that follow.

Institutions rightly express concerns about the cost of both implementing AI in education and monitoring its use. While the initial investment in AI technologies may be significant, the long-term cost savings and potential benefits may make it worthwhile. No one can be certain how the market will unfurl. It’s possible that many AI applications become so cheap under some model of surveillance capitalism that their cost is negligible, or even free. However, many AI applications, such as ChatGPT, use enormous computing power, and little of their output is cacheable and retained for reuse, so these are likely to become costly.

Institutions wanting to explore the use of AI are likely to find they are being presented with additional, or ‘upgraded’, modules for their existing Enterprise Management Systems or Learning Platforms.

Conclusion

It is true that AI has the potential to revolutionize the field of education by providing personalized instruction and support, real-time feedback, and automated administrative tasks. However, institutions need to be wary of the potential for bias, aware of privacy issues and very attentive to the nature of the learning experiences they enable.

Image created using DALL-E